Selecting Relevant Features from a Universal Representation for Few-shot Classification

Mar 20, 2020

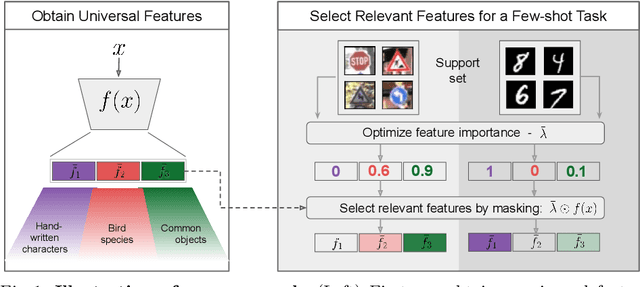

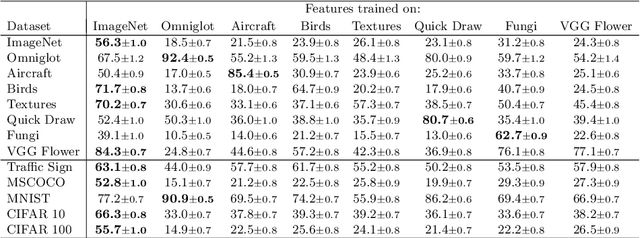

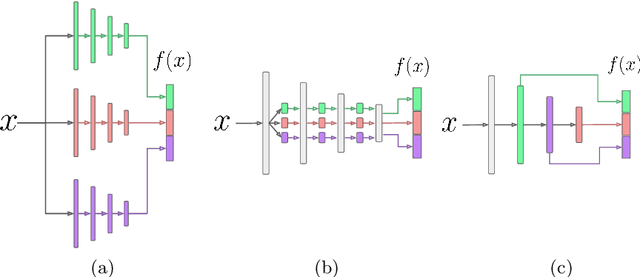

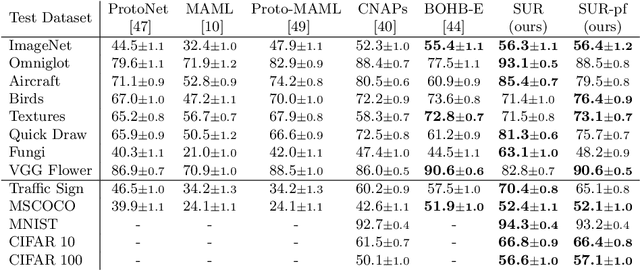

Popular approaches for few-shot classification consist of first learning a generic data representation based on a large annotated dataset, before adapting the representation to new classes given only a few labeled samples. In this work, we propose a new strategy based on feature selection, which is both simpler and more effective than previous feature adaptation approaches. First, we obtain a universal representation by training a set of semantically different feature extractors. Then, given a few-shot learning task, we use our universal feature bank to automatically select the most relevant representations. We show that a simple non-parametric classifier built on top of such features produces high accuracy and generalizes to domains never seen during training, which leads to state-of-the-art results on MetaDataset and improved accuracy on mini-ImageNet.
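The select-then-classify idea can be illustrated with a minimal NumPy sketch. It is not the authors' implementation: the abstract does not say how relevance is measured, so this sketch assumes a simple stand-in, scoring each feature extractor by the leave-one-out accuracy of a nearest-centroid classifier on the support set. All function names (`select_extractors`, `classify`, and the helpers) are hypothetical. It also assumes features are precomputed, one array per extractor, and that every class has at least two support samples.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale each row to unit length so dot products are cosine similarities."""
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)


def nearest_centroid_predict(support, support_labels, query, n_classes):
    """Non-parametric classifier: assign each query to the most similar class centroid."""
    support = l2_normalize(support)
    protos = np.stack(
        [support[support_labels == c].mean(axis=0) for c in range(n_classes)]
    )
    sims = l2_normalize(query) @ l2_normalize(protos).T
    return sims.argmax(axis=1)


def select_extractors(support_bank, support_labels, n_classes, keep=1):
    """Score each extractor on the support set and keep the best ones.

    support_bank: list of arrays of shape (n_support, d_k), one per extractor.
    The score is leave-one-out nearest-centroid accuracy (an assumption of
    this sketch, not a procedure taken from the paper).
    """
    n = len(support_labels)
    scores = []
    for feats in support_bank:
        correct = 0
        for i in range(n):
            mask = np.arange(n) != i  # hold out sample i
            pred = nearest_centroid_predict(
                feats[mask], support_labels[mask], feats[i : i + 1], n_classes
            )
            correct += int(pred[0] == support_labels[i])
        scores.append(correct / n)
    best = np.argsort(scores)[::-1][:keep]
    return sorted(best.tolist()), scores


def classify(support_bank, support_labels, query_bank, n_classes, keep=1):
    """Concatenate the selected extractors' features, then classify the queries."""
    selected, _ = select_extractors(support_bank, support_labels, n_classes, keep)
    support = np.concatenate([l2_normalize(support_bank[k]) for k in selected], axis=1)
    query = np.concatenate([l2_normalize(query_bank[k]) for k in selected], axis=1)
    return nearest_centroid_predict(support, support_labels, query, n_classes)
```

Hard top-`keep` selection is chosen here only for brevity. A soft weighting of the extractors fitted on the support set would follow the same overall structure.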
