Segmentation of Infrared Breast Images Using MultiResUnet Neural Network


Oct 31, 2020

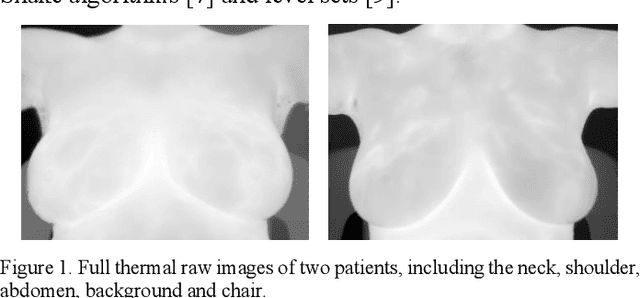

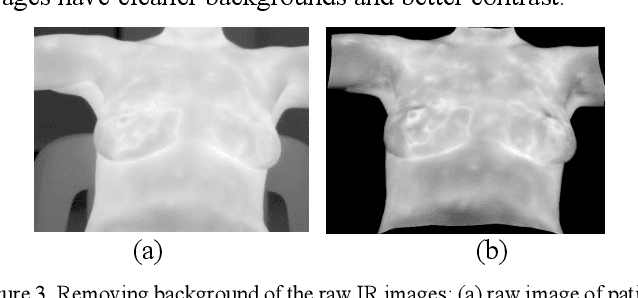

Breast cancer is the second leading cause of cancer death among women in the U.S., and early detection is key to higher survival rates. We are investigating infrared (IR) thermography as a noninvasive adjunct to mammography for breast cancer screening. IR imaging is radiation-free, pain-free, and non-contact. Automatically segmenting the breast area from the acquired full-size breast IR images helps limit the area searched for tumors and reduces the time and effort that manual segmentation requires. In previous studies, autoencoder-like convolutional and deconvolutional neural networks (C-DCNN) were applied to segment the breast area in IR images automatically. In this study, we applied a state-of-the-art deep-learning segmentation model, MultiResUnet, which consists of an encoder part to capture features and a decoder part for precise localization. We used it to segment the breast area in a set of breast IR images collected in our pilot study by imaging breast cancer patients and normal volunteers with a thermal infrared camera (N2 Imager). The database contains 450 images acquired from 14 patients and 16 volunteers. We used a thresholding method to remove interference from the raw images, remapped them from the original 16-bit depth to 8-bit, and then manually cropped and segmented the 8-bit images to produce the ground truth. Experiments using leave-one-out cross-validation (LOOCV), with predictions compared against the ground-truth images by Tanimoto similarity, show that the average accuracy of MultiResUnet is 91.47%, about 2% higher than that of the autoencoder. MultiResUnet therefore offers a better approach to segmenting breast IR images than our previous model.
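
The preprocessing and evaluation steps mentioned above can be illustrated with a minimal pure-Python sketch. It is not the authors' code: the function names `remap_16_to_8` and `tanimoto` are hypothetical, the threshold values `low` and `high` are placeholders (the abstract does not give them), thresholding is assumed here to mean clipping to a window before linear rescaling, and the Tanimoto similarity is assumed to be computed on binary masks, where it equals intersection over union.

```python
def remap_16_to_8(image, low, high):
    """Clip 16-bit pixel values to [low, high], then rescale linearly to 0..255.

    `low` and `high` are assumed thresholds; the source does not specify them.
    """
    if high <= low:
        raise ValueError("high must be greater than low")
    scale = 255.0 / (high - low)
    return [
        [round((min(max(p, low), high) - low) * scale) for p in row]
        for row in image
    ]


def tanimoto(pred, truth):
    """Tanimoto similarity of two binary masks: |A and B| / |A or B|."""
    inter = 0
    union = 0
    for row_p, row_t in zip(pred, truth):
        for p, t in zip(row_p, row_t):
            if p and t:
                inter += 1
            if p or t:
                union += 1
    # Two empty masks are treated as identical.
    return 1.0 if union == 0 else inter / union


if __name__ == "__main__":
    raw = [[0, 100], [510, 65535]]           # toy 16-bit "image"
    print(remap_16_to_8(raw, low=0, high=510))

    predicted = [[1, 1], [0, 0]]             # toy predicted mask
    ground_truth = [[1, 0], [1, 0]]          # toy manual mask
    print(tanimoto(predicted, ground_truth))
```

In a real pipeline these operations would normally run on array types from an image library rather than nested lists. Under LOOCV, the similarity score would be averaged over the held-out images to obtain a figure comparable to the reported average accuracy.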
