SeasonDepth: Cross-Season Monocular Depth Prediction Dataset and Benchmark under Multiple Environments


Nov 09, 2020

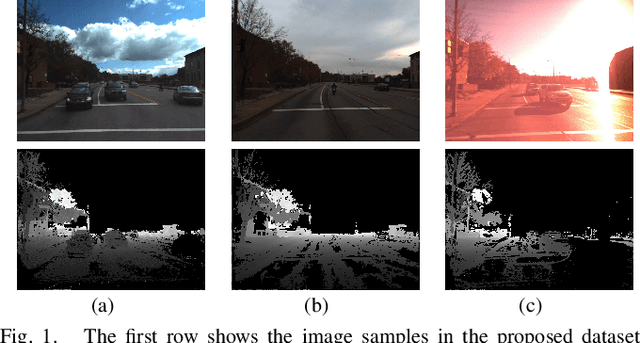

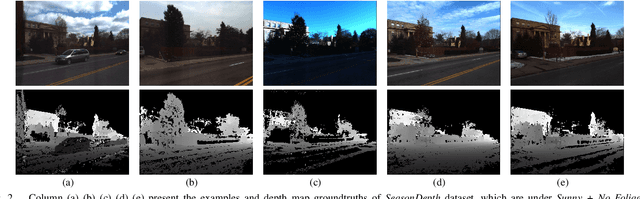

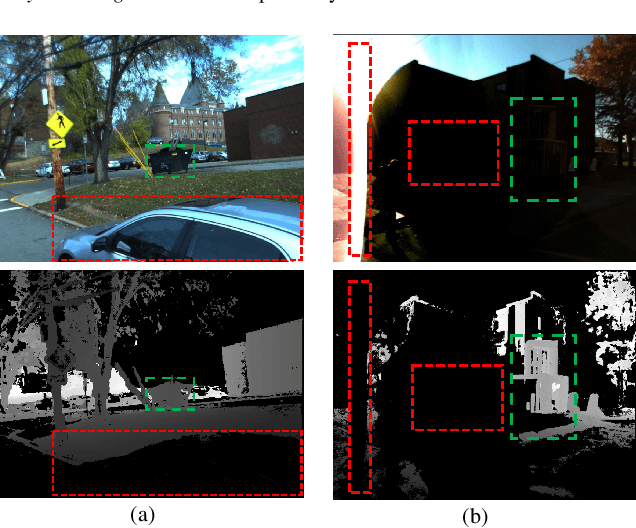

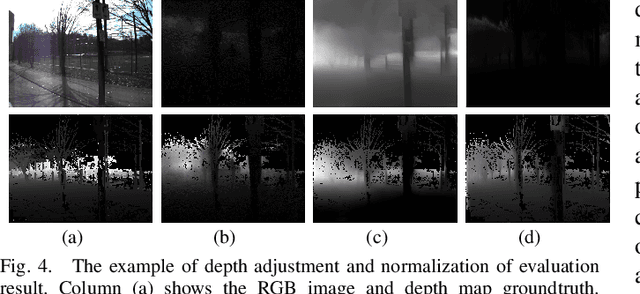

Monocular depth prediction has been well studied recently, but few works focus on depth prediction across multiple environments, e.g. changing illumination and seasons, owing to the lack of a real-world dataset and benchmark for this setting. In this work, we derive a new cross-season scaleless monocular depth prediction dataset, SeasonDepth, from the CMU Visual Localization dataset through structure from motion. We then formulate several metrics to benchmark performance under different environments using recent state-of-the-art open-source pretrained depth prediction models from the KITTI benchmark. Through extensive zero-shot experimental evaluation on the proposed dataset, we show that long-term monocular depth prediction is far from solved and point to promising directions for future work, including geometric-based or scale-invariant training. Moreover, multi-environment synthetic datasets and cross-dataset validation are beneficial to robustness against real-world environmental variance.
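Because the depth maps are scaleless, a prediction can only be compared with the ground truth after its scale has been aligned. The sketch below illustrates one common way to do this and then summarizes the error across environments. It is an assumption-laden illustration and not the benchmark's actual metric definitions: the function names `abs_rel_scale_aligned` and `summarize_across_environments` are hypothetical, median scaling is a widely used convention chosen here for simplicity, and treating non-positive ground-truth values as invalid pixels is also an assumption.

```python
import numpy as np


def abs_rel_scale_aligned(pred, gt):
    """Absolute relative error after median scaling (hypothetical helper).

    Assumption: pixels with gt <= 0 carry no ground truth and are ignored.
    """
    pred = np.asarray(pred, dtype=np.float64)
    gt = np.asarray(gt, dtype=np.float64)
    valid = gt > 0
    p, g = pred[valid], gt[valid]
    # Align the prediction's unknown scale to the ground truth's scale.
    scale = np.median(g) / np.median(p)
    return float(np.mean(np.abs(p * scale - g) / g))


def summarize_across_environments(errors_by_env):
    """Return per-environment mean error, plus the mean and standard
    deviation of those per-environment means (hypothetical helper)."""
    per_env = {env: float(np.mean(errs)) for env, errs in errors_by_env.items()}
    values = np.array(list(per_env.values()))
    return per_env, float(values.mean()), float(values.std())
```

Under these assumptions, a prediction that differs from the ground truth only by a constant factor scores an error of zero, and the standard deviation across environments gives a rough indication of how much a model's accuracy varies with conditions such as season or illumination.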
