Search Based Code Generation for Machine Learning Programs

Paper and Code

Feb 06, 2018

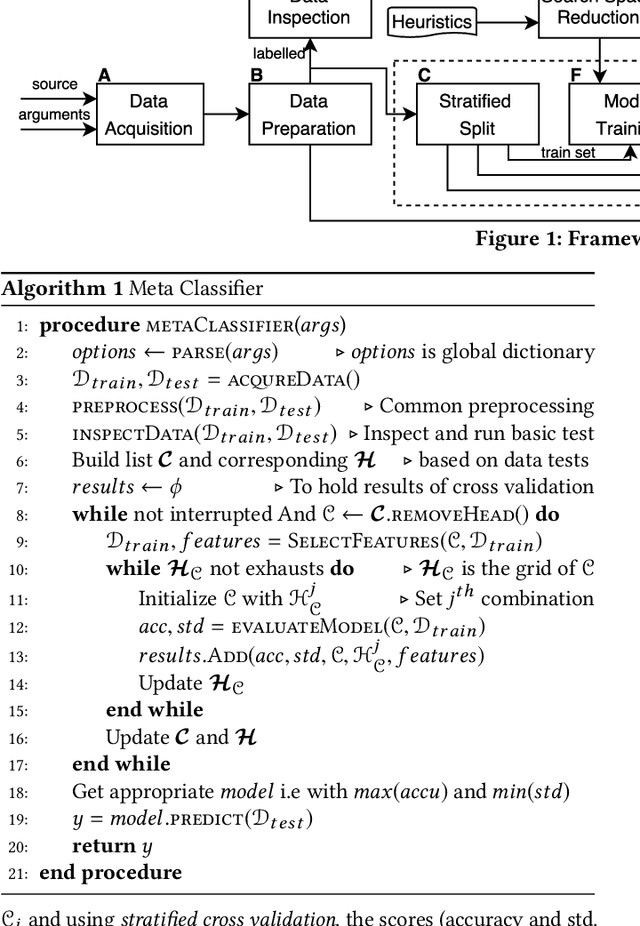

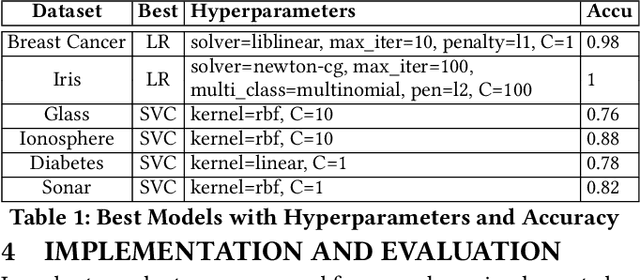

Machine Learning (ML) has revamped every domain of life as it provides powerful tools to build complex systems that learn and improve from experience and data. Our key insight is that to solve a machine learning problem, data scientists do not invent a new algorithm each time, but evaluate a range of existing models with different configurations and select the best one. This task is laborious, error-prone, and drains a large chunk of project budget and time. In this paper we present a novel framework inspired by programming by Sketching and Partial Evaluation to minimize human intervention in developing ML solutions. We templatize machine learning algorithms to expose configuration choices as holes to be searched. We share code and computation between different algorithms, and only partially evaluate configuration space of algorithms based on information gained from initial algorithm evaluations. We also employ hierarchical and heuristic based pruning to reduce the search space. Our initial findings indicate that our approach can generate highly accurate ML models. Interviews with data scientists show that they feel our framework can eliminate sources of common errors and significantly reduce development time.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge