SCSS-Net: Superpoint Constrained Semi-supervised Segmentation Network for 3D Indoor Scenes

Jul 09, 2021

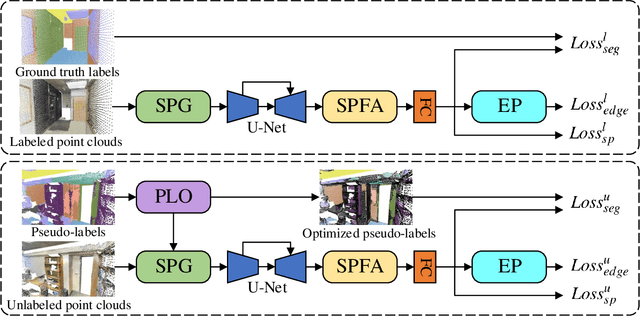

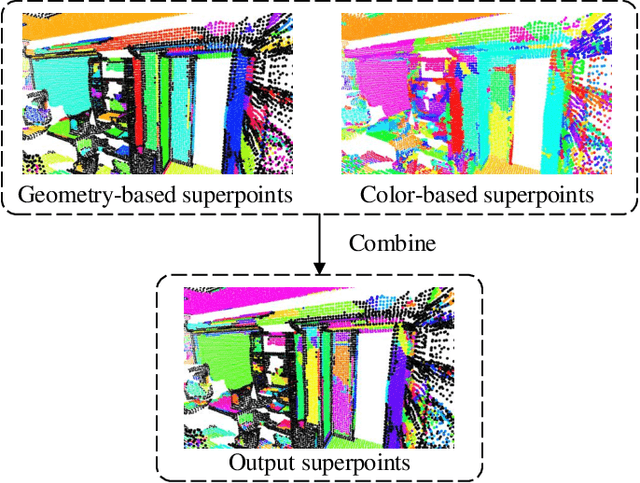

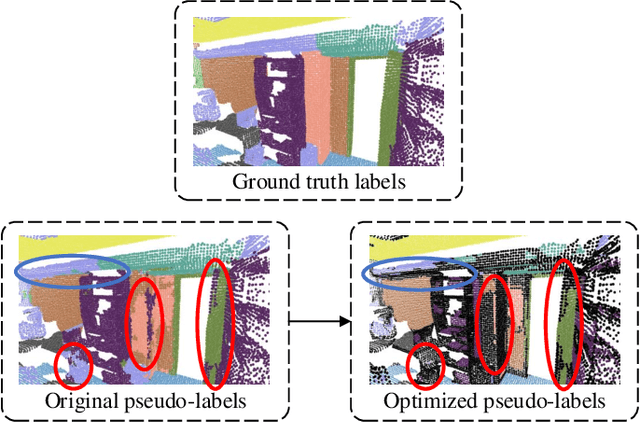

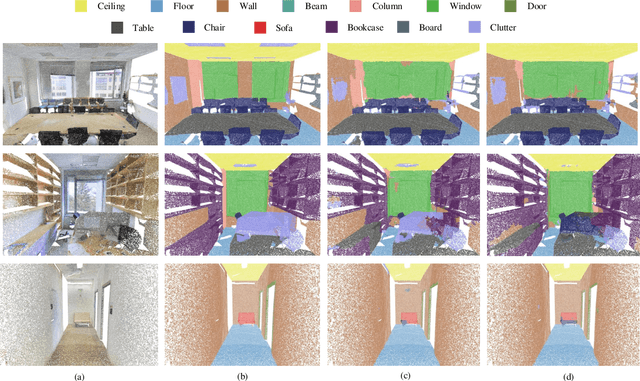

Many existing deep neural networks (DNNs) for 3D point cloud semantic segmentation require large amounts of fully labeled training data. However, manually assigning point-level labels in complex scenes is time-consuming, whereas unlabeled point clouds can be easily obtained from sensors or reconstruction. We therefore propose a superpoint constrained semi-supervised segmentation network for 3D point clouds, named SCSS-Net. Specifically, we use pseudo labels predicted on unlabeled point clouds for self-training, and we combine the superpoints produced by geometry-based and color-based region growing algorithms to modify and delete pseudo labels with low confidence. Additionally, we propose an edge prediction module to constrain the features of points that lie on geometric and color edges. A superpoint feature aggregation module and superpoint feature consistency loss functions are introduced to smooth the point features within each superpoint. Extensive experiments on two public 3D indoor datasets demonstrate that, with few labeled scenes, our method outperforms some state-of-the-art point cloud segmentation networks and some popular semi-supervised segmentation methods.
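To make the idea of superpoint constrained pseudo labels concrete, the following is a minimal sketch in plain Python. It is not the authors' implementation: the abstract does not state the exact rule, so the voting scheme, the function name `refine_pseudo_labels`, the thresholds `conf_thresh` and `agree_thresh`, and the `IGNORE` marker are all assumptions made for illustration. The sketch assumes each point already carries a predicted label, a confidence score, and a superpoint id (for example, from combining the geometry-based and color-based region growing results).

```python
from collections import Counter, defaultdict

IGNORE = -1  # hypothetical marker meaning "pseudo label deleted"


def refine_pseudo_labels(labels, confidences, superpoints,
                         conf_thresh=0.9, agree_thresh=0.7):
    """Refine per-point pseudo labels using superpoint membership.

    Assumed rule (illustrative only, not taken from the paper):
      * confident points in a superpoint vote for a label;
      * if the winning label holds at least `agree_thresh` of the votes,
        every point in the superpoint is modified to that label;
      * otherwise low-confidence points in the superpoint are deleted;
      * a superpoint with no confident point is deleted entirely.
    """
    # Group point indices by superpoint id.
    members = defaultdict(list)
    for idx, sp in enumerate(superpoints):
        members[sp].append(idx)

    refined = list(labels)
    for idxs in members.values():
        # Only confident predictions are allowed to vote.
        votes = Counter(labels[i] for i in idxs
                        if confidences[i] >= conf_thresh)
        if not votes:
            for i in idxs:
                refined[i] = IGNORE
            continue

        top_label, top_count = votes.most_common(1)[0]
        if top_count / sum(votes.values()) >= agree_thresh:
            # Strong agreement: overwrite the whole superpoint.
            for i in idxs:
                refined[i] = top_label
        else:
            # Weak agreement: keep confident labels, drop the rest.
            for i in idxs:
                if confidences[i] < conf_thresh:
                    refined[i] = IGNORE
    return refined
```

In a self-training loop, points marked `IGNORE` would simply be excluded from the loss on unlabeled scenes, while the remaining pseudo labels are treated like ground truth. The thresholds here are placeholders and would need tuning on real data.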
