Score Matched Conditional Exponential Families for Likelihood-Free Inference

Jan 15, 2021

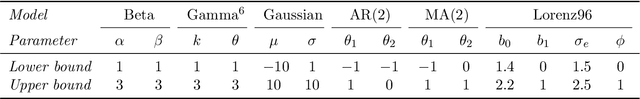

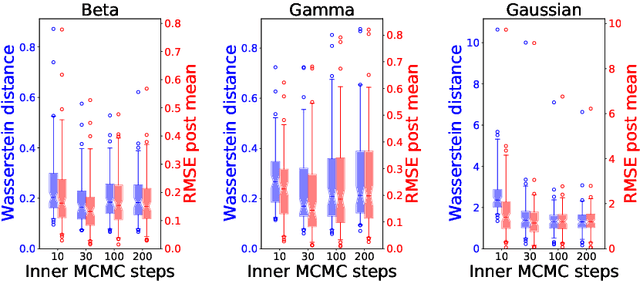

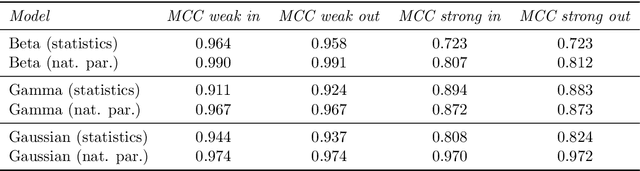

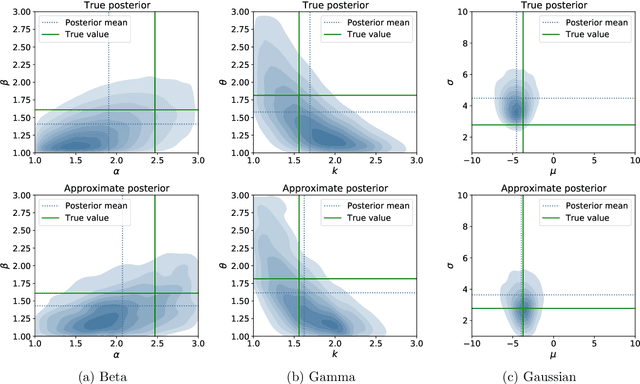

To perform Bayesian inference for stochastic simulator models whose likelihood is not accessible, Likelihood-Free Inference (LFI) relies on simulations from the model. Standard LFI methods can be split according to how these simulations are used: to build an explicit Surrogate Likelihood, or to accept or reject parameter values according to a measure of distance from the observations, as in Approximate Bayesian Computation (ABC). In both cases, simulations are adaptively tailored to the value of the observation. Here, we generate parameter-simulation pairs from the model independently of the observation, and use them to learn a conditional exponential family likelihood approximation; to parametrize it, we use Neural Networks whose weights are tuned with Score Matching. With our likelihood approximation, we can employ MCMC for doubly intractable distributions to draw samples from the posterior for any number of observations without additional model simulations, with performance competitive with comparable approaches. Further, the sufficient statistics of the exponential family can be used as summaries in ABC, outperforming the state-of-the-art method in five different models with known likelihood. Finally, we apply our method to a challenging model from meteorology.
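
To make the core idea concrete, here is a minimal toy sketch of fitting a conditional exponential family with score matching. It is not the paper's implementation: it assumes a one-dimensional Gaussian simulator, hand-picked sufficient statistics `(x, x**2)`, and natural parameters that are linear in the parameter value, in place of the neural networks the abstract describes. All names (`simulate_pairs`, `fit_score_matching`, `a`, `b`) are hypothetical. The point it illustrates is that the score-matching objective only needs derivatives of the log-density with respect to the data, so the normalizing constant never has to be computed.

```python
import numpy as np


def simulate_pairs(n, rng):
    """Draw parameter-simulation pairs independently of any observation.

    Toy assumption: theta ~ Uniform(-2, 2) and x | theta ~ Normal(theta, 1).
    """
    theta = rng.uniform(-2.0, 2.0, size=n)
    x = theta + rng.standard_normal(n)
    return theta, x


def fit_score_matching(theta, x):
    """Fit log p(x | theta) = a*theta*x + b*x**2 - log Z(theta) by score matching.

    The data-score is  d/dx log p = a*theta + 2*b*x  and its derivative is 2*b,
    neither of which involves Z(theta). The empirical objective
        J(a, b) = mean(0.5 * (a*theta + 2*b*x)**2 + 2*b)
    is quadratic in (a, b), so its minimizer solves a 2x2 linear system
    obtained by setting both partial derivatives to zero.
    """
    m_tt = np.mean(theta * theta)
    m_tx = np.mean(theta * x)
    m_xx = np.mean(x * x)
    lhs = np.array([[m_tt, 2.0 * m_tx],
                    [2.0 * m_tx, 4.0 * m_xx]])
    rhs = np.array([0.0, -2.0])
    a, b = np.linalg.solve(lhs, rhs)
    return a, b


rng = np.random.default_rng(0)
theta, x = simulate_pairs(20_000, rng)
a_hat, b_hat = fit_score_matching(theta, x)
# For this toy simulator the true natural parameters are theta and -1/2,
# so a_hat should be close to 1 and b_hat close to -0.5.
print(a_hat, b_hat)
```

In this toy case the fitted unnormalized density `exp(a_hat*theta*x + b_hat*x**2)` could then be plugged into an MCMC sampler for any observed `x` without further simulations; in the setting the abstract describes, the linear maps would be replaced by neural networks and the closed-form solve by gradient-based training.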
