Scheduling Inference Workloads on Distributed Edge Clusters with Reinforcement Learning

Paper and Code

Jan 31, 2023

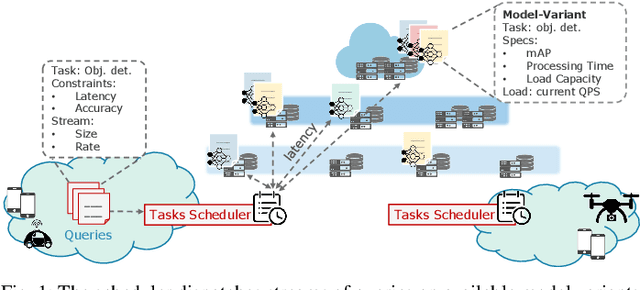

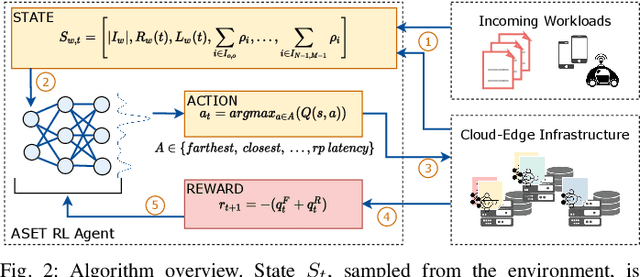

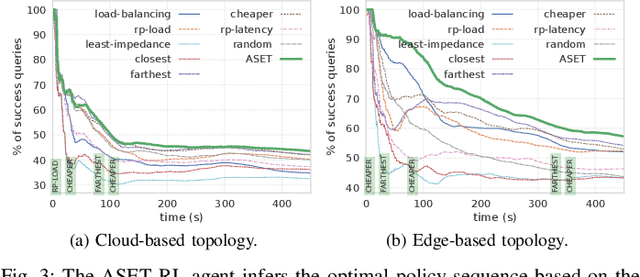

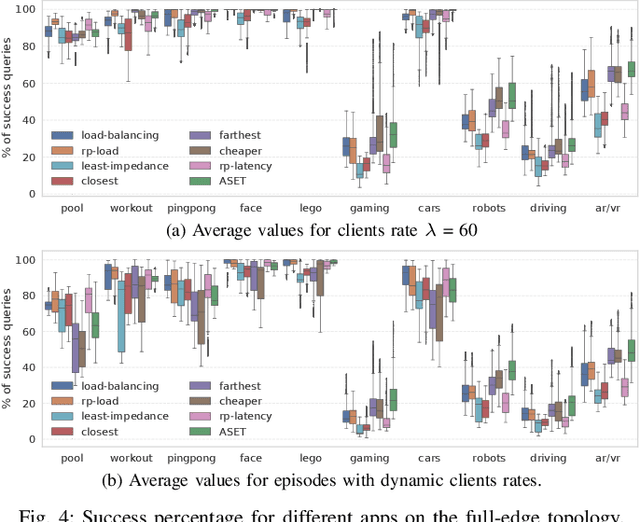

Many real-time applications (e.g., Augmented/Virtual Reality, cognitive assistance) rely on Deep Neural Networks (DNNs) to process inference tasks. Edge computing is considered a key infrastructure for deploying such applications, as moving computation close to the data sources makes it possible to meet stringent latency and throughput requirements. However, the constrained nature of edge networks poses several additional challenges for the management of inference workloads: edge clusters cannot provide unlimited processing power to DNN models, and a trade-off between network and processing time must often be considered to meet end-to-end delay requirements. In this paper, we focus on the problem of scheduling inference queries on DNN models in edge networks at short timescales (i.e., a few milliseconds). By means of simulations, we analyze several policies under the realistic network settings and workloads of a large ISP, highlighting the need for a dynamic scheduling policy that can adapt to network conditions and workloads. We therefore design ASET, a Reinforcement Learning-based scheduling algorithm able to adapt its decisions according to the system conditions. Our results show that ASET achieves the best performance compared with static policies when scheduling over a distributed pool of edge resources.
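To make the trade-off between network and processing time concrete, the following Python sketch may help. It is not ASET and is not taken from the paper: it swaps in a simple epsilon-greedy bandit that learns which of two static policies meets a delay deadline more often. All names (`Node`, `closest`, `least_loaded`, `PolicySelector`, `run`), the 50 ms deadline, the binary reward, and the delay model (network time plus queueing and service time) are assumptions made purely for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class Node:
    """Hypothetical edge node; all fields are illustrative assumptions."""
    name: str
    network_ms: float  # network delay to reach the node
    service_ms: float  # time to run one inference on the node
    queued: int = 0    # queries already waiting on the node

    def delay_ms(self) -> float:
        # Assumed model: end-to-end delay = network + waiting + processing.
        return self.network_ms + (self.queued + 1) * self.service_ms


def closest(nodes):
    """Static policy: minimize network delay only."""
    return min(nodes, key=lambda n: n.network_ms)


def least_loaded(nodes):
    """Static policy: minimize queue length only."""
    return min(nodes, key=lambda n: n.queued)


POLICIES = {"closest": closest, "least_loaded": least_loaded}


class PolicySelector:
    """Epsilon-greedy bandit over static policies (a stand-in, not ASET)."""

    def __init__(self, names, epsilon=0.1, seed=0):
        self.names = list(names)
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {n: 0 for n in self.names}
        self.mean_reward = {n: 0.0 for n in self.names}

    def choose(self):
        # Try every policy once before exploiting.
        untried = [n for n in self.names if self.counts[n] == 0]
        if untried:
            return untried[0]
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.names)
        return self.best()

    def update(self, name, reward):
        # Incremental running mean of the observed rewards.
        self.counts[name] += 1
        self.mean_reward[name] += (reward - self.mean_reward[name]) / self.counts[name]

    def best(self):
        return max(self.names, key=lambda n: self.mean_reward[n])


def run(nodes, steps=200, deadline_ms=50.0, seed=0):
    """Learn which static policy meets the deadline on a fixed snapshot."""
    selector = PolicySelector(POLICIES, seed=seed)
    for _ in range(steps):
        name = selector.choose()
        node = POLICIES[name](nodes)
        reward = 1.0 if node.delay_ms() <= deadline_ms else 0.0
        selector.update(name, reward)
    return selector


if __name__ == "__main__":
    # Nearby node is congested, so the farther idle node is the better choice.
    congested = [Node("near", 5.0, 10.0, queued=8), Node("far", 30.0, 10.0)]
    # Farther node is idle but too distant, so the nearby node is better.
    distant = [Node("near", 5.0, 10.0, queued=2), Node("far", 45.0, 10.0)]
    print(run(congested).best(), run(distant).best())
```

In the two snapshots, a different static policy meets the deadline each time, which is the kind of situation that motivates a scheduler able to adapt to network conditions and load. A real system would also need to model changing queues and arrival rates, which this sketch deliberately leaves out.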
