Scatterbrained: A flexible and expandable pattern for decentralized machine learning

Dec 14, 2021

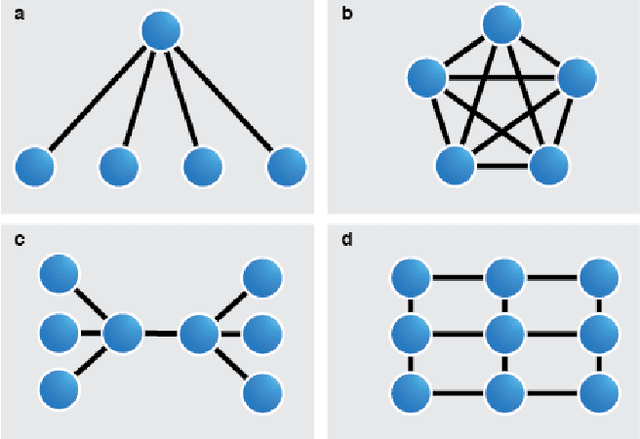

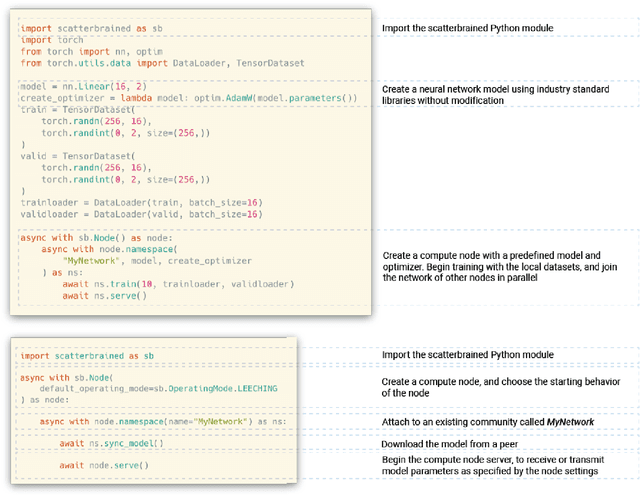

Federated machine learning is a technique for training a model across multiple devices without exchanging data between them. Because data remains local to each compute node, federated learning is well suited to use cases in fields where data is carefully controlled, such as medicine, or in domains with bandwidth constraints. One weakness of this approach is that most federated learning tools rely on a central server to delegate workloads and to produce a single shared model. Here, we suggest a flexible framework for decentralizing the federated learning pattern and provide an open-source reference implementation compatible with PyTorch.
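To make the contrast with a central server concrete, the following is a minimal sketch of one common decentralized pattern: each node takes a gradient step on its own private data, then averages its parameter with its direct neighbors only. It is not the Scatterbrained API. The function names (`local_step`, `average_with_neighbors`, `train`), the ring topology, the scalar "model", and the toy data are all assumptions chosen for illustration, and the sketch uses plain Python rather than PyTorch to stay self-contained.

```python
from typing import Dict, List

# Hypothetical toy setup: each node's "model" is a single float that it fits
# to the mean of its own private data. Raw data never leaves the node.


def local_step(w: float, data: List[float], lr: float) -> float:
    """One gradient step on the local loss 0.5 * mean((w - x)^2)."""
    grad = sum(w - x for x in data) / len(data)
    return w - lr * grad


def average_with_neighbors(
    weights: Dict[str, float], neighbors: Dict[str, List[str]]
) -> Dict[str, float]:
    """Each node replaces its parameter with the mean over itself and its peers.

    Only parameters are exchanged, and only between directly connected nodes;
    no node plays the role of a central aggregation server.
    """
    mixed = {}
    for node, w in weights.items():
        group = [w] + [weights[peer] for peer in neighbors[node]]
        mixed[node] = sum(group) / len(group)
    return mixed


def train(
    local_data: Dict[str, List[float]],
    neighbors: Dict[str, List[str]],
    rounds: int = 50,
    lr: float = 0.5,
) -> Dict[str, float]:
    """Alternate local training and neighbor averaging for a fixed number of rounds."""
    weights = {node: 0.0 for node in local_data}
    for _ in range(rounds):
        weights = {n: local_step(w, local_data[n], lr) for n, w in weights.items()}
        weights = average_with_neighbors(weights, neighbors)
    return weights


# Assumed toy data: four nodes with very different local distributions.
local_data = {
    "a": [1.0, 2.0, 3.0],
    "b": [10.0, 12.0],
    "c": [20.0, 22.0],
    "d": [30.0],
}
# Assumed ring topology: every node talks to exactly two peers.
neighbors = {
    "a": ["d", "b"],
    "b": ["a", "c"],
    "c": ["b", "d"],
    "d": ["c", "a"],
}

final = train(local_data, neighbors)
for node, w in sorted(final.items()):
    print(node, round(w, 3))
```

With a constant step size the nodes in this sketch do not reach exact agreement, but their parameters end up much closer together than their purely local optima, and their average settles at the average of those local optima. A real deployment would exchange full model tensors, for example PyTorch state dictionaries, rather than a single float.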

* Code and documentation are available at https://github.com/JHUAPL/scatterbrained
