Scaling Inference for Markov Logic with a Task-Decomposition Approach

Paper and Code

Mar 12, 2012

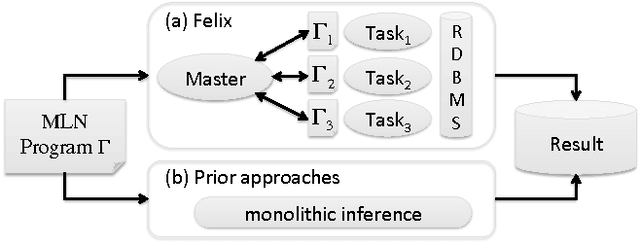

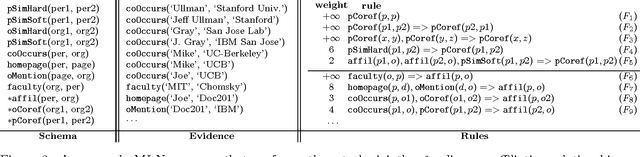

Motivated by applications in large-scale knowledge base construction, we study the problem of scaling up Markov Logic Networks (MLNs), a sophisticated statistical inference framework. Our approach, Felix, uses Lagrangian relaxation, a technique from mathematical programming, to decompose a program into smaller tasks while preserving the joint-inference property of the original MLN. The advantage is that each task can be handled by a highly scalable specialized algorithm, for example for common tasks such as classification and coreference. We propose an architecture that supports Lagrangian relaxation in an RDBMS, and we show that it enables scalable joint inference for MLNs. We empirically validate that Felix is significantly more scalable and efficient than prior approaches to MLN inference by constructing a knowledge base from 1.8M documents as part of the TAC challenge. Felix scales to this corpus and achieves state-of-the-art quality. In contrast, prior approaches do not scale even to a subset of the corpus that is three orders of magnitude smaller.
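The decomposition idea can be illustrated with a toy dual-decomposition loop. This is a minimal sketch under stated assumptions, not Felix's implementation: the function names (`solve_subtask`, `dual_decomposition`), the scores, and the step size are all hypothetical, and the "specialized algorithm" for each task is replaced by a simple threshold rule. Two tasks each hold their own copy of a set of shared binary variables, and Lagrange multipliers are adjusted by subgradient steps until the copies agree.

```python
def solve_subtask(scores, penalty):
    """Independently solve one task: set x_i = 1 when its adjusted gain is positive.

    scores[i] is the (hypothetical) gain this task assigns to x_i = 1;
    x_i = 0 always has gain 0. penalty[i] is the Lagrangian adjustment.
    """
    return [1 if s + p > 0 else 0 for s, p in zip(scores, penalty)]


def dual_decomposition(scores_a, scores_b, step=0.5, max_iters=100):
    """Maximize sum_i (a_i + b_i) * x_i by relaxing the constraint xa == xb."""
    lam = [0.0] * len(scores_a)  # one multiplier per shared variable
    for iteration in range(max_iters):
        # Each task is solved on its own, seeing only its scores and the multipliers.
        xa = solve_subtask(scores_a, lam)
        xb = solve_subtask(scores_b, [-l for l in lam])
        if xa == xb:
            # Agreement means the relaxed solution is feasible for the joint problem.
            return xa, iteration
        # Subgradient step on the dual: push the two copies toward agreement.
        lam = [l - step * (u - v) for l, u, v in zip(lam, xa, xb)]
    return None, max_iters  # no agreement within the iteration budget


if __name__ == "__main__":
    task_a = [2.0, -1.0, 0.5]   # assumed scores from task A
    task_b = [-1.0, -0.5, 1.0]  # assumed scores from task B
    assignment, iterations = dual_decomposition(task_a, task_b)
    print(assignment, iterations)
```

In this toy setting the tasks initially disagree on the first variable, and the multiplier for that variable shifts until both copies match. The point of the sketch is only that each subproblem is solved separately while the multipliers carry the information needed for a joint answer.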
