Scaffolding Reflection in Reinforcement Learning Framework for Confinement Escape Problem


Nov 13, 2020

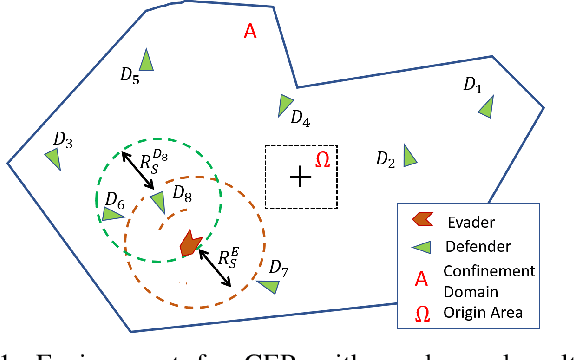

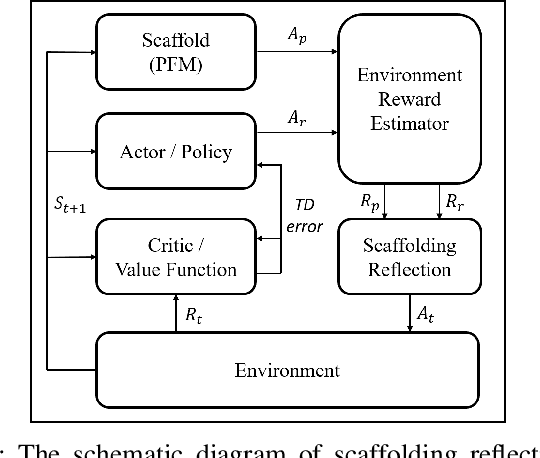

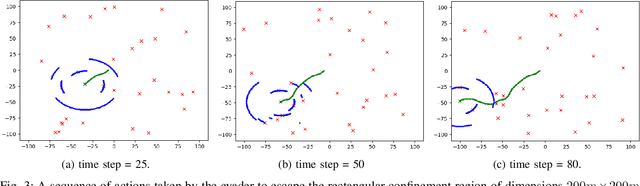

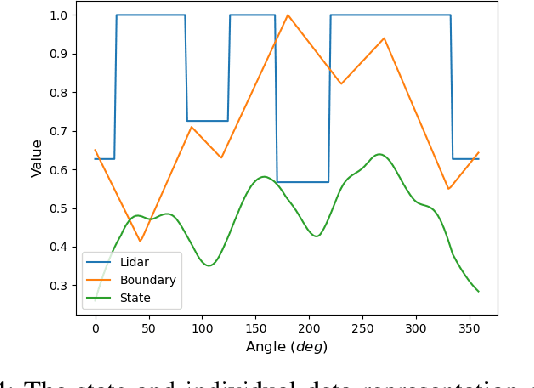

This paper formulates an application of reinforcement learning for an evader in a confinement escape problem. The evader's objective is to escape a confinement region patrolled by multiple defenders while using minimum energy. Meanwhile, the defenders aim to reach and capture the evader without any communication among them. The problem is formulated using the actor-critic approach for the evader. In this paper, the novel Scaffolding Reflection in Reinforcement Learning (SR2L) framework is proposed, which uses a potential field method as a scaffold to assist the actor's action-reflection. Guided by the user's clearly articulated intent, the action-reflection enables the actor to learn by observing probable actions and their values based on experience. Extensive Monte-Carlo simulations compare the performance of a trained SR2L agent against the baseline approach. The SR2L framework requires at least an order of magnitude fewer episodes to learn the policy than the conventional RL framework.
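The abstract does not specify the exact form of the potential field scaffold. As a rough illustration only, here is a textbook artificial potential field that produces a suggested evader action: a linear attractive term pulls the evader toward an escape target, and a Khatib-style repulsive term pushes it away from any defender inside an influence radius. The function name `potential_field_action`, the gains `k_att` and `k_rep`, the `influence` radius, the `max_speed` clipping, and the use of a single `goal` point as the escape target are all hypothetical choices. This is not the paper's formulation.

```python
import numpy as np


def potential_field_action(evader, goal, defenders,
                           k_att=1.0, k_rep=1.0, influence=2.0, max_speed=1.0):
    """Hypothetical scaffold: suggest a velocity for the evader from a potential field.

    All parameters (gains, influence radius, speed limit) are illustrative
    assumptions, not values from the SR2L paper.
    """
    evader = np.asarray(evader, dtype=float)
    goal = np.asarray(goal, dtype=float)

    # Attractive term: negative gradient of 0.5 * k_att * ||goal - evader||^2.
    force = k_att * (goal - evader)

    for d in defenders:
        diff = evader - np.asarray(d, dtype=float)
        dist = np.linalg.norm(diff)
        # Repulsive term (Khatib-style), active only within the influence radius:
        # negative gradient of 0.5 * k_rep * (1/dist - 1/influence)^2.
        if 0.0 < dist < influence:
            force += k_rep * (1.0 / dist - 1.0 / influence) * diff / dist**3

    # Clip the suggested action to the evader's (assumed) maximum speed.
    norm = np.linalg.norm(force)
    if norm > max_speed:
        force = force / norm * max_speed
    return force


if __name__ == "__main__":
    # Defender far away: the suggestion points straight at the goal.
    print(potential_field_action([0, 0], [5, 0], [[0, 10]]))
    # Defender blocking the path: repulsion dominates and pushes the evader back.
    print(potential_field_action([0, 0], [1, 0], [[0.5, 0]]))
```

In a scaffolded setup, an action like this would act only as a hint that the learning agent can compare against its own proposal, rather than replacing the learned actor.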
