Saving Dense Retriever from Shortcut Dependency in Conversational Search


Feb 15, 2022

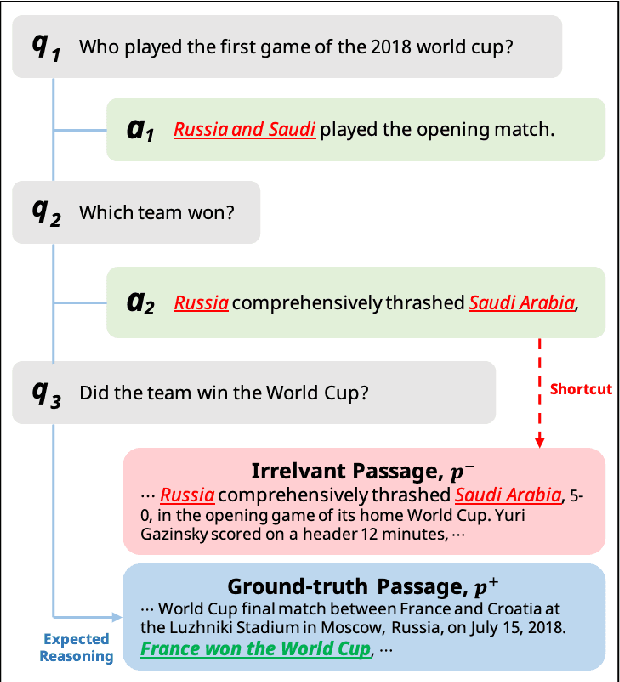

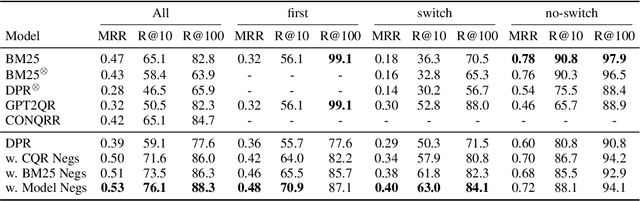

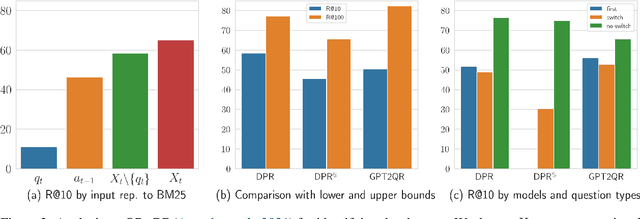

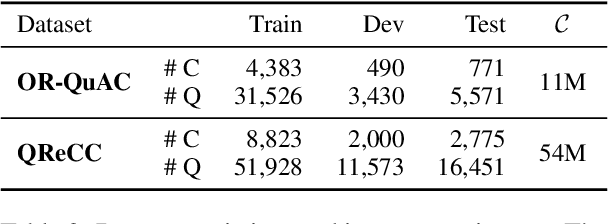

In conversational search (CS), retrieving relevant passages requires a holistic understanding of the conversational input. In this paper, we demonstrate the existence of a retrieval shortcut in CS, which causes models to retrieve passages by relying solely on partial history while disregarding the latest question. Through in-depth analysis, we first show that naively trained dense retrievers heavily exploit the shortcut and hence perform poorly on history-independent questions. To prevent models from relying solely on the shortcut, we explore iterative hard negatives mined by pre-trained dense retrievers. Experimental results show that training with the iterative hard negatives effectively mitigates the dependency on the shortcut and yields substantial improvements on recent CS benchmarks. Our retrievers achieve new state-of-the-art results, outperforming the previous best models by 9.7 in Recall@10 on QReCC and 12.4 in Recall@5 on TopiOCQA. Furthermore, in our end-to-end QA experiments, FiD readers combined with our retrievers surpass the previous state-of-the-art models by 3.7 and 1.0 EM on QReCC and TopiOCQA, respectively.
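The hard-negative mining step mentioned in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `mine_hard_negatives`, the dot-product scoring, and the toy two-dimensional embeddings are all assumptions made for illustration. The idea shown is only that a retriever scores every passage for a query, and the highest-ranked passages that are not gold answers are kept as negatives for the next training round. In an iterative setup, this step would presumably be repeated with the retriever from the previous round.

```python
from typing import Dict, List, Sequence, Set


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    """Inner product, used here as the (assumed) retrieval score."""
    return sum(a * b for a, b in zip(u, v))


def mine_hard_negatives(
    query_vec: Sequence[float],
    passage_vecs: Dict[str, Sequence[float]],
    gold_ids: Set[str],
    k: int = 2,
) -> List[str]:
    """Return the k highest-scoring passage ids that are NOT gold passages.

    Hypothetical helper: the embeddings would come from whichever
    retriever is used for mining in the current round.
    """
    ranked = sorted(
        passage_vecs,
        key=lambda pid: dot(query_vec, passage_vecs[pid]),
        reverse=True,
    )
    return [pid for pid in ranked if pid not in gold_ids][:k]


# Toy data (made up): p1 is the gold passage, p2 and p4 look similar to it.
query = [1.0, 0.0]
passages = {
    "p1": [0.9, 0.1],
    "p2": [0.8, 0.2],
    "p3": [0.1, 0.9],
    "p4": [0.5, 0.5],
}
negatives = mine_hard_negatives(query, passages, gold_ids={"p1"}, k=2)
print(negatives)
```

Negatives chosen this way are passages the current retriever already confuses with the answer, which is what makes them harder than randomly sampled ones.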
