Sanity Simulations for Saliency Methods


May 13, 2021

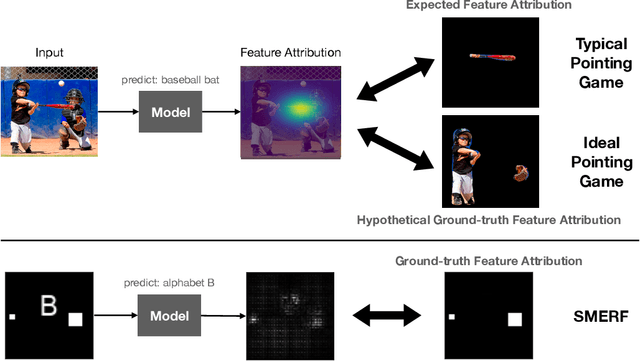

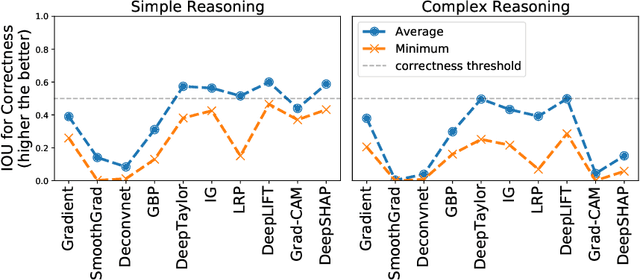

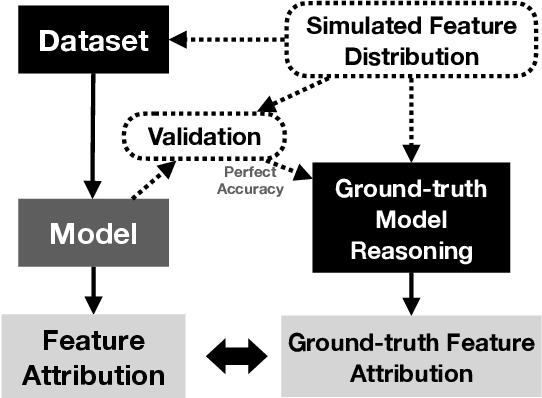

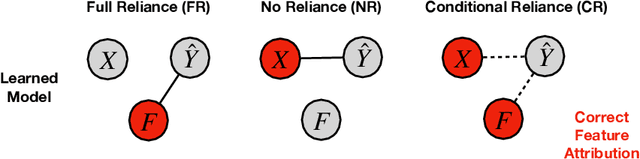

Saliency methods are a popular class of feature attribution tools that aim to capture a model's predictive reasoning by identifying "important" pixels in an input image. However, the development and adoption of saliency methods are currently hindered by the lack of access to underlying model reasoning, which prevents accurate method evaluation. In this work, we design a synthetic evaluation framework, SMERF, that allows us to perform ground-truth-based evaluation of saliency methods while controlling the underlying complexity of model reasoning. Experimental evaluations via SMERF reveal significant limitations in existing saliency methods, especially given the relative simplicity of SMERF's synthetic evaluation tasks. Moreover, the SMERF benchmarking suite represents a useful tool in the development of new saliency methods to potentially overcome these limitations.
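To make the idea of ground-truth-based evaluation concrete, here is a minimal sketch of how a saliency map could be scored against a known region in a synthetic image. It is not SMERF's actual implementation: the function name `ground_truth_iou`, the 8x8 image size, the toy saliency maps, and the choice of intersection-over-union on the top-k pixels are all assumptions made for illustration.

```python
import numpy as np


def ground_truth_iou(saliency, gt_mask):
    """Hypothetical metric: IoU between the k most salient pixels and the
    ground-truth mask, where k is the number of pixels in the mask."""
    gt = gt_mask.astype(bool)
    k = int(gt.sum())
    if k == 0:
        raise ValueError("ground-truth mask is empty")

    # Select the k highest-valued pixels of the saliency map.
    flat = saliency.ravel()
    top_idx = np.argpartition(flat, -k)[-k:]
    pred = np.zeros(flat.shape, dtype=bool)
    pred[top_idx] = True
    pred = pred.reshape(gt.shape)

    intersection = np.logical_and(pred, gt).sum()
    union = np.logical_or(pred, gt).sum()
    return float(intersection / union)


# Synthetic setting (assumed): the model is known to rely only on a 2x2 patch.
gt_mask = np.zeros((8, 8), dtype=bool)
gt_mask[2:4, 2:4] = True

# A saliency map that highlights exactly the ground-truth patch.
perfect = gt_mask.astype(float)

# A saliency map shifted one column to the right, overlapping half the patch.
shifted = np.zeros((8, 8))
shifted[2:4, 3:5] = 1.0

print(ground_truth_iou(perfect, gt_mask))
print(ground_truth_iou(shifted, gt_mask))
```

Because the important region is fixed by construction, a score like this measures how well a saliency method recovers the model's actual reasoning instead of relying on visual plausibility alone.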
