Sample-Efficient Learning of Mixtures

Paper and Code

Jun 03, 2018

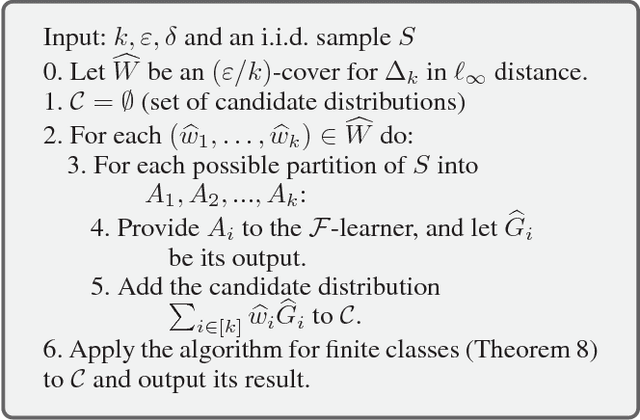

We consider PAC learning of probability distributions (a.k.a. density estimation), where we are given an i.i.d. sample generated from an unknown target distribution, and want to output a distribution that is close to the target in total variation distance. Let $\mathcal F$ be an arbitrary class of probability distributions, and let $\mathcal{F}^k$ denote the class of $k$-mixtures of elements of $\mathcal F$. Assuming the existence of a method for learning $\mathcal F$ with sample complexity $m_{\mathcal{F}}(\epsilon)$, we provide a method for learning $\mathcal F^k$ with sample complexity $O({k\log k \cdot m_{\mathcal F}(\epsilon) }/{\epsilon^{2}})$. Our mixture learning algorithm has the property that, if the $\mathcal F$-learner is proper/agnostic, then the $\mathcal F^k$-learner would be proper/agnostic as well. This general result enables us to improve the best known sample complexity upper bounds for a variety of important mixture classes. First, we show that the class of mixtures of $k$ axis-aligned Gaussians in $\mathbb{R}^d$ is PAC-learnable in the agnostic setting with $\widetilde{O}({kd}/{\epsilon ^ 4})$ samples, which is tight in $k$ and $d$ up to logarithmic factors. Second, we show that the class of mixtures of $k$ Gaussians in $\mathbb{R}^d$ is PAC-learnable in the agnostic setting with sample complexity $\widetilde{O}({kd^2}/{\epsilon ^ 4})$, which improves the previous known bounds of $\widetilde{O}({k^3d^2}/{\epsilon ^ 4})$ and $\widetilde{O}(k^4d^4/\epsilon ^ 2)$ in its dependence on $k$ and $d$. Finally, we show that the class of mixtures of $k$ log-concave distributions over $\mathbb{R}^d$ is PAC-learnable using $\widetilde{O}(d^{(d+5)/2}\epsilon^{-(d+9)/2}k)$ samples.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge