Safety Analysis in the Era of Large Language Models: A Case Study of STPA using ChatGPT


Apr 03, 2023

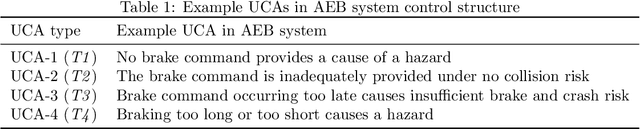

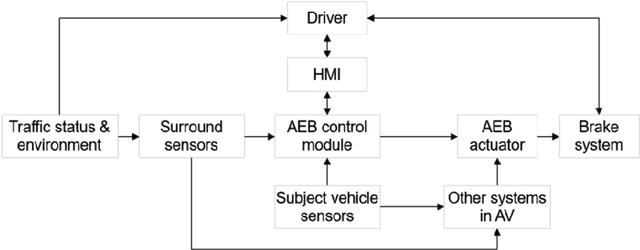

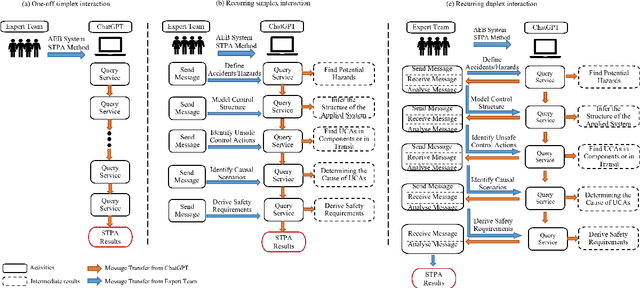

Large Language Models (LLMs), such as ChatGPT and BERT, are leading a new AI heatwave due to their human-like conversations with detailed and articulate answers across many domains of knowledge. While LLMs are quickly being applied to many AI application domains, we are interested in the following question: Can safety analysis for safety-critical systems make use of LLMs? To answer it, we conduct a case study of Systems Theoretic Process Analysis (STPA) on Automatic Emergency Brake (AEB) systems using ChatGPT. STPA, one of the most prevalent techniques for hazard analysis, is known to have limitations such as high complexity and subjectivity, and this paper explores whether ChatGPT can help address them. Specifically, three ways of incorporating ChatGPT into STPA are investigated by considering its interaction with human experts: one-off simplex interaction, recurring simplex interaction, and recurring duplex interaction. Comparative results reveal that: (i) using ChatGPT without human experts' intervention can be inadequate due to the reliability and accuracy issues of LLMs; (ii) more interactions between ChatGPT and human experts may yield better results; and (iii) using ChatGPT in STPA with extra care can outperform human safety experts alone, as demonstrated by reusing an existing comparison method with baselines. In addition to making the first attempt to apply LLMs in safety analysis, this paper also identifies key challenges (e.g., trustworthiness concerns about LLMs and the need for standardisation) for future research in this direction.
