Safe Learning for Near Optimal Scheduling

Paper and Code

May 19, 2020

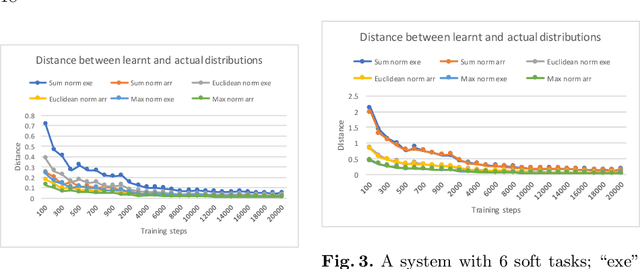

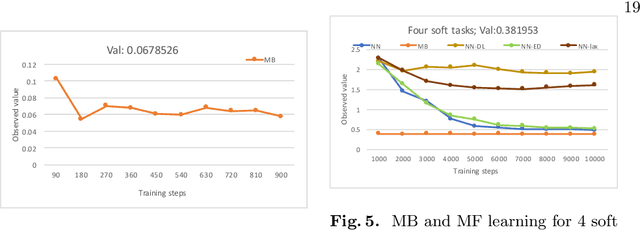

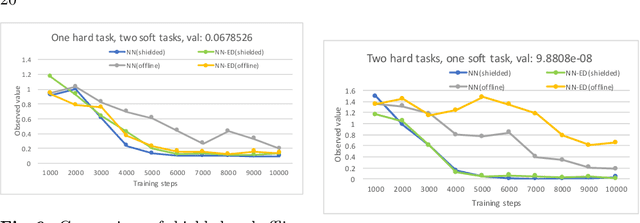

In this paper, we investigate the combination of synthesis techniques and learning techniques to obtain safe and near optimal schedulers for a preemptible task scheduling problem. We study both model-based learning techniques with PAC guarantees and model-free learning techniques based on shielded deep Q-learning. The new learning algorithms have been implemented to conduct experimental evaluations.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge