S4RL: Surprisingly Simple Self-Supervision for Offline Reinforcement Learning

Paper and Code

Mar 10, 2021

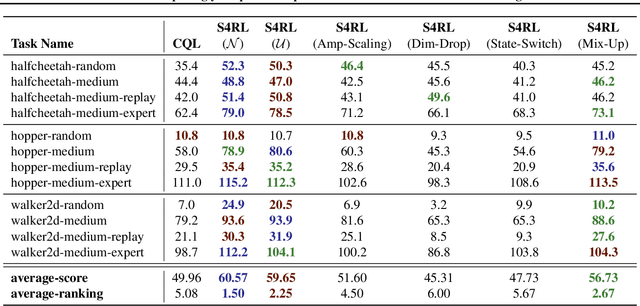

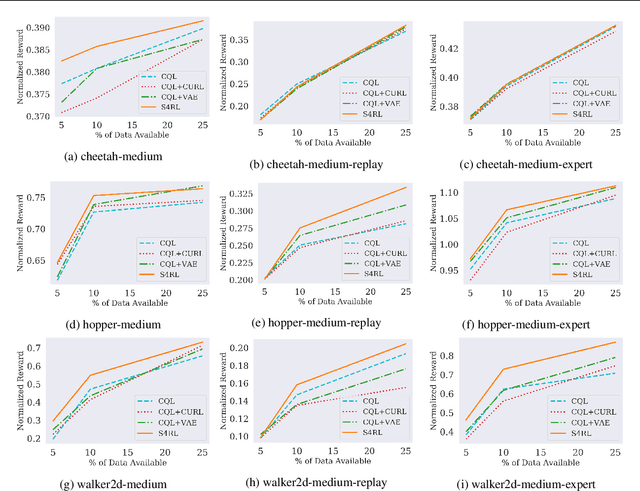

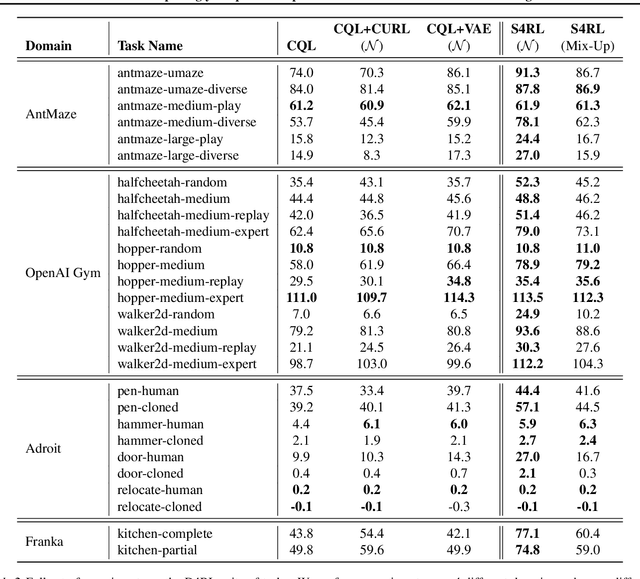

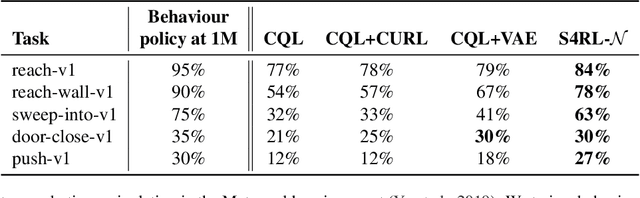

Offline reinforcement learning aims to learn policies from large, previously collected datasets without further interaction with the environment. These algorithms have made it possible to learn useful skills from data that can then be transferred to the environment, making it feasible to deploy trained policies in real-world settings where interaction may be costly or dangerous, such as self-driving. However, current algorithms overfit to the dataset they are trained on and generalize poorly to out-of-distribution (OOD) states when deployed in the environment. We propose a Surprisingly Simple Self-Supervision algorithm (S4RL), which applies data augmentations to states in order to learn value functions that generalize and extrapolate better at deployment. We investigate which data augmentation techniques help learn a value function that can extrapolate to OOD data, and how to combine data augmentations with offline RL algorithms to learn a policy. We show experimentally that S4RL, despite being simple and easy to implement, significantly improves on the state of the art on most offline reinforcement learning tasks in the popular D4RL benchmark datasets.
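To make the idea of state augmentation for value learning concrete, here is a minimal sketch in Python with NumPy. It is not the authors' implementation: the abstract does not say which augmentations are used or how they enter the loss, so this sketch assumes zero-mean Gaussian noise on states and a temporal-difference loss in which both the Q-value and the bootstrap target are averaged over the augmented copies. The names `augment_states`, `averaged_td_loss`, `q_fn`, `target_q_fn`, and `policy_fn`, and the values of `num_aug`, `sigma`, and `gamma`, are all hypothetical.

```python
import numpy as np


def augment_states(states, num_aug=4, sigma=1e-3, rng=None):
    """Return num_aug noisy copies of a batch of states.

    Assumption: additive zero-mean Gaussian noise; sigma is a hypothetical value.
    Output shape is (num_aug, batch, state_dim).
    """
    rng = np.random.default_rng() if rng is None else rng
    noise = rng.normal(0.0, sigma, size=(num_aug,) + states.shape)
    return states[None, ...] + noise


def averaged_td_loss(q_fn, target_q_fn, policy_fn, batch,
                     gamma=0.99, num_aug=4, sigma=1e-3, rng=None):
    """Mean squared TD error with Q-values averaged over augmented states.

    q_fn(states, actions) and target_q_fn(states, actions) return shape (batch,);
    policy_fn(states) returns actions. All three are caller-supplied placeholders.
    """
    s, a, r, s_next, done = batch
    s_aug = augment_states(s, num_aug, sigma, rng)
    s_next_aug = augment_states(s_next, num_aug, sigma, rng)

    # Average the current Q estimate over the augmented copies of each state.
    q = np.mean([q_fn(s_i, a) for s_i in s_aug], axis=0)

    # Average the bootstrap value over the augmented copies of each next state.
    next_q = np.mean(
        [target_q_fn(sn_i, policy_fn(sn_i)) for sn_i in s_next_aug], axis=0
    )
    target = r + gamma * (1.0 - done) * next_q
    return float(np.mean((q - target) ** 2))
```

In an actual offline RL method, a loss of this kind would replace or complement the standard critic loss of the base algorithm and would be minimized with an automatic-differentiation framework; the NumPy version only illustrates the averaging over augmented states.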