Robust Training with Ensemble Consensus


Oct 22, 2019

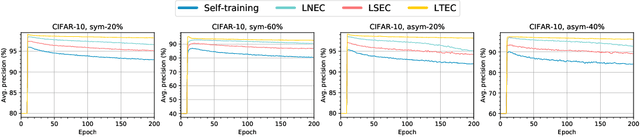

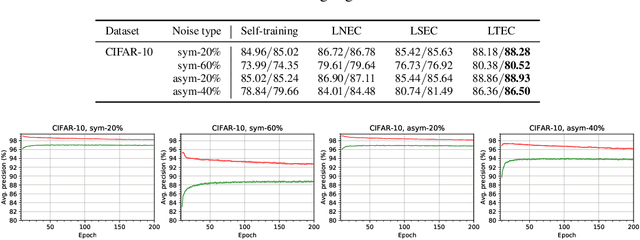

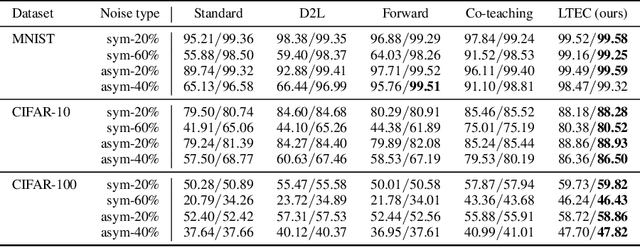

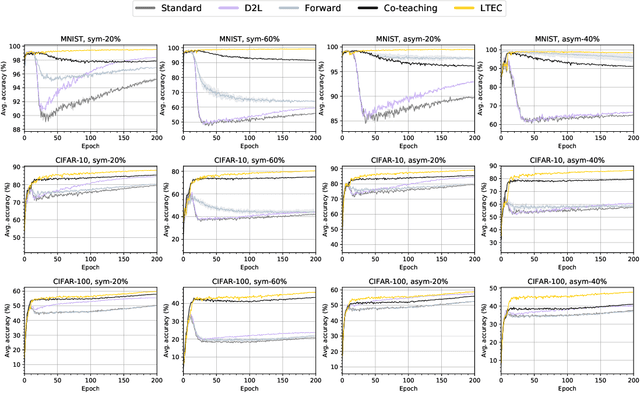

Because deep neural networks are over-parametrized, they can memorize noisy examples. We address this memorization problem in the presence of annotation noise. Since deep neural networks cannot generalize to neighborhoods of features acquired via memorization, we find that noisy examples do not consistently incur small losses on the network when it is perturbed. Based on this observation, we propose a novel training method called Learning with Ensemble Consensus (LEC), which prevents overfitting to noisy examples by identifying them via the consensus of an ensemble of perturbed networks and eliminating them from training. One of the proposed LECs, LTEC, outperforms the current state-of-the-art methods on MNIST, CIFAR-10, and CIFAR-100 while using memory efficiently.
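The consensus idea in the abstract can be illustrated with a minimal sketch. The abstract does not spell out the selection rule, so the following assumes one plausible reading: each perturbed network votes for the examples on which it incurs the smallest losses, and only examples that every network votes for are kept. The function name `consensus_mask`, the `keep_fraction` parameter, and the toy loss values are hypothetical and are not taken from the paper.

```python
import numpy as np


def consensus_mask(losses, keep_fraction):
    """Return a boolean mask over examples kept by ensemble consensus.

    losses: array of shape (n_networks, n_examples), where losses[m, i] is
        the loss of example i under the m-th perturbed network.
    keep_fraction: fraction of examples each network treats as small-loss
        (an assumed hyperparameter, e.g. 1 minus an estimated noise ratio).
    """
    losses = np.asarray(losses, dtype=float)
    n_networks, n_examples = losses.shape

    # Number of small-loss examples each network votes for, clamped to [1, n].
    k = int(round(keep_fraction * n_examples))
    k = max(1, min(k, n_examples))

    # Indices of the k smallest losses for each network (order within the k is arbitrary).
    small_idx = np.argpartition(losses, k - 1, axis=1)[:, :k]

    # votes[m, i] is True when network m considers example i small-loss.
    votes = np.zeros((n_networks, n_examples), dtype=bool)
    np.put_along_axis(votes, small_idx, True, axis=1)

    # Consensus: keep an example only if every network voted for it.
    return votes.all(axis=0)


# Toy example: 3 perturbed networks, 4 training examples (made-up losses).
toy_losses = np.array([
    [0.1, 0.2, 2.0, 0.3],
    [0.2, 0.1, 0.3, 1.9],
    [0.1, 0.3, 1.5, 0.2],
])
kept = consensus_mask(toy_losses, keep_fraction=0.75)
print(kept)
```

In this toy input, examples 2 and 3 each have a small loss under some networks but a large loss under another, so they fail the consensus test, which mirrors the abstract's observation that noisy examples do not consistently incur small losses under perturbation.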
