Robust Semi-Supervised Classification using GANs with Self-Organizing Maps


Oct 19, 2021

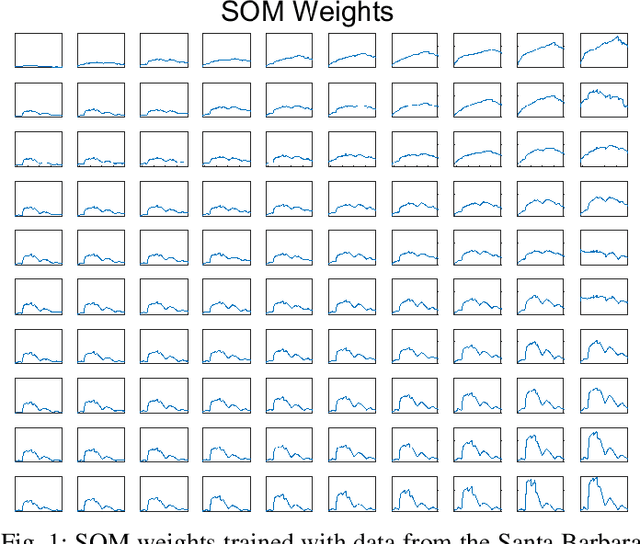

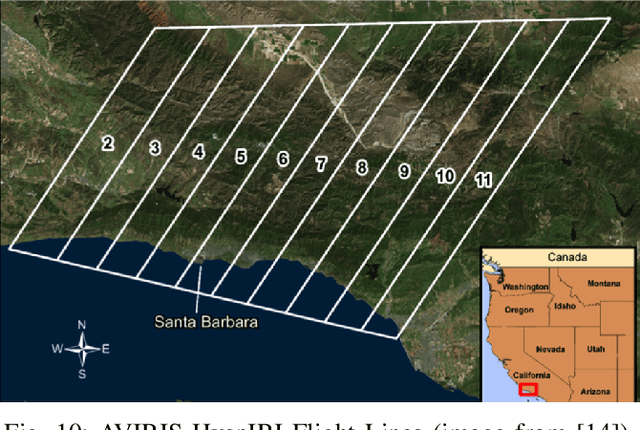

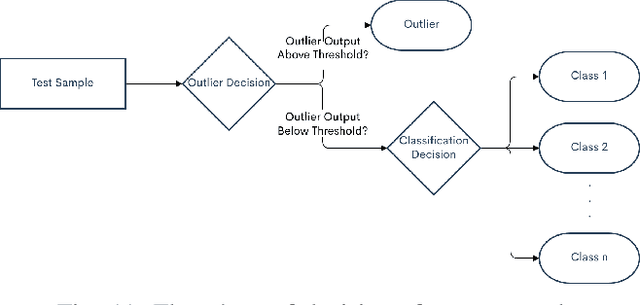

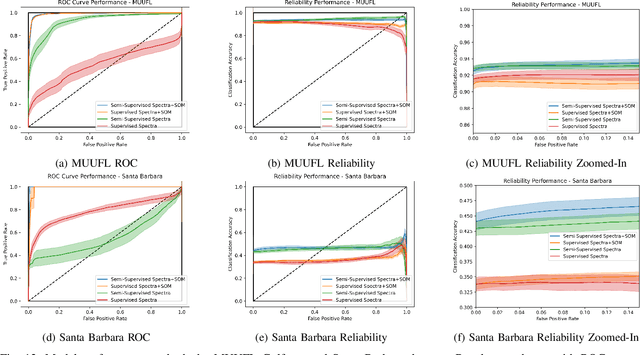

Generative adversarial networks (GANs) have shown tremendous promise in learning to generate data and have proven effective at aiding semi-supervised classification. However, to this point, semi-supervised GAN (SS-GAN) methods assume that the unlabeled data set contains only samples from the joint distribution of the classes of interest, referred to as inliers. Consequently, when presented with a sample from another distribution, referred to as an outlier, a GAN performs poorly at recognizing that it is not qualified to make a decision on that sample. The problem of discriminating outliers from inliers while maintaining classification accuracy is referred to here as the DOIC problem. In this work, we describe an architecture that combines self-organizing maps (SOMs) with SS-GANs with the goal of mitigating the DOIC problem, and we present experimental results indicating that the architecture achieves this goal. Multiple experiments were conducted on hyperspectral image data sets. The SS-GANs performed slightly better than supervised GANs on classification problems, both with and without the SOM. Incorporating the SOMs into the SS-GANs and the supervised GANs substantially mitigated the DOIC problem compared with SS-GANs and GANs without the SOMs. Furthermore, the SS-GANs performed much better than GANs on the DOIC problem, even without the SOMs.
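The abstract does not state how the SOM is coupled to the classifier, so the following is only a minimal sketch of one plausible arrangement, not the authors' architecture. It assumes the SOM is fitted on inlier data and that a sample is rejected as an outlier when its quantization error (distance to the best-matching unit) exceeds a threshold taken from a quantile of the inlier errors. `TinySOM`, `fit_threshold`, `classify_with_rejection`, and the `classifier` callable (a stand-in for the class prediction of an SS-GAN discriminator) are all hypothetical names introduced for illustration.

```python
import numpy as np


class TinySOM:
    """Minimal rectangular SOM (illustrative only, not the paper's code)."""

    def __init__(self, rows, cols, dim, seed=0):
        self.rng = np.random.default_rng(seed)
        # One weight vector per map unit.
        self.weights = self.rng.normal(size=(rows * cols, dim))
        # Grid coordinates of each unit, used by the neighborhood function.
        self.grid = np.array(
            [(r, c) for r in range(rows) for c in range(cols)], dtype=float
        )

    def fit(self, X, epochs=20, lr0=0.5, sigma0=None):
        if sigma0 is None:
            sigma0 = max(float(self.grid.max()), 1.0)
        n_steps = epochs * len(X)
        step = 0
        for _ in range(epochs):
            for x in X[self.rng.permutation(len(X))]:
                frac = step / n_steps
                lr = lr0 * (1.0 - frac)                 # decaying learning rate
                sigma = sigma0 * (1.0 - frac) + 1e-2    # shrinking neighborhood
                # Best-matching unit: the unit whose weights are closest to x.
                bmu = np.argmin(np.linalg.norm(self.weights - x, axis=1))
                # Gaussian neighborhood around the BMU on the map grid.
                d2 = np.sum((self.grid - self.grid[bmu]) ** 2, axis=1)
                h = np.exp(-d2 / (2.0 * sigma ** 2))
                self.weights += lr * h[:, None] * (x - self.weights)
                step += 1
        return self

    def quantization_error(self, X):
        """Distance from each sample to its best-matching unit."""
        d = np.linalg.norm(X[:, None, :] - self.weights[None, :, :], axis=2)
        return d.min(axis=1)


def fit_threshold(som, X_inliers, quantile=0.99):
    """Assumed rule: reject anything farther from the map than most inliers."""
    return float(np.quantile(som.quantization_error(X_inliers), quantile))


def classify_with_rejection(som, threshold, classifier, X, reject_label=-1):
    """Return class labels, replaced by reject_label where the SOM flags an outlier."""
    labels = np.asarray(classifier(X))
    is_outlier = som.quantization_error(X) > threshold
    return np.where(is_outlier, reject_label, labels)
```

In this arrangement the classifier is never asked to commit to a class for samples that lie far from the map, which is one way to separate the outlier decision from the classification decision. The quantile used for the threshold trades inlier rejection against outlier acceptance and would need tuning on real data.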
