Robust scalable initialization for Bayesian variational inference with multi-modal Laplace approximations


Jul 12, 2023

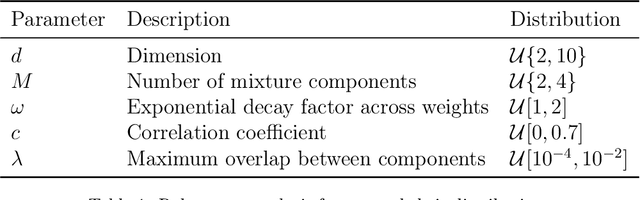

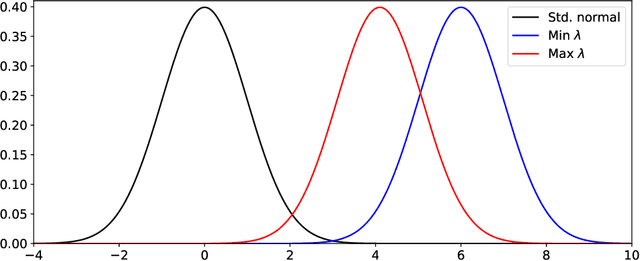

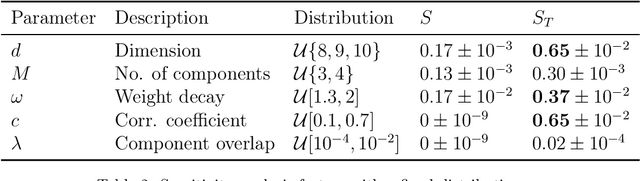

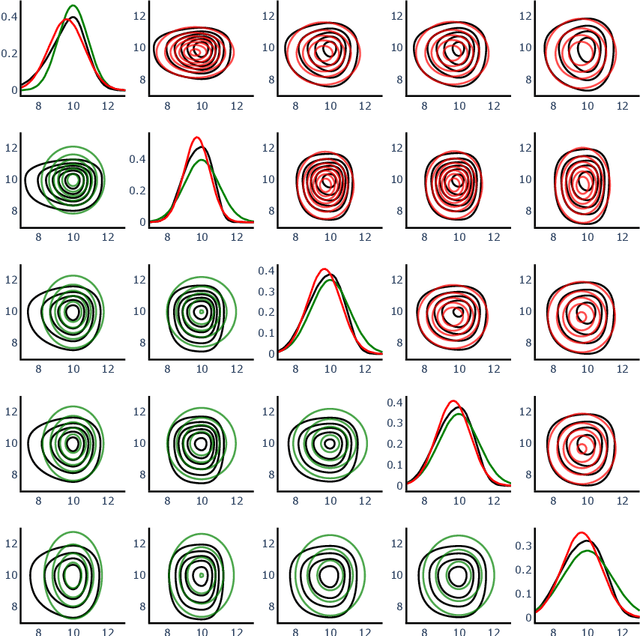

For predictive modeling relying on Bayesian inversion, fully independent, or "mean-field", Gaussian distributions are often used as approximate probability density functions in variational inference because the number of variational parameters is then only twice the number of unknown model parameters. The resulting diagonal covariance structure, coupled with unimodal behavior, can be too restrictive for highly non-Gaussian posteriors, including multimodal ones. High-fidelity surrogate posteriors in the form of Gaussian mixtures can capture any distribution to an arbitrary degree of accuracy while maintaining some analytical tractability. However, variational inference with full-covariance Gaussian mixtures suffers from quadratic growth in the number of variational parameters with the number of model parameters. Coupled with the existence of multiple local minima due to the nonconvexity of the loss functions often associated with variational inference, these challenges motivate the need for robust initialization procedures that improve the performance and scalability of variational inference with mixture models. In this work, we propose a method for constructing an initial Gaussian mixture model approximation that can be used to warm-start the iterative solvers for variational inference. The procedure begins with an optimization stage in model parameter space in which local gradient-based optimization, globalized through multistart, is used to determine a set of local maxima, which we take to approximate the mixture component centers. Around each mode, a local Gaussian approximation is constructed via the Laplace method. Finally, the mixture weights are determined through constrained least squares regression. Robustness and scalability are demonstrated using synthetic tests. The methodology is applied to an inversion problem in structural dynamics involving unknown viscous damping coefficients.
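
The three stages named in the abstract (multistart local optimization, a Laplace approximation around each mode, and constrained least squares for the weights) can be illustrated with a minimal Python sketch. It is not the authors' implementation. The toy two-dimensional log-posterior `log_post`, the helper names `find_modes`, `laplace_cov`, and `fit_weights`, the finite-difference Hessian, and the choice of regression points are all assumptions made for illustration. In particular, the constraint is handled here by non-negative least squares followed by normalization, which is only one possible reading of "constrained least squares regression".

```python
import numpy as np
from scipy.optimize import minimize, nnls
from scipy.special import logsumexp
from scipy.stats import multivariate_normal

# Hypothetical unnormalized log-posterior: two well-separated Gaussian bumps.
TRUE_CENTERS = np.array([[-2.0, 0.0], [2.0, 0.0]])
TRUE_WEIGHTS = np.array([0.3, 0.7])
SIGMA = 0.5

def log_post(x):
    sq = np.sum((np.asarray(x) - TRUE_CENTERS) ** 2, axis=1)
    return logsumexp(np.log(TRUE_WEIGHTS) - 0.5 * sq / SIGMA**2)

def neg_log_post(x):
    return -log_post(x)

def find_modes(n_starts, bounds, dim, rng, tol=1e-2):
    """Stage 1: gradient-based local optimization from random starts."""
    lo, hi = bounds
    modes = []
    for x0 in rng.uniform(lo, hi, size=(n_starts, dim)):
        x = minimize(neg_log_post, x0, method="BFGS").x
        # Keep only optima that are not duplicates of one already found.
        if all(np.linalg.norm(x - m) > tol for m in modes):
            modes.append(x)
    return np.array(modes)

def laplace_cov(mode, h=1e-3):
    """Stage 2: covariance = inverse Hessian of the negative log-posterior."""
    n = mode.size
    E = h * np.eye(n)
    H = np.zeros((n, n))
    for i in range(n):
        for j in range(n):
            # Central finite-difference estimate of the (i, j) second derivative.
            H[i, j] = (neg_log_post(mode + E[i] + E[j])
                       - neg_log_post(mode + E[i] - E[j])
                       - neg_log_post(mode - E[i] + E[j])
                       + neg_log_post(mode - E[i] - E[j])) / (4 * h * h)
    C = np.linalg.inv(0.5 * (H + H.T))
    return 0.5 * (C + C.T)  # symmetrize against round-off

def fit_weights(modes, covs, rng, n_per_comp=50):
    """Stage 3: non-negative least squares fit of the mixture to the target."""
    samples = [rng.multivariate_normal(m, C, size=n_per_comp)
               for m, C in zip(modes, covs)]
    pts = np.vstack(samples + [modes])
    # Column k holds the density of component k at every regression point.
    A = np.column_stack([multivariate_normal.pdf(pts, mean=m, cov=C)
                         for m, C in zip(modes, covs)])
    b = np.exp([log_post(p) for p in pts])  # unnormalized target values
    w, _ = nnls(A, b)
    return w / w.sum()  # normalization absorbs the unknown evidence constant

rng = np.random.default_rng(0)
modes = find_modes(n_starts=20, bounds=(-4.0, 4.0), dim=2, rng=rng)
covs = [laplace_cov(m) for m in modes]
weights = fit_weights(modes, covs, rng)
```

The resulting `modes`, `covs`, and `weights` define a Gaussian mixture that could serve as the starting point for a variational solver. The finite-difference Hessian costs a number of posterior evaluations that grows quadratically with dimension, so a scalable version would need a cheaper source of curvature information; this sketch does not address that.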
