Robust Pedestrian Attribute Recognition Using Group Sparsity for Occlusion Videos

Paper and Code

Oct 17, 2021

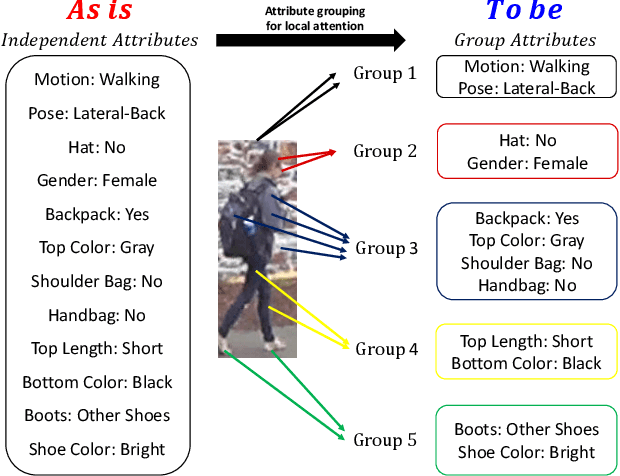

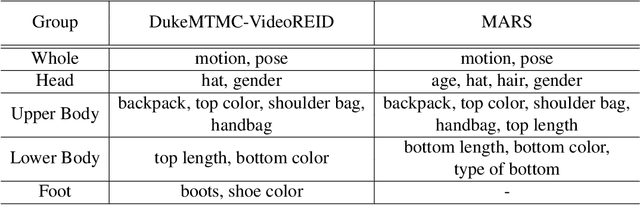

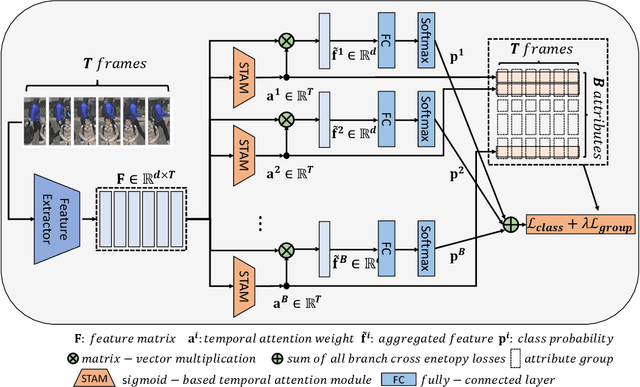

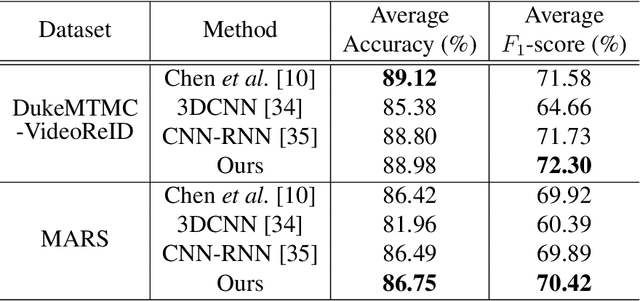

Occlusion processing is a key issue in pedestrian attribute recognition (PAR). Nevertheless, several existing video-based PAR methods have not yet considered occlusion handling in depth. In this paper, we formulate finding non-occluded frames as sparsity-based temporal attention of a crowded video. In this manner, a model is guided not to pay attention to the occluded frame. However, temporal sparsity cannot include a correlation between attributes when occlusion occurs. For example, "boots" and "shoe color" cannot be recognized when the foot is invisible. To solve the uncorrelated attention issue, we also propose a novel group sparsity-based temporal attention module. Group sparsity is applied across attention weights in correlated attributes. Thus, attention weights in a group are forced to pay attention to the same frames. Experimental results showed that the proposed method achieved a higher F1-score than the state-of-the-art methods on two video-based PAR datasets and five occlusion scenarios.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge