Robust Learning of Noisy Time Series Collections Using Stochastic Process Models with Motion Codes

Feb 21, 2024

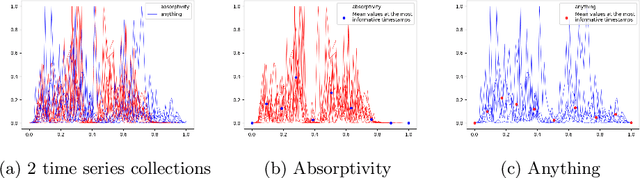

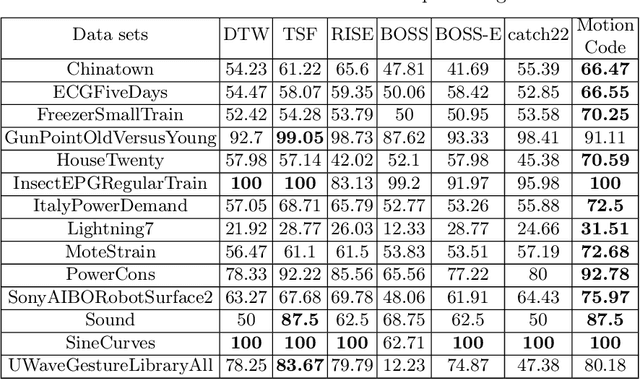

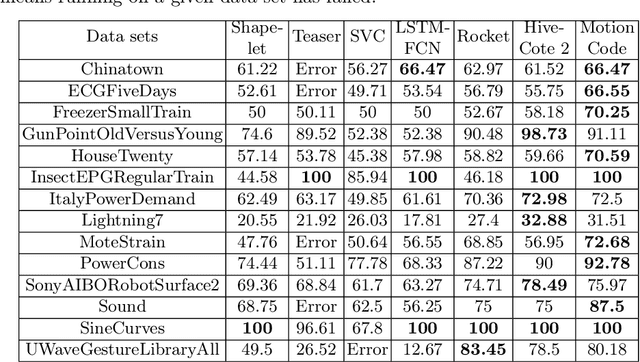

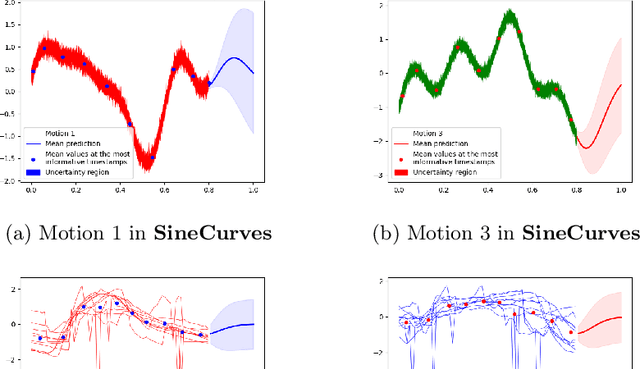

While time series classification and forecasting have been studied extensively, noisy time series of arbitrary length remain challenging. Each time series instance can be thought of as a sample realization of a noisy dynamical model, which is characterized by a continuous stochastic process. In many applications the data are mixed, consisting of several types of noisy time series modeled by multiple stochastic processes, which makes forecasting and classification even harder. Instead of naively fitting a separate regression to each time series type, we take a latent variable model approach using a mixture of Gaussian processes with learned spectral kernels. More specifically, we automatically assign each type of noisy time series a signature vector called its motion code. Then, conditioned on each assigned motion code, we infer a sparse approximation of the corresponding time series using the concept of the most informative timestamps. Our unmixing classification approach maximizes the likelihood across all the mixed noisy time series of varying lengths. This stochastic approach lets us learn not only within a single type of noisy time series but also across many underlying stochastic processes, so that multiple dynamical models are learned in an integrated and robust manner. The learned latent stochastic models in turn allow us to generate forecasts specific to each sub-type. We provide several quantitative comparisons demonstrating the performance of our approach.
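As a rough illustration of likelihood-based classification over series of varying lengths, the following minimal sketch scores a noisy series under one Gaussian process per class and picks the class with the highest marginal log-likelihood. It is not the paper's method: it assumes a single-component spectral kernel with fixed, hand-chosen parameters per class in place of learned motion codes, and it uses the full kernel matrix rather than a sparse approximation over most informative timestamps. The names `spectral_kernel`, `gp_log_likelihood`, and `classify` are hypothetical.

```python
import numpy as np


def spectral_kernel(t1, t2, weight, mean, var):
    """Single-component spectral kernel (an assumed, simplified choice).

    k(tau) = weight * exp(-2 * pi^2 * tau^2 * var) * cos(2 * pi * tau * mean)
    """
    tau = t1[:, None] - t2[None, :]
    envelope = np.exp(-2.0 * np.pi**2 * tau**2 * var)
    return weight * envelope * np.cos(2.0 * np.pi * tau * mean)


def gp_log_likelihood(t, y, params, noise=0.1):
    """Marginal log-likelihood of observations y at timestamps t under a zero-mean GP."""
    n = len(t)
    K = spectral_kernel(t, t, *params) + noise**2 * np.eye(n)
    L = np.linalg.cholesky(K)
    # Solve K @ alpha = y using the Cholesky factor.
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    return -0.5 * y @ alpha - np.log(np.diag(L)).sum() - 0.5 * n * np.log(2.0 * np.pi)


def classify(t, y, class_params, noise=0.1):
    """Return the index of the class whose GP assigns the series the highest likelihood."""
    scores = [gp_log_likelihood(t, y, p, noise) for p in class_params]
    return int(np.argmax(scores))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical per-class kernel parameters: (weight, spectral mean, spectral variance).
    class_params = [(1.0, 0.5, 0.05), (1.0, 2.0, 0.05)]
    # A noisy series with irregular timestamps and dominant frequency 2.0.
    t = np.sort(rng.uniform(0.0, 4.0, size=25))
    y = np.sin(2.0 * np.pi * 2.0 * t) + 0.1 * rng.standard_normal(25)
    print("predicted class:", classify(t, y, class_params))
```

Because the likelihood is evaluated directly at each series' own timestamps, the same function handles series of different lengths and irregular sampling without resampling or padding.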