Robust Hypothesis Test for Nonlinear Effect with Gaussian Processes

Paper and Code

Oct 27, 2017

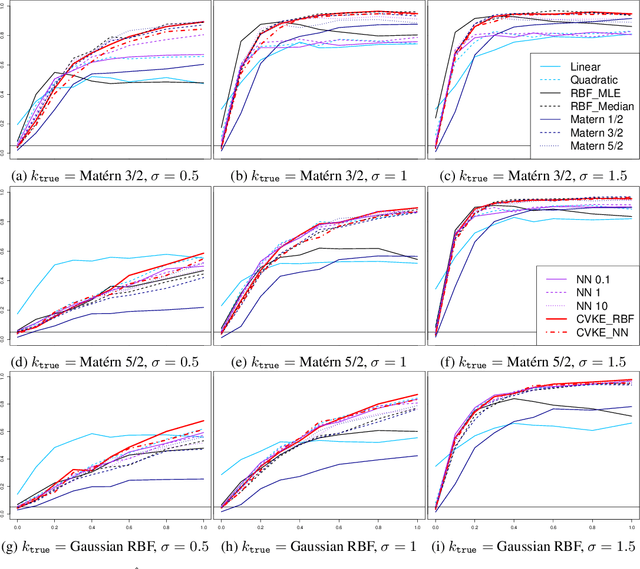

This work constructs a hypothesis test for detecting whether an data-generating function $h: R^p \rightarrow R$ belongs to a specific reproducing kernel Hilbert space $\mathcal{H}_0$ , where the structure of $\mathcal{H}_0$ is only partially known. Utilizing the theory of reproducing kernels, we reduce this hypothesis to a simple one-sided score test for a scalar parameter, develop a testing procedure that is robust against the mis-specification of kernel functions, and also propose an ensemble-based estimator for the null model to guarantee test performance in small samples. To demonstrate the utility of the proposed method, we apply our test to the problem of detecting nonlinear interaction between groups of continuous features. We evaluate the finite-sample performance of our test under different data-generating functions and estimation strategies for the null model. Our results reveal interesting connections between notions in machine learning (model underfit/overfit) and those in statistical inference (i.e. Type I error/power of hypothesis test), and also highlight unexpected consequences of common model estimating strategies (e.g. estimating kernel hyperparameters using maximum likelihood estimation) on model inference.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge