Robust Face Recognition with Deeply Normalized Depth Images


May 01, 2018

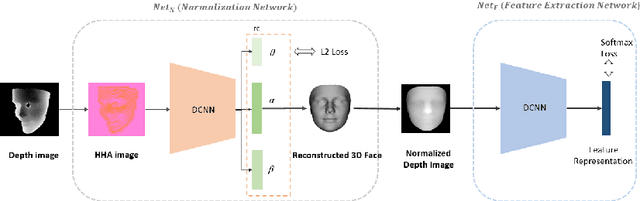

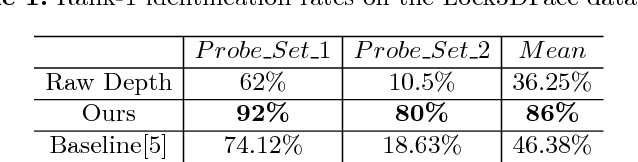

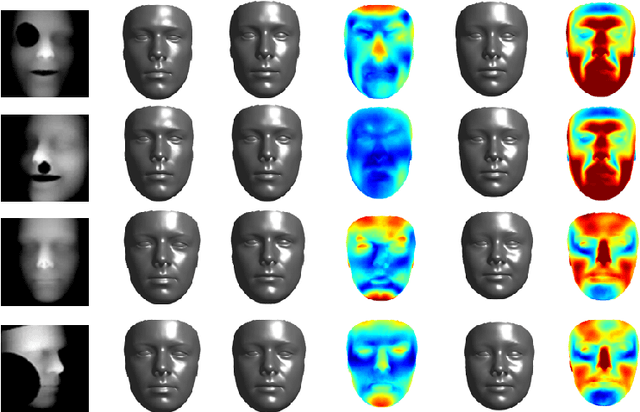

Depth information has proven useful for face recognition. However, existing depth-image-based face recognition methods still suffer from noisy depth values and from variations in pose and expression. In this paper, we propose a novel method that normalizes facial depth images to frontal pose and neutral expression and extracts robust features from the normalized depth images. The method is implemented with two deep convolutional neural networks (DCNNs): a normalization network ($Net_{N}$) and a feature extraction network ($Net_{F}$). Given a facial depth image, $Net_{N}$ first converts it to an HHA image, from which the 3D face is reconstructed via a DCNN. $Net_{N}$ then generates a pose-and-expression normalized (PEN) depth image from the reconstructed 3D face. Finally, the PEN depth image is passed to $Net_{F}$, which extracts a robust feature representation for face recognition via another DCNN. Our preliminary evaluation results demonstrate the superiority of the proposed method in recognizing faces of arbitrary poses and expressions from depth images.
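The data flow described in the abstract can be sketched as a simple composition of stages. This is only an illustration of the ordering (depth image, HHA image, reconstructed 3D face, PEN depth image, feature vector), not the authors' implementation: the class `DepthFacePipeline`, its field names, and the string-tagging stand-in stages are all hypothetical, and the real stages would be an HHA encoder, a renderer, and trained DCNNs.

```python
from dataclasses import dataclass
from typing import Any, Callable

# A stage is any callable mapping one intermediate result to the next.
Stage = Callable[[Any], Any]


@dataclass
class DepthFacePipeline:
    """Hypothetical container for the stages named in the abstract."""

    # Stages that make up Net_N (normalization network).
    to_hha: Stage          # depth image -> HHA image
    reconstruct_3d: Stage  # HHA image -> 3D face (a DCNN in the paper)
    render_pen: Stage      # 3D face -> pose-and-expression normalized depth image
    # Stage that makes up Net_F (feature extraction network).
    extract: Stage         # PEN depth image -> feature representation (a DCNN in the paper)

    def normalize(self, depth: Any) -> Any:
        """Net_N: depth image -> HHA -> 3D face -> PEN depth image."""
        return self.render_pen(self.reconstruct_3d(self.to_hha(depth)))

    def features(self, depth: Any) -> Any:
        """Full pipeline: Net_N followed by Net_F."""
        return self.extract(self.normalize(depth))


def tag(name: str) -> Stage:
    """Stand-in stage that only records its name, to make the call order visible."""
    return lambda x: f"{name}({x})"


pipeline = DepthFacePipeline(
    to_hha=tag("hha"),
    reconstruct_3d=tag("recon3d"),
    render_pen=tag("pen"),
    extract=tag("net_f"),
)
print(pipeline.features("depth"))  # net_f(pen(recon3d(hha(depth))))
```

Keeping each stage as a separate callable mirrors the abstract's split between $Net_{N}$ and $Net_{F}$: the normalization stages can be swapped or inspected without touching the feature extractor.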
