RL-MUL: Multiplier Design Optimization with Deep Reinforcement Learning

Paper and Code

Mar 31, 2024

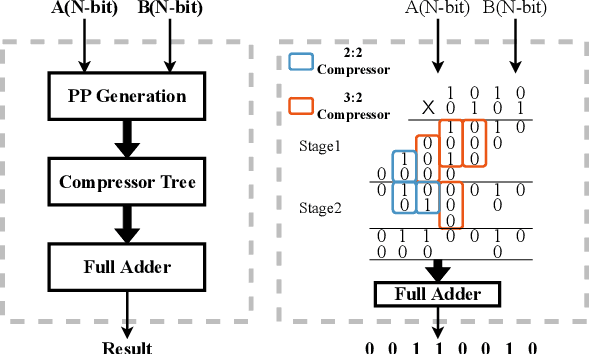

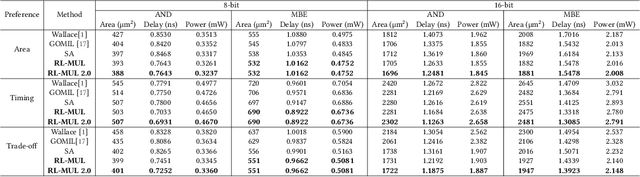

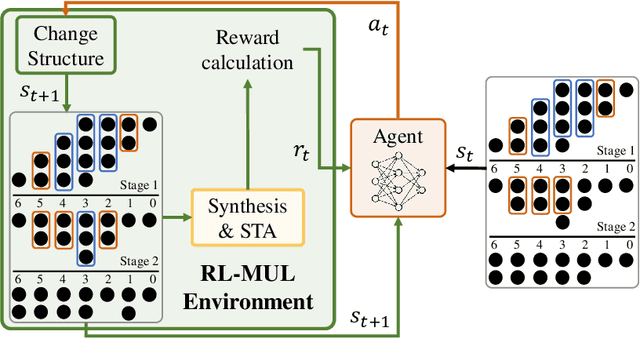

Multiplication is a fundamental operation in many applications, and multipliers are widely adopted in various circuits. However, optimizing multipliers is challenging and non-trivial due to the huge design space. In this paper, we propose RL-MUL, a multiplier design optimization framework based on reinforcement learning. Specifically, we utilize matrix and tensor representations for the compressor tree of a multiplier, based on which the convolutional neural networks can be seamlessly incorporated as the agent network. The agent can learn to optimize the multiplier structure based on a Pareto-driven reward which is customized to accommodate the trade-off between area and delay. Additionally, the capability of RL-MUL is extended to optimize the fused multiply-accumulator (MAC) designs. Experiments are conducted on different bit widths of multipliers. The results demonstrate that the multipliers produced by RL-MUL can dominate all baseline designs in terms of area and delay. The performance gain of RL-MUL is further validated by comparing the area and delay of processing element arrays using multipliers from RL-MUL and baseline approaches.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge