Revisiting Saliency Metrics: Farthest-Neighbor Area Under Curve


Feb 24, 2020

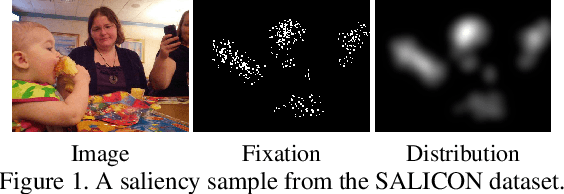

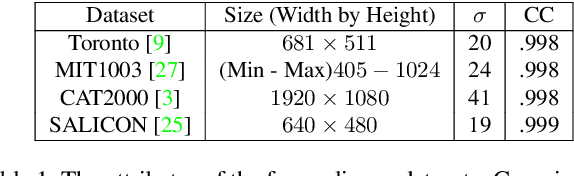

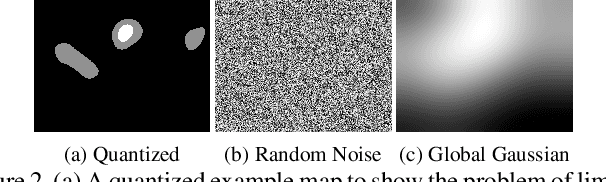

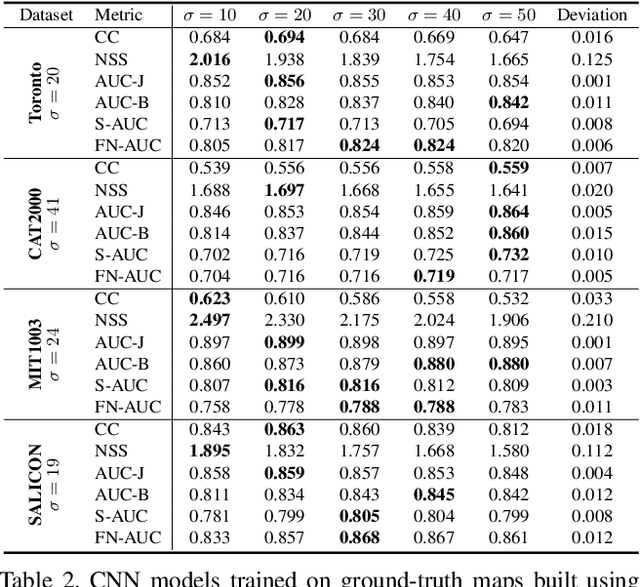

Saliency detection has been widely studied because it plays an important role in various vision applications, but saliency systems are difficult to evaluate because each measure has its own bias. In this paper, we first revisit the problem of applying widely used saliency metrics to modern Convolutional Neural Networks (CNNs). Our investigation shows that saliency datasets have been built with different choices of parameters and that CNNs are designed to fit a dataset-specific distribution. Second, we show that the Shuffled Area Under Curve (S-AUC) metric still suffers from spatial biases. We propose a new saliency metric based on the AUC property, denoted Farthest-Neighbor AUC (FN-AUC), which aims to sample a more directional negative set for evaluation. We also propose a strategy to measure the quality of the sampled negative set. Our experiments show that FN-AUC measures spatial biases, both central and peripheral, more effectively than S-AUC without penalizing the fixation locations. Third, we propose a global smoothing function to overcome the problem of few value degrees (output quantization) in computing AUC metrics. Compared with adding random noise, our smoothing function creates unique values without losing the relative saliency relationship.
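To make the role of the negative set concrete, here is a minimal Python sketch of a shuffled-AUC-style score. It is not the paper's implementation: the function names `auc_from_sets` and `shuffled_auc`, the (row, col) fixation format, and the pairwise tie handling are all assumptions made for illustration. Positives are saliency values at the image's own fixations; negatives are values at fixation locations borrowed from other images. FN-AUC, as described above, changes how the negative set is chosen; that sampling rule is not specified in the abstract and is not reproduced here.

```python
import numpy as np

def auc_from_sets(pos, neg):
    """AUC as the probability that a positive value exceeds a negative value.

    Ties count as 0.5, so a heavily quantized map (few distinct values)
    is pulled toward 0.5 regardless of its ranking quality.
    """
    pos = np.asarray(pos, dtype=float)
    neg = np.asarray(neg, dtype=float)
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (pos.size * neg.size)

def shuffled_auc(saliency, fixations, other_fixations):
    """Hypothetical S-AUC-style score (illustrative, not the reference code).

    saliency:        2D array of predicted saliency values
    fixations:       (N, 2) integer (row, col) fixations on this image
    other_fixations: (M, 2) integer (row, col) fixations from other images,
                     used as the negative set
    """
    fix = np.asarray(fixations, dtype=int)
    oth = np.asarray(other_fixations, dtype=int)
    pos = saliency[fix[:, 0], fix[:, 1]]
    neg = saliency[oth[:, 0], oth[:, 1]]
    return auc_from_sets(pos, neg)

# Toy example: fixations sit on the two highest-valued pixels.
sal = np.array([[0.9, 0.1],
                [0.2, 0.8]])
score = shuffled_auc(sal, [(0, 0), (1, 1)], [(0, 1), (1, 0)])

# A constant map has only one value degree, so every comparison is a tie.
flat_score = shuffled_auc(np.ones((2, 2)), [(0, 0), (1, 1)], [(0, 1), (1, 0)])
```

In this toy setup the constant map illustrates the output-quantization issue in its most extreme form: with a single value degree, every positive-negative pair ties and the score carries no ranking information.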
