Revisiting Methods for Finding Influential Examples

Paper and Code

Nov 08, 2021

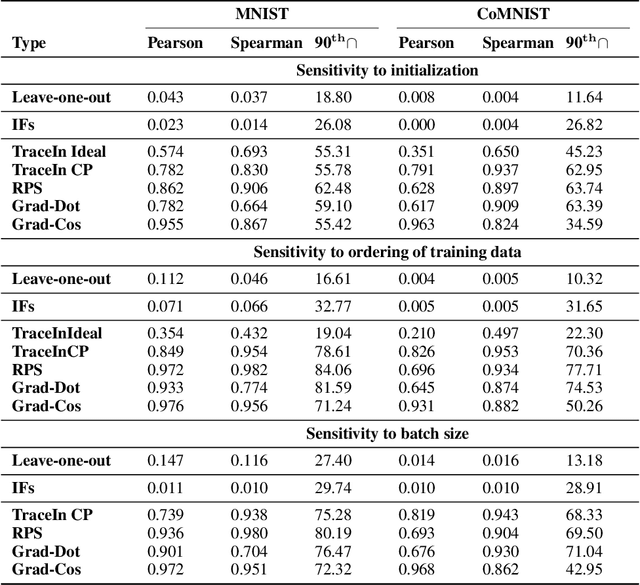

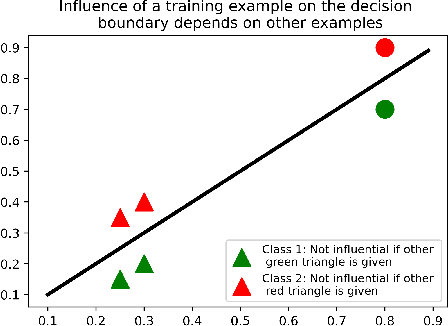

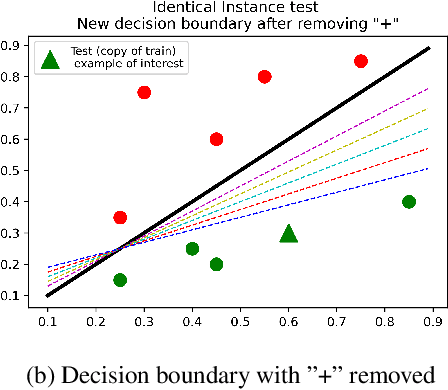

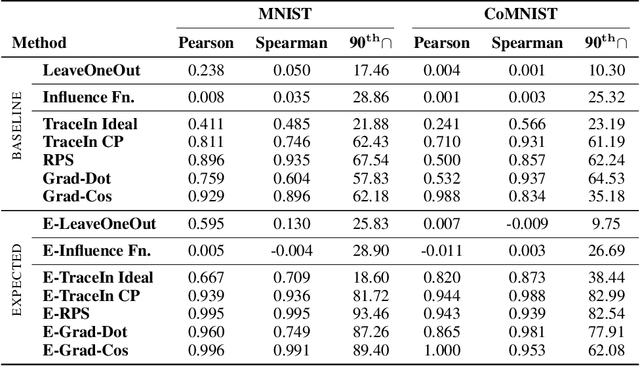

Several instance-based explainability methods for finding the training examples that most influence a test-time decision have been proposed recently, including Influence Functions, TraceIn, Representer Point Selection, Grad-Dot, and Grad-Cos. These methods are typically evaluated against leave-one-out (LOO) influence (Cook's distance) as a gold standard, or with various heuristics. In this paper, we show that all of the above methods are unstable, i.e., extremely sensitive to initialization, ordering of the training data, and batch size. We suggest that this is a natural consequence of a common assumption in the literature, namely that the influence of an example is independent of the model state and of other examples, and we argue that this assumption does not hold. We show that LOO influence and heuristics are, as a result, poor metrics for the quality of instance-based explanations, and we instead propose evaluating such explanations by their ability to detect poisoning attacks. Further, we provide a simple yet effective baseline that improves all of the above methods, and we show that it leads to very significant improvements on downstream tasks.
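To make two of the listed methods concrete, here is a minimal sketch of Grad-Dot and Grad-Cos as they are commonly described: the influence of a training example on a test example is scored by the dot product, or the cosine similarity, of their loss gradients at the current parameters. The logistic-regression model, the helper names (`logistic_loss_grad`, `grad_dot`, `grad_cos`), and the random toy data are assumptions made for illustration and are not taken from the paper or its code.

```python
import numpy as np

def logistic_loss_grad(w, x, y):
    """Gradient of the logistic loss w.r.t. w for one example (x, y), y in {0, 1}."""
    p = 1.0 / (1.0 + np.exp(-(x @ w)))
    return (p - y) * x

def per_example_grads(w, X, y):
    """Stack the per-example loss gradients into an (n_examples, n_params) array."""
    return np.stack([logistic_loss_grad(w, xi, yi) for xi, yi in zip(X, y)])

def grad_dot(w, train_X, train_y, x_test, y_test):
    """Grad-Dot score: dot product of each training gradient with the test gradient."""
    g_test = logistic_loss_grad(w, x_test, y_test)
    return per_example_grads(w, train_X, train_y) @ g_test

def grad_cos(w, train_X, train_y, x_test, y_test):
    """Grad-Cos score: cosine similarity between training and test gradients."""
    g_test = logistic_loss_grad(w, x_test, y_test)
    g_train = per_example_grads(w, train_X, train_y)
    norms = np.linalg.norm(g_train, axis=1) * np.linalg.norm(g_test)
    # Guard against division by zero for vanishing gradients.
    return (g_train @ g_test) / np.maximum(norms, 1e-12)

# Hypothetical toy data: 5 training examples with 3 features each.
rng = np.random.default_rng(0)
train_X = rng.normal(size=(5, 3))
train_y = np.array([0, 1, 1, 0, 1])
w = rng.normal(size=3)  # stands in for trained parameters

# Use the first training example as the test point.
x_test, y_test = train_X[0], train_y[0]
dot_scores = grad_dot(w, train_X, train_y, x_test, y_test)
cos_scores = grad_cos(w, train_X, train_y, x_test, y_test)
```

Both scores are computed at a single parameter vector `w`, so rerunning the sketch with a different `w` (for example, from a different initialization or data ordering) can change the resulting ranking. This is the kind of dependence on model state that the abstract refers to.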
