Revisiting graph neural networks and distance encoding from a practical view


Nov 29, 2020

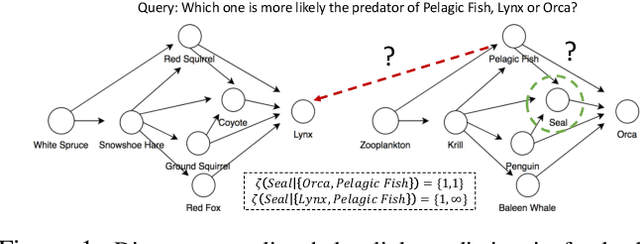

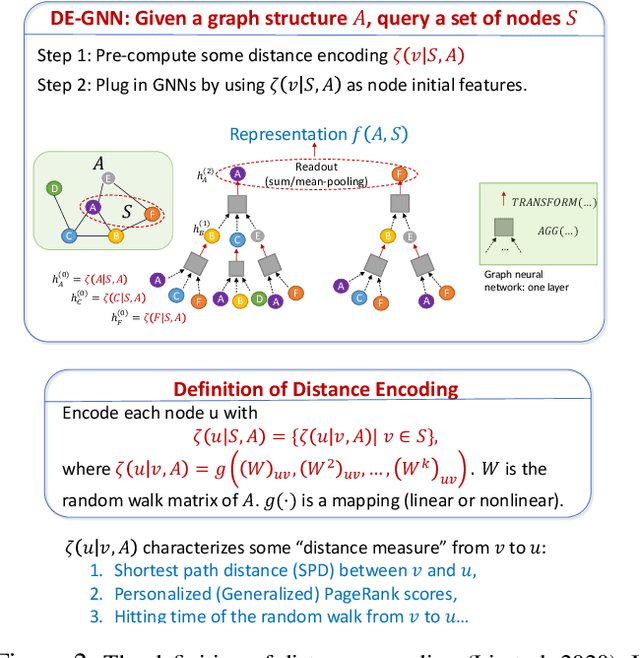

Graph neural networks (GNNs) are widely used in applications based on graph-structured data, such as node classification and link prediction. However, GNNs are often used as a black-box tool and are rarely investigated in depth to determine whether they fit specific applications, which may have various properties. A recently proposed technique, distance encoding (DE) (Li et al. 2020), makes GNNs work remarkably well in many applications, including node classification and link prediction. The theory in (Li et al. 2020) supports DE by proving that it improves the representation power of GNNs. However, it is not obvious how this theoretical result translates into better performance in these applications. Here, we revisit GNNs and DE from a more practical point of view, aiming to explain how DE makes GNNs suitable for node classification and link prediction. Specifically, for link prediction, DE can be viewed as a way to establish correlations between the representations of the two nodes in a candidate pair. For node classification, the problem is more complicated because different classification tasks may have node labels with different physical meanings. We focus on the most widely considered node classification scenarios, categorize node labels into two types (community type and structure type), and then analyze the different mechanisms that GNNs adopt to predict these two types of labels. We also run extensive experiments comparing eight configurations of GNNs paired with DE for predicting node labels on eight real-world graphs. The results demonstrate that DE is uniformly effective for predicting structure-type labels. Lastly, we draw three conclusions on how to use GNNs and DE properly in node classification tasks.
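To make the link-prediction view more concrete, here is a minimal sketch of DE-style node features. It assumes shortest-path distance (SPD), capped at `max_dist`, as the distance measure and sum pooling over the target node set as the aggregation; other distance measures and aggregations are possible. The function names `bfs_distances` and `distance_encoding` are hypothetical and not taken from the authors' code. For a candidate link (u, v), the target set is {u, v}, so every node's features record how far it lies from both endpoints. This shared reference frame is one way the two endpoint representations become correlated.

```python
from collections import deque
from typing import Dict, Iterable, List


def bfs_distances(adj: Dict[int, List[int]], source: int, max_dist: int) -> Dict[int, int]:
    """Shortest-path distance from `source` to every node in `adj`.

    Distances larger than `max_dist` (including unreachable nodes) are
    capped at `max_dist + 1`, so every value fits a fixed one-hot width.
    """
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        if dist[u] >= max_dist:
            continue  # no need to expand nodes already at the cap
        for w in adj[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    return {node: dist.get(node, max_dist + 1) for node in adj}


def distance_encoding(
    adj: Dict[int, List[int]], target_set: Iterable[int], max_dist: int = 3
) -> Dict[int, List[int]]:
    """Hypothetical DE features: sum of one-hot capped SPDs to each target node."""
    width = max_dist + 2  # buckets for distances 0..max_dist, plus one for "farther"
    features = {node: [0] * width for node in adj}
    for s in target_set:
        dist = bfs_distances(adj, s, max_dist)
        for node in adj:
            features[node][dist[node]] += 1  # sum pooling over the target set
    return features


if __name__ == "__main__":
    # Path graph 0-1-2-3 plus an isolated node 4; candidate link (0, 3).
    adj = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2], 4: []}
    for node, feat in distance_encoding(adj, [0, 3], max_dist=3).items():
        print(node, feat)
    # Node 1 prints [0, 1, 1, 0, 0]: one hop from node 0, two hops from node 3.
    # Node 4 prints [0, 0, 0, 0, 2]: unreachable from both endpoints.
```

In a full pipeline, these vectors would typically be concatenated with the original node features before being passed to a GNN; the sketch only computes the encodings themselves.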
