Rethinking the objectives of extractive question answering

Aug 28, 2020

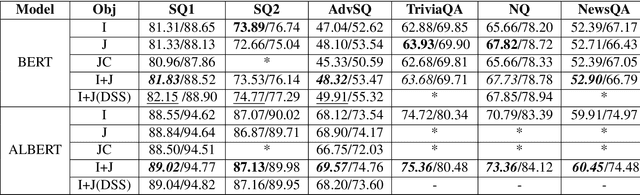

This paper describes two generally applicable approaches for significantly improving the performance of state-of-the-art extractive question answering (EQA) systems. First, contrary to common belief, it demonstrates that training with the independence assumption for span probability, $P(a_s,a_e) = P(a_s)P(a_e)$, where the span starts at position $a_s$ and ends at position $a_e$, may have adverse effects. We therefore propose a new compound objective that models the joint probability $P(a_s,a_e)$ directly while keeping the objective with the independence assumption as an auxiliary objective. Second, we show the beneficial effect of the distantly semi-supervised shared-normalization objective known from Clark and Gardner (2017). Normalizing over a set of documents similar to the golden passage and marginalizing over all ground-truth answer string positions improves the results of smaller statistical models. Our results are supported by experiments with three QA models (BiDAF, BERT, ALBERT) over six datasets. The proposed approaches do not use any additional data. Our code, analysis, pretrained models, and individual results will be available online.
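
To make the difference between the two objectives concrete, the following is a minimal sketch in plain Python, not the authors' implementation. It assumes the model yields per-token start scores, per-token end scores, and a matrix of span scores; how the span scores are produced is not stated in the abstract, so `span_scores` is a hypothetical input. Restricting the joint softmax to spans with start <= end and summing the two losses with equal weight are likewise assumptions made for illustration.

```python
import math


def log_softmax(scores):
    """Numerically stable log-softmax over a flat list of scores."""
    m = max(scores)
    log_z = m + math.log(sum(math.exp(x - m) for x in scores))
    return [x - log_z for x in scores]


def independent_loss(start_scores, end_scores, a_s, a_e):
    """Factorized objective: -log P(a_s) - log P(a_e)."""
    return -(log_softmax(start_scores)[a_s] + log_softmax(end_scores)[a_e])


def joint_loss(span_scores, a_s, a_e):
    """Joint objective: -log P(a_s, a_e) with one softmax over all spans.

    Assumption: only spans with start <= end are candidates.
    """
    n = len(span_scores)
    spans = [(i, j) for i in range(n) for j in range(i, n)]
    flat = [span_scores[i][j] for i, j in spans]
    return -log_softmax(flat)[spans.index((a_s, a_e))]


def compound_loss(start_scores, end_scores, span_scores, a_s, a_e):
    """Joint loss plus the independent loss as an auxiliary term.

    Assumption: the two terms are summed with equal weight.
    """
    return (joint_loss(span_scores, a_s, a_e)
            + independent_loss(start_scores, end_scores, a_s, a_e))
```

With three tokens and all scores set to zero, the independent loss is $2\log 3$ (two uniform distributions over three positions) and the joint loss is $\log 6$ (one uniform distribution over the six valid spans), so the compound loss is $\log 54$.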
