Rethinking Self-Supervised Learning Within the Framework of Partial Information Decomposition

Paper and Code

Dec 03, 2024

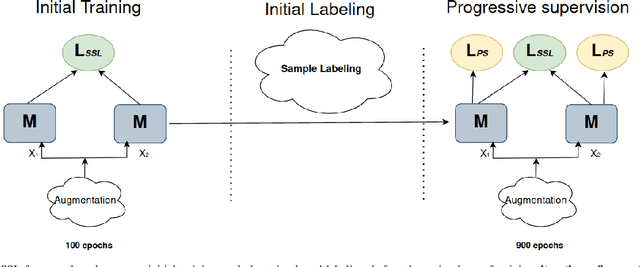

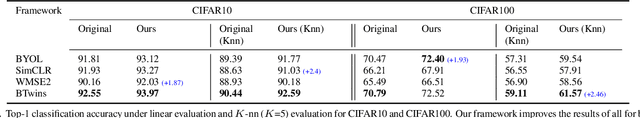

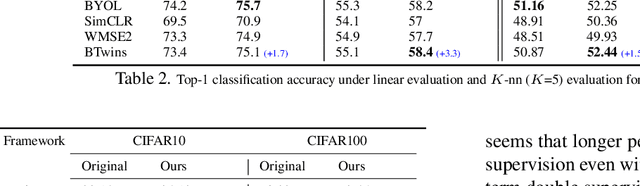

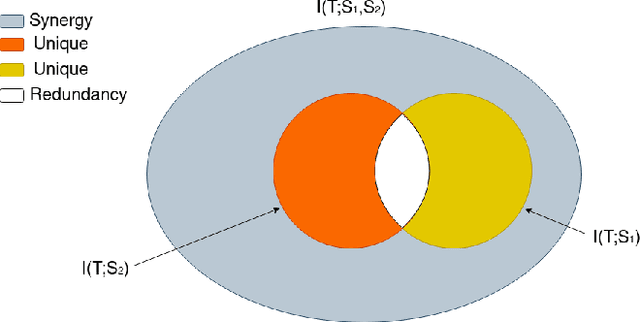

Self Supervised learning (SSL) has demonstrated its effectiveness in feature learning from unlabeled data. Regarding this success, there have been some arguments on the role that mutual information plays within the SSL framework. Some works argued for increasing mutual information between representation of augmented views. Others suggest decreasing mutual information between them, while increasing task-relevant information. We ponder upon this debate and propose to revisit the core idea of SSL within the framework of partial information decomposition (PID). Thus, with SSL under PID we propose to replace traditional mutual information with the more general concept of joint mutual information to resolve the argument. Our investigation on instantiation of SSL within the PID framework leads to upgrading the existing pipelines by considering the components of the PID in the SSL models for improved representation learning. Accordingly we propose a general pipeline that can be applied to improve existing baselines. Our pipeline focuses on extracting the unique information component under the PID to build upon lower level supervision for generic feature learning and on developing higher-level supervisory signals for task-related feature learning. In essence, this could be interpreted as a joint utilization of local and global clustering. Experiments on four baselines and four datasets show the effectiveness and generality of our approach in improving existing SSL frameworks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge