Restricted Eigenvalue from Stable Rank with Applications to Sparse Linear Regression


Feb 17, 2018

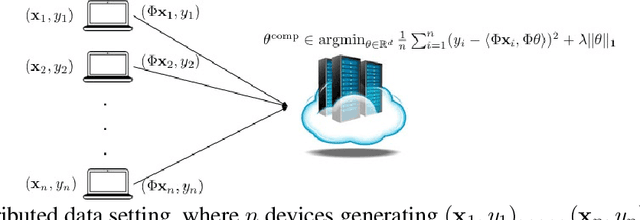

High-dimensional settings, where the data dimension ($d$) far exceeds the number of observations ($n$), are common in many statistical and machine learning applications. Methods based on $\ell_1$-relaxation, such as the Lasso, are very popular for sparse recovery in these settings. The Restricted Eigenvalue (RE) condition is among the weakest, and hence most general, conditions in the literature imposed on the Gram matrix that guarantee nice statistical properties for the Lasso estimator. It is natural to ask: what families of matrices satisfy the RE condition? Following a line of work in this area, we construct a new broad ensemble of dependent random design matrices that have an explicit RE bound. Our construction starts with a fixed (deterministic) matrix $X \in \mathbb{R}^{n \times d}$ satisfying a simple stable rank condition, and we show that a matrix drawn from the distribution $X \Phi^\top \Phi$, where $\Phi \in \mathbb{R}^{m \times d}$ is a subgaussian random matrix, satisfies the RE condition with high probability. This construction allows a fixed matrix with an easily *verifiable* condition to be incorporated into the design process, and allows for the generation of *compressed* design matrices that have a lower storage requirement than a standard design matrix. We give two applications of this construction to sparse linear regression problems, including one to a compressed sparse regression setting where the regression algorithm only has access to a compressed representation of a fixed design matrix $X$.
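The construction described above can be illustrated with a minimal NumPy sketch. Several choices here are assumptions rather than details taken from the paper: stable rank is computed with the standard definition $\|X\|_F^2 / \|X\|_2^2$, $\Phi$ is drawn with i.i.d. Gaussian entries (one example of a subgaussian matrix) scaled by $1/\sqrt{m}$, and the function names and dimensions are hypothetical. The sketch does not check the RE condition itself or the paper's precise stable rank threshold.

```python
import numpy as np


def stable_rank(X):
    """Standard stable rank: squared Frobenius norm over squared spectral norm."""
    fro_sq = np.linalg.norm(X, "fro") ** 2
    spec_sq = np.linalg.norm(X, 2) ** 2
    return fro_sq / spec_sq


def compress_design(X, m, rng):
    """Return (X Phi^T, Phi) for a random Gaussian Phi of shape (m, d).

    The 1/sqrt(m) scaling is an assumption made for this illustration.
    """
    n, d = X.shape
    Phi = rng.standard_normal((m, d)) / np.sqrt(m)
    X_compressed = X @ Phi.T  # shape (n, m)
    return X_compressed, Phi


rng = np.random.default_rng(0)
n, d, m = 50, 400, 100            # illustrative sizes with d much larger than n
X = rng.standard_normal((n, d))   # stands in for the fixed design matrix

sr = stable_rank(X)
X_compressed, Phi = compress_design(X, m, rng)

# The random design from the abstract, X Phi^T Phi, rebuilt from the compressed form.
design = X_compressed @ Phi       # shape (n, d)

print("stable rank of X:", sr)
print("compressed shape:", X_compressed.shape, "design shape:", design.shape)
```

Here `X_compressed` has $n \times m$ entries instead of the $n \times d$ entries of $X$, which is the sense in which the representation is compressed in this sketch. How $\Phi$ itself is stored or regenerated is left open.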
