Resolving Lexical Bias in Edit Scoping with Projector Editor Networks


Aug 19, 2024

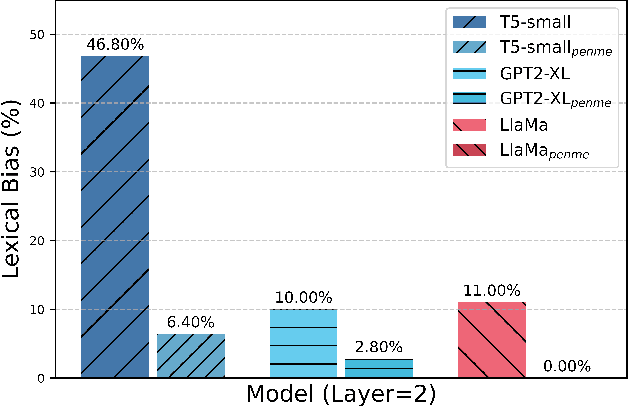

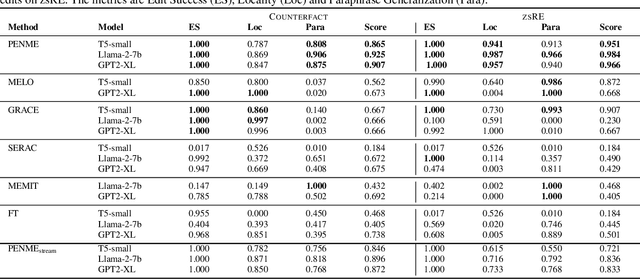

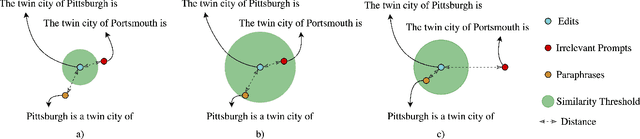

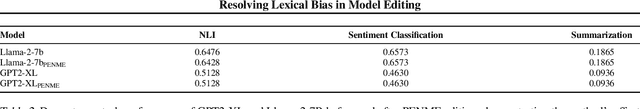

Weight-preserving model editing techniques rely heavily on a scoping mechanism that decides when to apply an edit to the base model. These scoping mechanisms use distance functions in the representation space to determine the scope of an edit. In this work, we show that distance-based scoping functions suffer from lexical bias, which leads to issues such as misfires on irrelevant prompts that share similar lexical characteristics. To address this problem, we introduce Projector Editor Networks for Model Editing (PENME), a model editing approach that employs a compact adapter with a projection network trained via a contrastive learning objective. We demonstrate that PENME achieves superior results while being compute-efficient and flexible enough to adapt across model architectures.
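To make the idea of distance-based scoping concrete, the following is a minimal sketch and not the authors' implementation. It assumes Euclidean distance, a single global threshold, and a generic pairwise contrastive loss; the names `in_scope`, `contrastive_loss`, `threshold`, and `margin` are hypothetical, and the vectors are toy values rather than real model representations.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def in_scope(query, edit_keys, threshold):
    """Return the index of the nearest stored edit key if it lies within
    `threshold` of the query representation, otherwise None (no edit fires).

    Assumption: one global threshold; a real system may tune this per edit.
    """
    best_idx, best_dist = None, float("inf")
    for i, key in enumerate(edit_keys):
        d = euclidean(query, key)
        if d < best_dist:
            best_idx, best_dist = i, d
    return best_idx if best_dist <= threshold else None


def contrastive_loss(a, b, same_scope, margin=1.0):
    """Generic pairwise contrastive loss (an assumed form, for illustration).

    Pairs in the same edit scope are pulled together; pairs in different
    scopes are pushed apart until they are at least `margin` away.
    """
    d = euclidean(a, b)
    if same_scope:
        return d ** 2
    return max(0.0, margin - d) ** 2


# Toy usage: two stored edit keys and two incoming prompt representations.
edit_keys = [[0.1, 0.0], [5.0, 5.0]]
near_prompt = [0.0, 0.0]   # close to the first key, so the edit applies
far_prompt = [2.0, 2.0]    # far from both keys, so the base model answers
matched = in_scope(near_prompt, edit_keys, threshold=0.5)
unmatched = in_scope(far_prompt, edit_keys, threshold=0.5)
```

In this sketch, a lexically similar but irrelevant prompt would misfire whenever its representation falls inside the threshold radius of a stored key. A projection trained with a loss of this kind is one way to move such prompts outside that radius before the scoping check runs.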
