Resilience of Autonomous Vehicle Object Category Detection to Universal Adversarial Perturbations


Jul 10, 2021

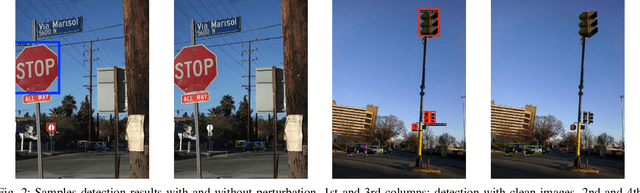

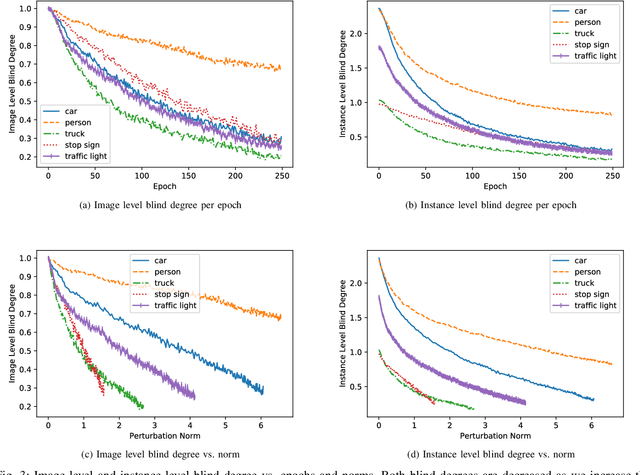

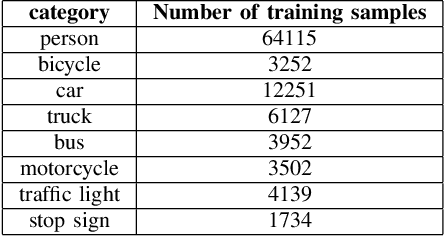

Because deep neural networks are vulnerable to adversarial examples, work on adversarial attacks and defenses has grown rapidly over the past several years. However, there seem to be some conventional views regarding adversarial attacks and object detection approaches that most researchers take for granted. In this work, we bring a fresh perspective on those procedures by evaluating the impact of universal perturbations on object detection at the class level. We apply this evaluation to a carefully curated data set related to autonomous driving. We run the Faster R-CNN object detector on images from the COCO data set covering five categories (person, car, truck, stop sign, and traffic light), perturbing the images with the Universal Dense Object Suppression algorithm. Our results indicate that the categories rank, from most to least resilient to universal perturbations, as follows: person, car, traffic light, truck, and stop sign. To the best of our knowledge, this is the first time such a ranking has been established, which is significant for the security of data sets pertaining to autonomous vehicles and for object detection in general.
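As a rough illustration of what a class-level evaluation of this kind could look like, the sketch below applies one shared perturbation to an image and ranks categories by how many detections survive. Everything in it is an assumption made for illustration: the function names, the flat pixel-list image format, the "retention rate" metric, and the placeholder counts are hypothetical. None of it is taken from the paper, which may define resilience differently.

```python
from typing import Dict, List


def apply_universal_perturbation(pixels: List[int], delta: List[int]) -> List[int]:
    """Add the same perturbation `delta` to an image and clip to the 0-255 range.

    "Universal" here means one `delta` is reused for every image, rather than
    being recomputed per image.
    """
    if len(pixels) != len(delta):
        raise ValueError("image and perturbation must have the same length")
    return [min(255, max(0, p + d)) for p, d in zip(pixels, delta)]


def retention_rate(clean: int, perturbed: int) -> float:
    """Fraction of clean-image detections that are still found after perturbation."""
    if clean <= 0:
        raise ValueError("need at least one clean detection to compute a rate")
    return perturbed / clean


def rank_by_resilience(clean_counts: Dict[str, int],
                       perturbed_counts: Dict[str, int]) -> List[str]:
    """Order categories from most to least resilient (highest retention first)."""
    rates = {
        name: retention_rate(clean_counts[name], perturbed_counts[name])
        for name in clean_counts
    }
    return sorted(rates, key=lambda name: rates[name], reverse=True)


# Placeholder counts invented only to exercise the functions above.
# They are NOT results from the paper.
clean_counts = {"class_a": 100, "class_b": 80, "class_c": 50}
perturbed_counts = {"class_a": 40, "class_b": 60, "class_c": 5}

ranking = rank_by_resilience(clean_counts, perturbed_counts)
print(ranking)
```

In a real experiment the per-category counts would come from running the detector on clean and perturbed versions of the same images. The ratio is used here instead of raw counts so that categories with many instances do not dominate the ranking.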
