Residual Attention Based Network for Automatic Classification of Phonation Modes

Jul 18, 2021

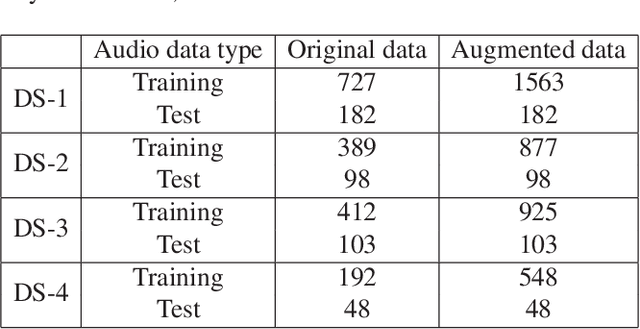

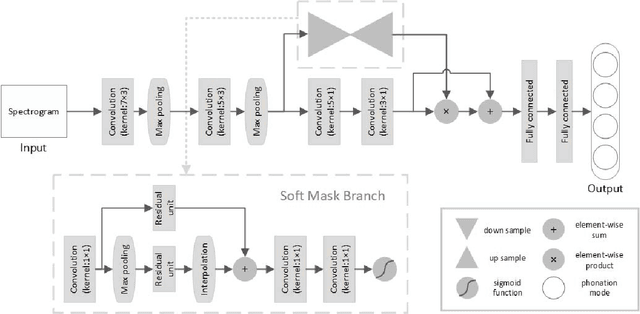

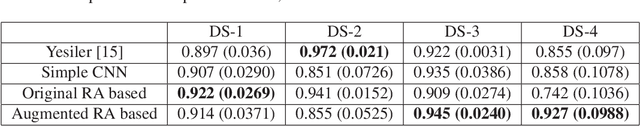

Phonation mode is an essential characteristic of singing style as well as an important means of expression in performance. It can be classified into four categories: neutral, breathy, pressed and flow. Previous studies relied on voice quality features and feature engineering for classification. While deep learning has achieved significant progress in other fields of music information retrieval (MIR), there have been few attempts to apply it to the classification of phonation modes. In this study, a Residual Attention based network is proposed for automatic classification of phonation modes. The network consists of a convolutional network that performs feature processing and a soft mask branch that enables the network to focus on a specific area. In comparison experiments, models using the proposed network achieve better results than previous works on three of the four datasets, among which the highest classification accuracy is 94.58%, 2.29% higher than the baseline.
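The abstract does not spell out how the convolutional features and the soft mask are combined. As a rough illustration only, the sketch below assumes the usual residual attention formulation, in which a mask M with values in (0, 1) rescales trunk features T as (1 + M) * T, so that the mask emphasizes regions without erasing the original features. The function names and the toy values are hypothetical and are not taken from the paper or its code.

```python
import math


def sigmoid(z):
    """Squash a mask logit into the open interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def residual_attention(trunk, mask_logits):
    """Combine trunk features with a soft mask as (1 + M) * T.

    trunk       -- 2D list of feature values (assumed output of the conv branch)
    mask_logits -- 2D list of the same shape (assumed output of the mask branch)
    """
    if len(trunk) != len(mask_logits):
        raise ValueError("trunk and mask must have the same number of rows")
    out = []
    for t_row, m_row in zip(trunk, mask_logits):
        if len(t_row) != len(m_row):
            raise ValueError("trunk and mask rows must have the same length")
        # The "+ 1" is the residual path: even a mask near 0 keeps the feature.
        out.append([(1.0 + sigmoid(m)) * t for t, m in zip(t_row, m_row)])
    return out


# Toy 2x3 feature patch with hypothetical values.
trunk = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
# Strongly negative logits barely change a feature; strongly positive ones nearly double it.
mask_logits = [[-10.0, 0.0, 10.0], [0.0, 0.0, 0.0]]
attended = residual_attention(trunk, mask_logits)
```

With this formulation every output lies between the original feature and twice its value, which is why the mask acts as a selective emphasis rather than a hard gate.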
