Rescale-Invariant Federated Reinforcement Learning for Resource Allocation in V2X Networks

Paper and Code

May 03, 2024

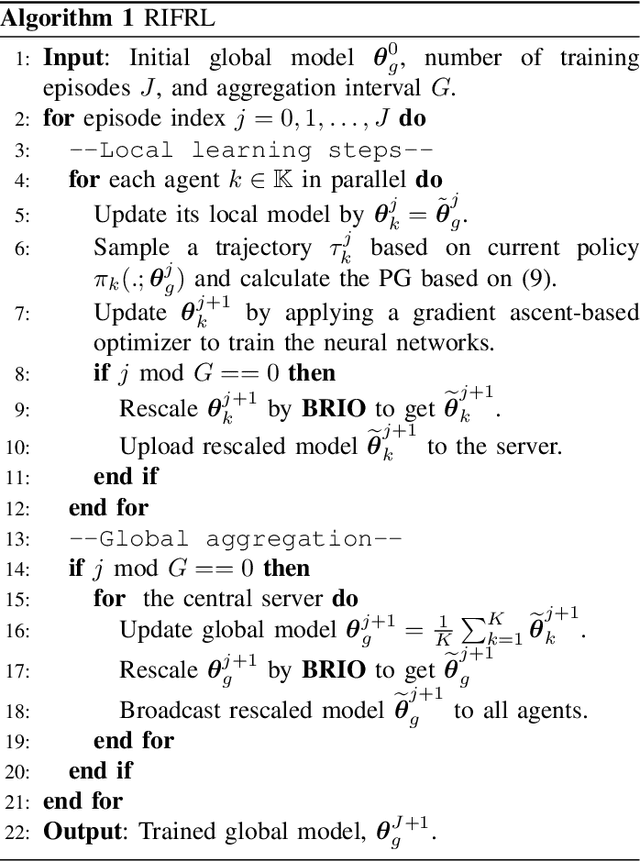

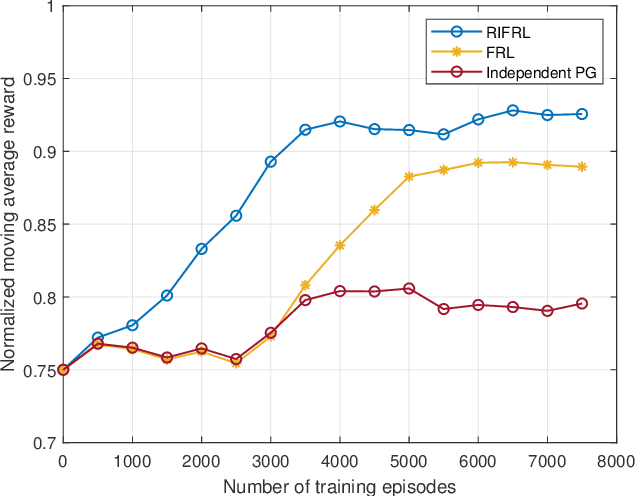

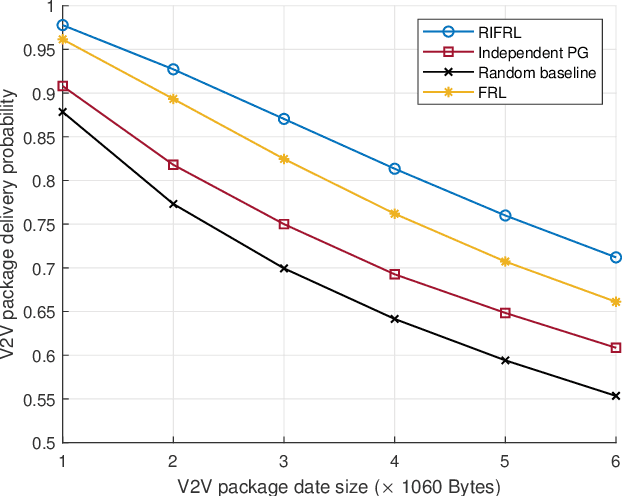

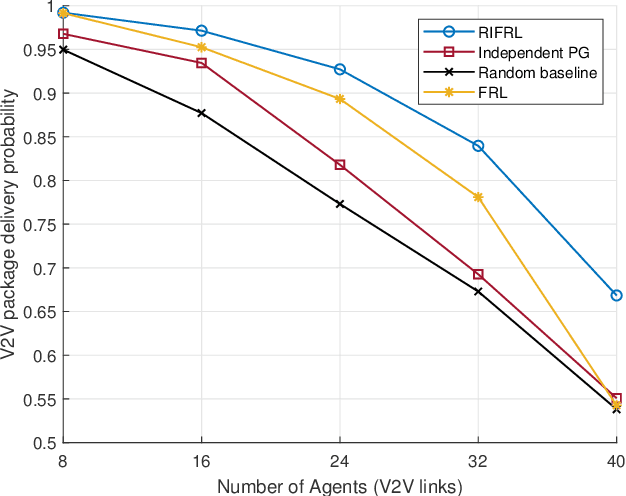

Federated Reinforcement Learning (FRL) offers a promising solution to various practical challenges in resource allocation for vehicle-to-everything (V2X) networks. However, data discrepancy among individual agents can significantly degrade the performance of FRL-based algorithms. To address this limitation, we exploit the node-wise invariance property of ReLU-activated neural networks to reduce data discrepancy and thereby improve learning performance. Based on this property, we introduce a backward rescale-invariant operation and use it to develop a rescale-invariant FRL algorithm. Simulation results demonstrate that the proposed algorithm notably improves both convergence speed and converged performance.
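The node-wise invariance property mentioned in the abstract can be illustrated with a small sketch. For a hidden ReLU node, multiplying its incoming weights and bias by a positive constant and dividing its outgoing weights by the same constant leaves the network output unchanged, because ReLU(c·z) = c·ReLU(z) for c > 0. The code below checks this numerically on a two-layer network. The function names (`forward`, `rescale_hidden_nodes`), the layer sizes, and the random data are assumptions made for illustration; this is not the paper's backward rescale-invariant operation or its FRL algorithm, only the underlying property.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def forward(W1, b1, W2, b2, x):
    """Two-layer ReLU network: W2 @ relu(W1 @ x + b1) + b2."""
    return W2 @ relu(W1 @ x + b1) + b2

def rescale_hidden_nodes(W1, b1, W2, c):
    """Hypothetical helper: scale the incoming weights and bias of hidden
    node j by c[j] > 0 and its outgoing weights by 1 / c[j]."""
    c = np.asarray(c, dtype=float)
    if np.any(c <= 0):
        raise ValueError("scaling factors must be strictly positive")
    # Row j of W1 feeds hidden node j; column j of W2 reads from it.
    return W1 * c[:, None], b1 * c, W2 / c[None, :]

# Illustrative sizes only: 4 inputs, 8 hidden nodes, 3 outputs.
rng = np.random.default_rng(0)
W1, b1 = rng.normal(size=(8, 4)), rng.normal(size=8)
W2, b2 = rng.normal(size=(3, 8)), rng.normal(size=3)
x = rng.normal(size=4)

c = rng.uniform(0.1, 10.0, size=8)  # one positive factor per hidden node
W1s, b1s, W2s = rescale_hidden_nodes(W1, b1, W2, c)

y = forward(W1, b1, W2, b2, x)
y_s = forward(W1s, b1s, W2s, b2, x)

# The weights differ, but the two networks compute the same function.
print("weights equal:", np.allclose(W1, W1s))
print("outputs equal:", np.allclose(y, y_s))
```

One consequence is that two agents can hold numerically different weights that represent the same function, so comparing or averaging parameters directly in weight space may overstate how different the agents actually are. How the proposed algorithm uses this property is described in the paper itself.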
