Representation Mixing for TTS Synthesis


Nov 24, 2018

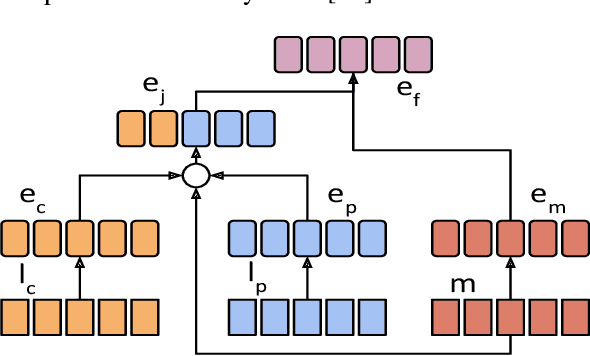

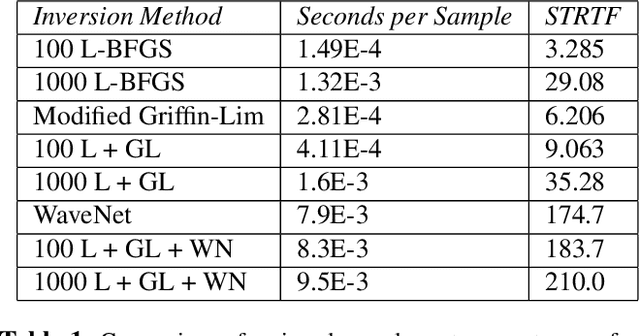

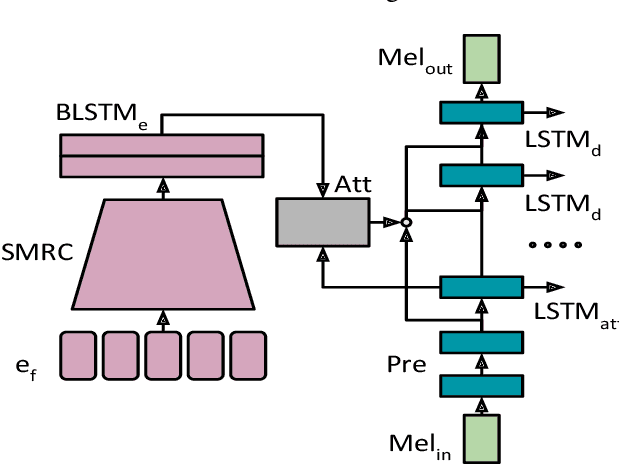

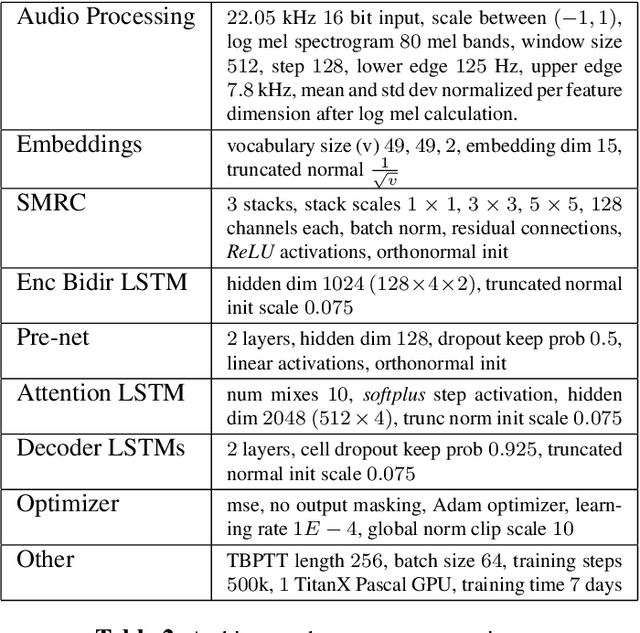

Recent character- and phoneme-based parametric TTS systems using deep learning have shown strong performance in natural speech generation. However, having to choose between character and phoneme input can seriously limit practical deployment, because direct control of pronunciation is crucial in certain cases. We demonstrate a simple method, named representation mixing, for combining multiple types of linguistic information in a single encoder. It allows a flexible choice between character, phoneme, or mixed representations during inference. Experiments and user studies on a public audiobook corpus show the efficacy of our approach.

* 5 pages, 3 figures
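
The abstract does not spell out how the single encoder combines its inputs, so the following is only a minimal sketch of one way a mixed character/phoneme sequence could be embedded. Everything in it is an assumption made for illustration: the names `mix_embed`, `CHAR_TABLE`, `PHONE_TABLE`, and `TYPE_TABLE`, the embedding size, the ARPAbet-style phoneme labels, and the choice to add a per-type vector to each symbol embedding. It should not be read as the authors' implementation.

```python
import random

EMBED_DIM = 4  # hypothetical embedding size, chosen only for the example


def make_table(symbols, dim, rng):
    """Build a randomly initialised embedding table: symbol -> vector."""
    return {s: [rng.uniform(-1.0, 1.0) for _ in range(dim)] for s in symbols}


rng = random.Random(0)
# Separate tables for each representation (assumed, not from the source).
CHAR_TABLE = make_table("abcdefghijklmnopqrstuvwxyz ", EMBED_DIM, rng)
PHONE_TABLE = make_table(["HH", "AH", "L", "OW", "W", "ER", "D", " "], EMBED_DIM, rng)
# One vector per representation type (0 = character, 1 = phoneme), so a
# downstream encoder could tell which kind of symbol it is looking at.
TYPE_TABLE = make_table([0, 1], EMBED_DIM, rng)


def mix_embed(tokens, mask):
    """Embed a sequence that may mix characters and phonemes.

    tokens: list of symbols (characters or phoneme labels)
    mask:   list of 0/1 flags, 0 = character, 1 = phoneme
    """
    if len(tokens) != len(mask):
        raise ValueError("tokens and mask must have the same length")
    embedded = []
    for tok, m in zip(tokens, mask):
        table = PHONE_TABLE if m == 1 else CHAR_TABLE
        symbol_vec = table[tok]
        type_vec = TYPE_TABLE[m]
        # Sum the symbol embedding and the type embedding element-wise.
        embedded.append([s + t for s, t in zip(symbol_vec, type_vec)])
    return embedded


# "hello " given as characters, "world" given as phonemes.
tokens = list("hello") + [" "] + ["W", "ER", "L", "D"]
mask = [0] * 6 + [1] * 4
embedded = mix_embed(tokens, mask)
```

Under these assumptions, setting a word's mask entries to 1 and supplying its phonemes gives direct control over that word's pronunciation, while the rest of the sentence can stay as plain characters.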
