Representation Learning for Networks in Biology and Medicine: Advancements, Challenges, and Opportunities

Apr 11, 2021

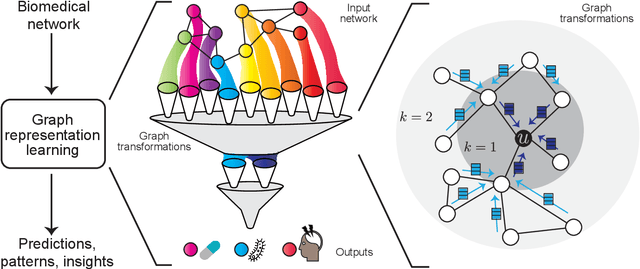

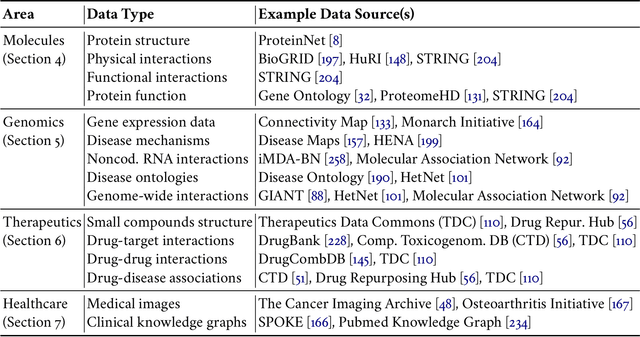

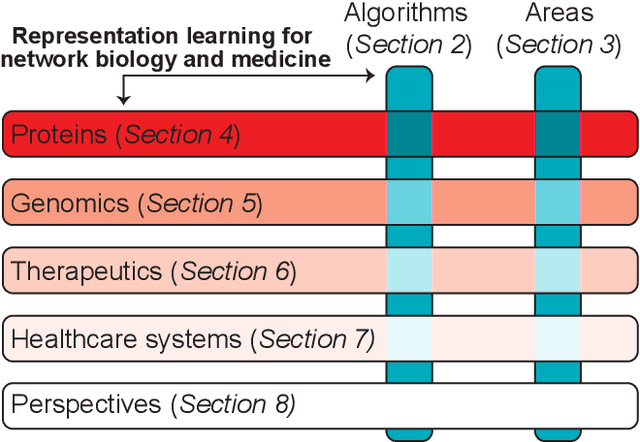

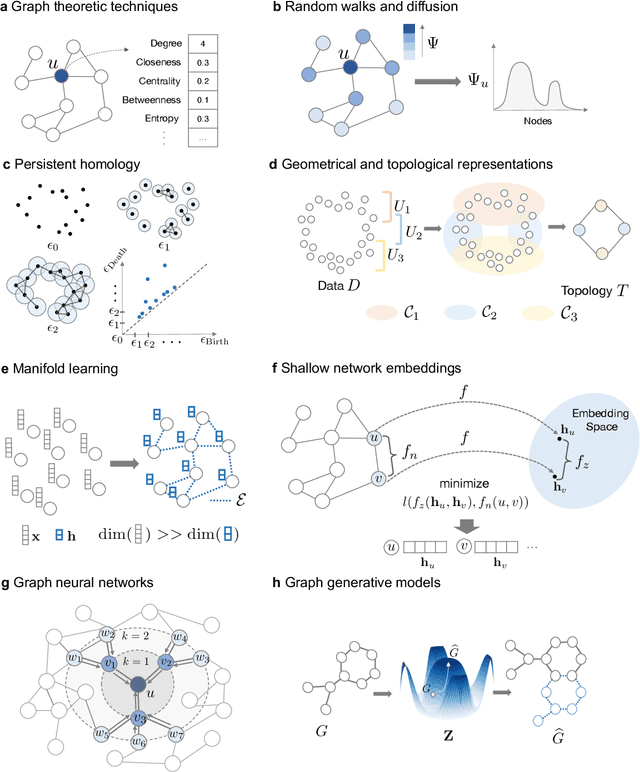

With the remarkable success of representation learning in providing powerful predictions and data insights, we have witnessed a rapid expansion of representation learning techniques into modeling, analysis, and learning with networks. Biomedical networks are universal descriptors of systems of interacting elements, from protein interactions to disease networks, all the way to healthcare systems and scientific knowledge. In this review, we put forward an observation that long-standing principles of network biology and medicine -- while often unspoken in machine learning research -- can provide the conceptual grounding for representation learning, explain its current successes and limitations, and inform future advances. We synthesize a spectrum of algorithmic approaches that, at their core, leverage topological features to embed networks into compact vector spaces. We also provide a taxonomy of biomedical areas that are likely to benefit most from algorithmic innovation. Representation learning techniques are becoming essential for identifying causal variants underlying complex traits, disentangling behaviors of single cells and their impact on health, and diagnosing and treating diseases with safe and effective medicines.
