Representation Learning and Identity Adversarial Training for Facial Behavior Understanding

Paper and Code

Jul 15, 2024

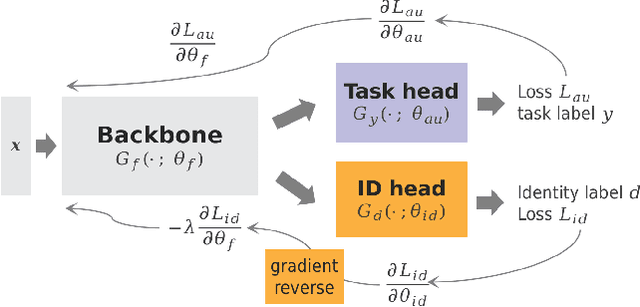

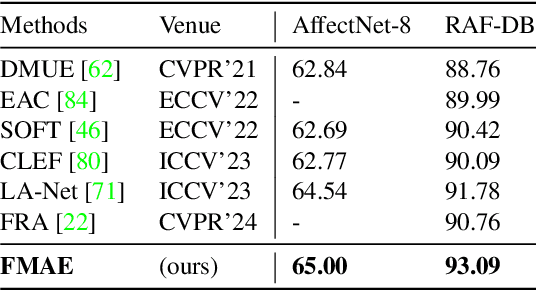

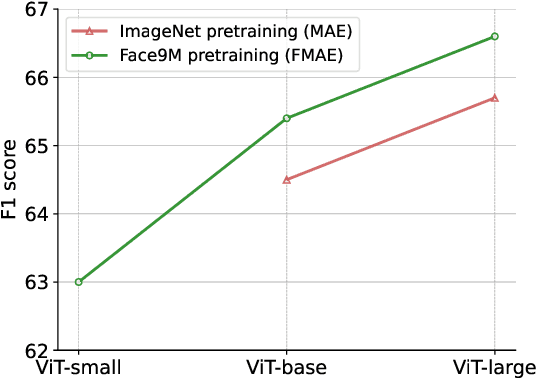

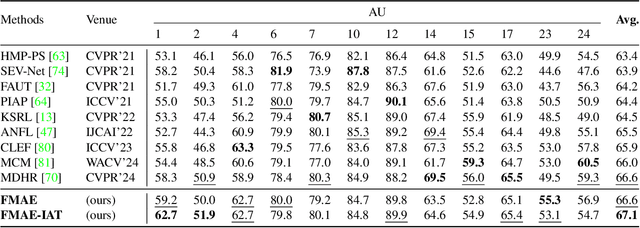

Facial Action Unit (AU) detection has gained significant research attention as AUs contain complex expression information. In this paper, we unpack two fundamental factors in AU detection: data and subject identity regularization, respectively. Motivated by recent advances in foundation models, we highlight the importance of data and collect a diverse dataset Face9M, comprising 9 million facial images, from multiple public resources. Pretraining a masked autoencoder on Face9M yields strong performance in AU detection and facial expression tasks. We then show that subject identity in AU datasets provides a shortcut learning for the model and leads to sub-optimal solutions to AU predictions. To tackle this generic issue of AU tasks, we propose Identity Adversarial Training (IAT) and demonstrate that a strong IAT regularization is necessary to learn identity-invariant features. Furthermore, we elucidate the design space of IAT and empirically show that IAT circumvents the identity shortcut learning and results in a better solution. Our proposed methods, Facial Masked Autoencoder (FMAE) and IAT, are simple, generic and effective. Remarkably, the proposed FMAE-IAT approach achieves new state-of-the-art F1 scores on BP4D (67.1\%), BP4D+ (66.8\%), and DISFA (70.1\%) databases, significantly outperforming previous work. We release the code and model at https://github.com/forever208/FMAE-IAT, the first open-sourced facial model pretrained on 9 million diverse images.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge