Representation Based Complexity Measures for Predicting Generalization in Deep Learning


Dec 04, 2020

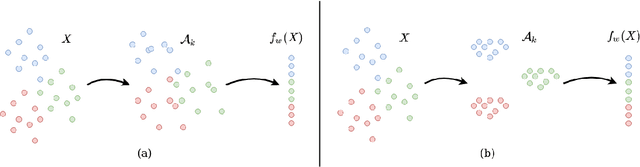

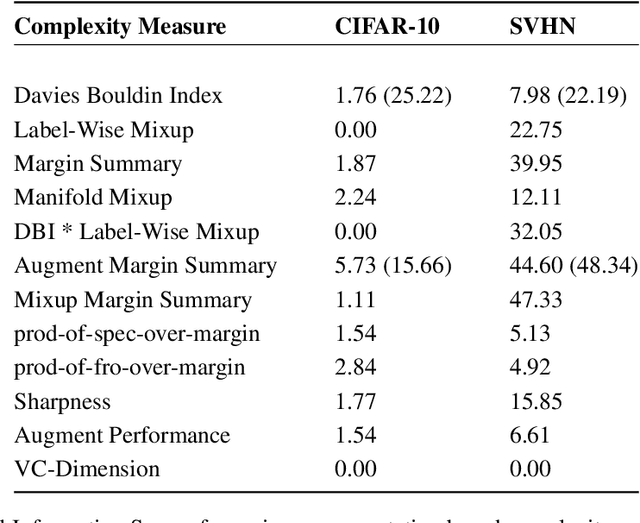

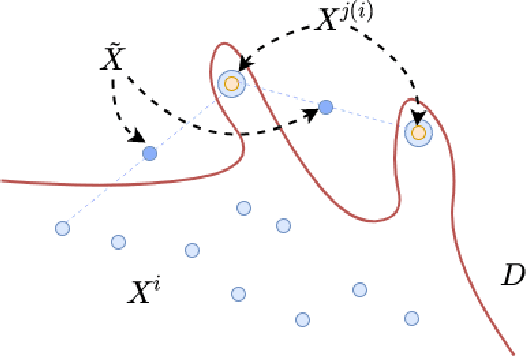

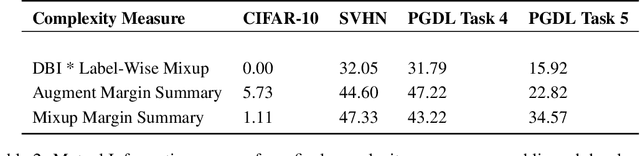

Deep neural networks can generalize despite being significantly overparametrized. Recent research has examined this phenomenon from several viewpoints, such as norm-based, PAC-Bayes-based, and margin-based analyses, and has used them to derive bounds on the generalization error or measures predictive of the generalization gap. In this work, we interpret generalization from the perspective of the quality of the internal representations of deep neural networks, drawing on neuroscientific theories of how the human visual system creates invariant and untangled object representations. Instead of providing theoretical bounds, we demonstrate practical complexity measures that can be computed ad hoc to uncover generalization behaviour in deep models. We also provide a detailed description of our solution that won the competition on Predicting Generalization in Deep Learning held at NeurIPS 2020. An implementation of our solution is available at https://github.com/parthnatekar/pgdl.
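The abstract does not say which representation-based measures are used, so the following is only an illustrative sketch of what such a measure could look like. It assumes that hidden-layer activations have already been extracted as plain feature vectors, and it scores how well they separate by class label using the Davies-Bouldin index, a standard cluster-quality score (lower means tighter, better-separated classes). The function name `davies_bouldin`, the choice of this index, and the toy inputs are assumptions made for illustration, not the authors' implementation.

```python
import math
from collections import defaultdict


def davies_bouldin(features, labels):
    """Davies-Bouldin index of feature vectors grouped by class label.

    Hypothetical helper: `features` is a list of equal-length numeric
    vectors (e.g. activations of one hidden layer), `labels` the class
    label of each vector. Lower values mean more compact, better
    separated classes. Assumes no two class centroids coincide.
    """
    groups = defaultdict(list)
    for vec, lab in zip(features, labels):
        groups[lab].append(vec)
    if len(groups) < 2:
        raise ValueError("need at least two classes")

    centroids = {}
    scatter = {}
    for lab, vecs in groups.items():
        dim = len(vecs[0])
        # Class centroid: coordinate-wise mean of the class's vectors.
        centroid = [sum(v[d] for v in vecs) / len(vecs) for d in range(dim)]
        centroids[lab] = centroid
        # Within-class scatter: mean Euclidean distance to the centroid.
        scatter[lab] = sum(math.dist(v, centroid) for v in vecs) / len(vecs)

    total = 0.0
    for i in groups:
        # Worst-case similarity of class i to any other class j.
        total += max(
            (scatter[i] + scatter[j]) / math.dist(centroids[i], centroids[j])
            for j in groups
            if j != i
        )
    return total / len(groups)


# Toy activations: two compact, distant classes vs. two spread-out, close ones.
tight = davies_bouldin([[0, 0], [0, 1], [10, 0], [10, 1]], [0, 0, 1, 1])
loose = davies_bouldin([[0, 0], [0, 4], [2, 0], [2, 4]], [0, 0, 1, 1])
```

In this toy setting `tight` is smaller than `loose`, matching the intuition that cleanly separated class representations receive a lower score. Whether such a score tracks the generalization gap of a real model is an empirical question that this sketch does not address.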
