REMuS-GNN: A Rotation-Equivariant Model for Simulating Continuum Dynamics


May 05, 2022

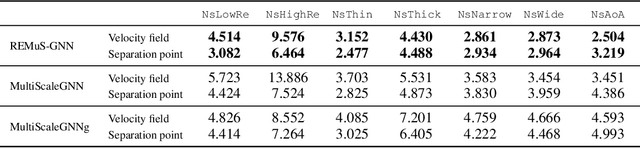

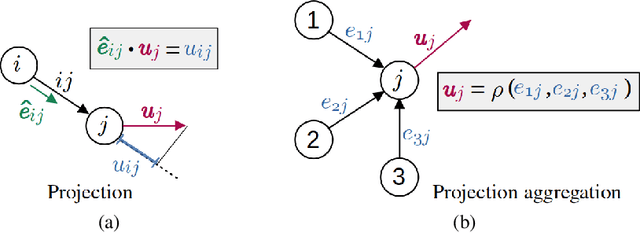

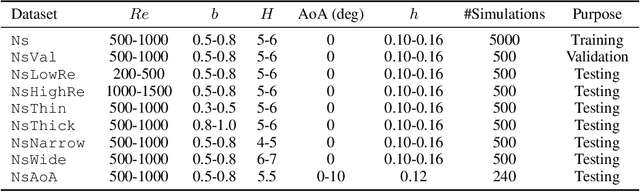

Numerical simulation is an essential tool in many areas of science and engineering, but its computational cost often limits its use in practice, particularly when exploring large parameter spaces. Surrogate deep learning models accelerate simulations, but they often suffer from poor accuracy and limited ability to generalise. To improve on both, we introduce REMuS-GNN, a rotation-equivariant multi-scale model for simulating continuum dynamical systems encompassing a range of length scales. REMuS-GNN is designed to predict an output vector field from an input vector field on a physical domain discretised into an unstructured set of nodes. Equivariance to rotations of the domain is a desirable inductive bias that allows the network to learn the underlying physics more efficiently, leading to improved accuracy and generalisation compared with similar architectures that lack this symmetry. We demonstrate and evaluate the method on incompressible flow around elliptical cylinders.
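To make the equivariance property concrete, the sketch below checks it numerically on a toy example: rotating the input field and node positions and then applying a layer should give the same result as applying the layer and then rotating its output. The layer here is a hypothetical single message-passing step built from vector projections onto edge directions; it is an illustrative assumption and not the REMuS-GNN architecture, and the names `toy_equivariant_layer`, `equivariance_error`, and the weights `w` are invented for this example.

```python
import math
import random


def rotate(vec, theta):
    """Rotate a 2D vector counter-clockwise by theta radians."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = vec
    return (c * x - s * y, s * x + c * y)


def toy_equivariant_layer(pos, u, edges, w=(0.7, -0.3)):
    """Hypothetical layer (not the paper's): one message-passing step.

    pos   -- list of node positions (x, y)
    u     -- list of input vectors, one per node
    edges -- list of (receiver, sender) index pairs
    w     -- two fixed scalar weights standing in for learned parameters
    """
    out = [[0.0, 0.0] for _ in pos]
    for recv, send in edges:
        dx = pos[send][0] - pos[recv][0]
        dy = pos[send][1] - pos[recv][1]
        dist = math.hypot(dx, dy)          # rotation-invariant edge length
        ex, ey = dx / dist, dy / dist      # unit edge direction (rotates with the domain)
        # Projection of the sender's vector onto the edge: a rotation-invariant scalar.
        proj = u[send][0] * ex + u[send][1] * ey
        msg = math.tanh(w[0] * proj + w[1] * dist)
        # Re-expand the invariant scalar along the edge direction and accumulate.
        out[recv][0] += msg * ex
        out[recv][1] += msg * ey
    return [tuple(v) for v in out]


def equivariance_error(theta=0.9, n=6, seed=0):
    """Max absolute difference between rotate-then-apply and apply-then-rotate."""
    rng = random.Random(seed)
    pos = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n)]
    u = [(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n)]
    edges = [(i, j) for i in range(n) for j in range(n) if i != j]

    rotated_first = toy_equivariant_layer(
        [rotate(p, theta) for p in pos], [rotate(v, theta) for v in u], edges
    )
    rotated_last = [rotate(v, theta) for v in toy_equivariant_layer(pos, u, edges)]

    return max(
        abs(a - b)
        for va, vb in zip(rotated_first, rotated_last)
        for a, b in zip(va, vb)
    )


if __name__ == "__main__":
    print(equivariance_error())
```

The error should be at the level of floating-point round-off. The layer only ever processes rotation-invariant scalars (projections and distances) and re-expands them along directions that rotate with the domain, which is one common way to obtain this symmetry by construction.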
