Reliable Identification of Redundant Kernels for Convolutional Neural Network Compression


Dec 10, 2018

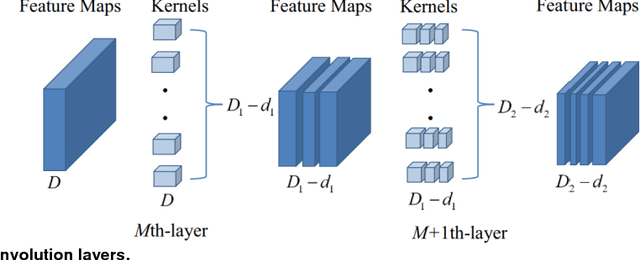

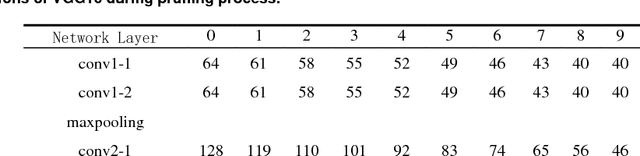

To compress deep convolutional neural networks (CNNs) with large memory footprints and long inference times, this paper proposes a novel pruning criterion based on the layer-wise Ln-norm of feature maps. Unlike existing pruning criteria, which are mainly based on the L1-norm of convolution kernels, the proposed method uses the Ln-norm of the output feature maps after the non-linear activations, where n varies by layer, increasing from 1 at the first convolution layer to infinity at the last convolution layer. Because it accurately identifies unimportant convolution kernels, the proposed method achieves a good balance between model size and inference accuracy. Experiments on ImageNet and a successful application in a railway surveillance system show that the proposed method outperforms existing kernel-norm-based methods and is generally applicable to any deep neural network with convolution operations.
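The criterion described in the abstract can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function names (`norm_orders`, `feature_map_scores`, `kernels_to_prune`), the linear schedule for n, and the fixed pruning ratio are all assumptions made for illustration. The abstract only states that n grows from 1 at the first convolution layer to infinity at the last.

```python
import numpy as np

def norm_orders(num_layers):
    """Hypothetical schedule: n = 1, 2, ... for early layers, inf for the last.

    The abstract only says n increases from 1 to infinity; the linear
    steps in between are an assumption. Expects num_layers >= 2.
    """
    return [float(i) for i in range(1, num_layers)] + [np.inf]

def feature_map_scores(fmap, n):
    """Score each output channel by the L_n norm of its activations.

    fmap: post-activation feature maps, shape (batch, channels, height, width).
    n: norm order; np.inf selects the max norm.
    """
    channels = fmap.shape[1]
    # Gather all activations of each channel into one row.
    flat = np.abs(fmap).transpose(1, 0, 2, 3).reshape(channels, -1)
    if np.isinf(n):
        return flat.max(axis=1)
    return (flat ** n).sum(axis=1) ** (1.0 / n)

def kernels_to_prune(fmap, n, ratio):
    """Return the indices of the lowest-scoring kernels (assumed fixed ratio)."""
    scores = feature_map_scores(fmap, n)
    k = int(ratio * scores.size)
    return np.sort(np.argsort(scores)[:k])

# Toy feature maps: one sample, three channels, 2x2 spatial size.
fmap = np.array([[
    [[0.0, 0.0], [0.0, 3.0]],   # one strong, sparse response
    [[1.0, 1.0], [1.0, 1.0]],   # weak but dense response
    [[0.0, 0.0], [0.0, 0.5]],   # weak, sparse response
]])

early = kernels_to_prune(fmap, n=1, ratio=0.67)
late = kernels_to_prune(fmap, n=np.inf, ratio=0.67)
```

On this toy input the two norm orders disagree about which kernels are redundant: the L1-norm favors the dense channel, while the infinity norm favors the channel with the single strong response. That difference is the intuition behind varying n with depth.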
