Relational world knowledge representation in contextual language models: A review


Apr 12, 2021

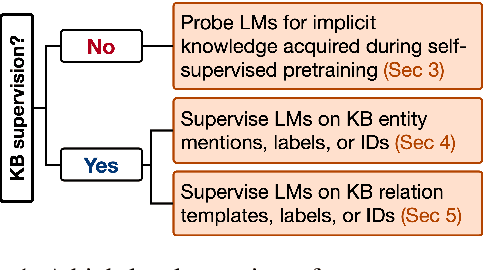

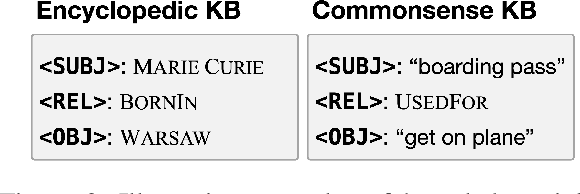

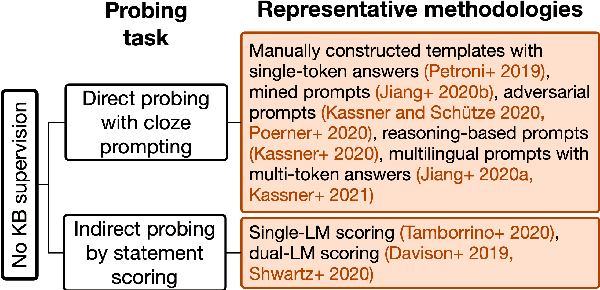

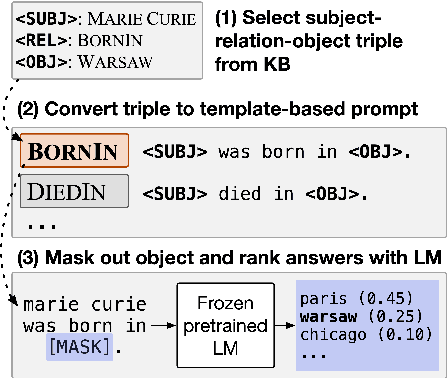

Relational knowledge bases (KBs) are established tools for world knowledge representation in machines. While they are advantageous for their precision and interpretability, they usually sacrifice some data-modeling flexibility to achieve these advantages, because they adhere to a manually engineered schema. In this review, we take a natural language processing perspective on the limitations of KBs, examining how they may be addressed in part by training neural contextual language models (LMs) to internalize and express relational knowledge in free-text form. We propose a novel taxonomy for relational knowledge representation in contextual LMs based on the level of KB supervision provided. It covers both works that probe LMs for implicit relational knowledge acquired during self-supervised pretraining on unstructured text alone, and works that explicitly supervise LMs at the level of KB entities and/or relations. We conclude that LMs and KBs are complementary representation tools, as KBs provide a high standard of factual precision which can in turn be flexibly and expressively modeled by LMs, and we offer suggestions for future research in this direction.
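
To make the "probing" idea concrete, here is a minimal sketch in the style of cloze-based probes such as LAMA, where a KB triple (subject, relation, object) is rewritten as a natural-language statement with the object masked, and the LM is scored on whether it recovers the object (precision@1). The relation names, templates, and the `toy_lm` function are hypothetical stand-ins chosen for illustration; a real probe would instead take the top-ranked token from a masked LM's prediction at the `[MASK]` position.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass(frozen=True)
class Triple:
    """A relational KB fact: (subject, relation, object)."""
    subject: str
    relation: str
    obj: str


# Hypothetical cloze templates, one per relation.
# [X] is filled with the subject; [Y] marks the object slot to be masked.
TEMPLATES = {
    "capital_of": "[X] is the capital of [Y].",
    "born_in": "[X] was born in [Y].",
}

MASK = "[MASK]"


def to_cloze(triple: Triple) -> str:
    """Turn a KB triple into a free-text query with the object masked out."""
    template = TEMPLATES[triple.relation]
    return template.replace("[X]", triple.subject).replace("[Y]", MASK)


def precision_at_1(triples: Sequence[Triple], predict: Callable[[str], str]) -> float:
    """Fraction of triples whose masked object the model predicts exactly."""
    if not triples:
        return 0.0
    hits = sum(predict(to_cloze(t)) == t.obj for t in triples)
    return hits / len(triples)


# Usage with a toy lookup table standing in for an LM (hypothetical answers).
kb = [
    Triple("Paris", "capital_of", "France"),
    Triple("Dante", "born_in", "Florence"),
]
toy_answers = {
    "Paris is the capital of [MASK].": "France",
    "Dante was born in [MASK].": "Rome",  # deliberately wrong
}


def toy_lm(prompt: str) -> str:
    return toy_answers.get(prompt, "")


print(to_cloze(kb[0]))             # Paris is the capital of [MASK].
print(precision_at_1(kb, toy_lm))  # 0.5
```

Keeping the model behind a plain `predict` callable keeps the KB-to-text conversion and the scoring independent of any particular LM library, which mirrors the review's framing of KBs as the precise reference against which an LM's free-text knowledge is measured.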
