Reinforcement Learning for Legged Robots: Motion Imitation from Model-Based Optimal Control


May 18, 2023

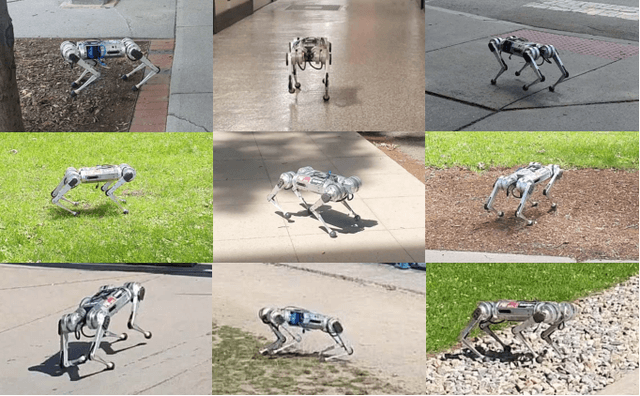

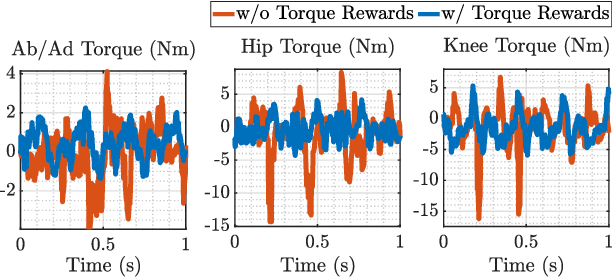

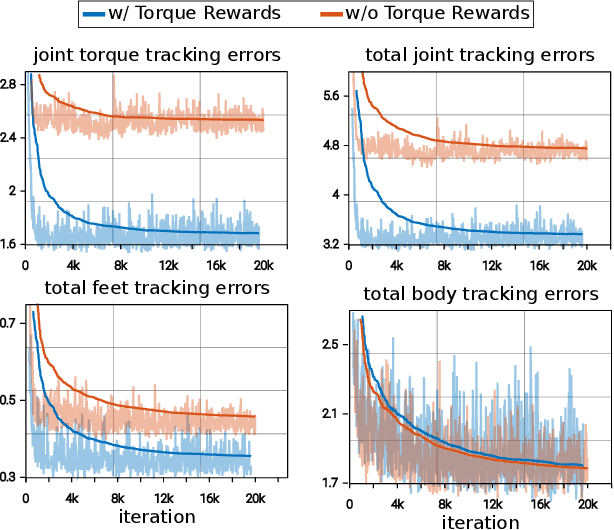

We propose MIMOC: Motion Imitation from Model-Based Optimal Control. MIMOC is a Reinforcement Learning (RL) controller that learns agile locomotion by imitating reference trajectories from model-based optimal control. MIMOC mitigates challenges faced by other motion imitation RL approaches because the references are dynamically consistent, require no motion retargeting, and include torque references. Hence, MIMOC does not require fine-tuning. MIMOC is also less sensitive to modeling and state estimation inaccuracies than model-based controllers. We validate MIMOC on the Mini-Cheetah in outdoor environments over a wide variety of challenging terrain, and on the MIT Humanoid in simulation. We show cases where MIMOC outperforms model-based optimal controllers, and show that imitating torque references improves the policy's performance.
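To illustrate what imitating both motion and torque references could look like, here is a minimal sketch of an imitation reward. It is not the reward used in MIMOC: the exponential tracking form, the function names `tracking_term` and `imitation_reward`, and all weights and scales are assumptions chosen for illustration. The only idea taken from the abstract is that the policy is rewarded for matching the reference joint torques in addition to the reference motion.

```python
import math
from typing import Sequence


def tracking_term(actual: Sequence[float], reference: Sequence[float], scale: float) -> float:
    """Return exp(-scale * squared error), which lies in (0, 1] and equals 1 for perfect tracking."""
    if len(actual) != len(reference):
        raise ValueError("actual and reference must have the same length")
    squared_error = sum((a - r) ** 2 for a, r in zip(actual, reference))
    return math.exp(-scale * squared_error)


def imitation_reward(
    q: Sequence[float],        # measured joint positions [rad]
    q_ref: Sequence[float],    # reference joint positions from the optimal controller [rad]
    tau: Sequence[float],      # applied joint torques [N*m]
    tau_ref: Sequence[float],  # reference joint torques from the optimal controller [N*m]
    w_q: float = 0.7,          # hypothetical weight on the motion term
    w_tau: float = 0.3,        # hypothetical weight on the torque term
    k_q: float = 5.0,          # hypothetical sensitivity to position error
    k_tau: float = 0.01,       # hypothetical sensitivity to torque error
) -> float:
    """Weighted sum of a joint-position tracking term and a torque tracking term."""
    return w_q * tracking_term(q, q_ref, k_q) + w_tau * tracking_term(tau, tau_ref, k_tau)


if __name__ == "__main__":
    q_ref, tau_ref = [0.1, -0.4, 0.8], [2.0, -5.0, 7.5]
    # Same motion error in both cases; only the torque error differs.
    close = imitation_reward([0.12, -0.4, 0.8], q_ref, [2.0, -5.0, 7.5], tau_ref)
    far = imitation_reward([0.12, -0.4, 0.8], q_ref, [6.0, -1.0, 3.0], tau_ref)
    print(close > far)
```

Under these assumptions, two rollouts with identical motion error are ranked by how closely their torques follow the reference, so the torque term supplies a learning signal that a motion-only reward would not.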
