Regularized K-means through hard-thresholding


Oct 02, 2020

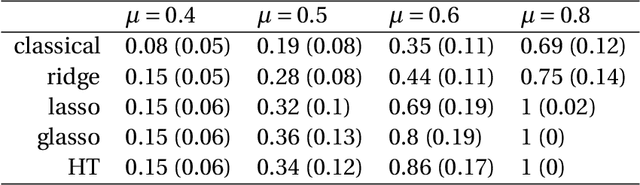

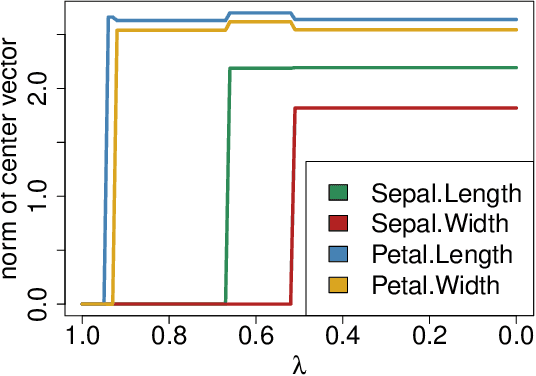

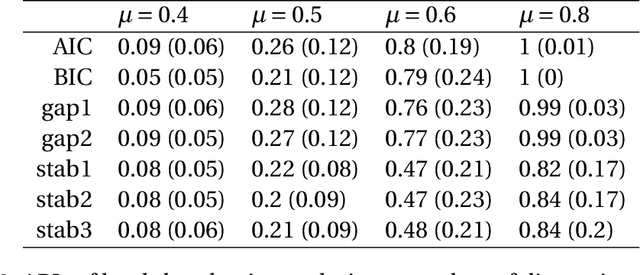

We study a framework of regularized $K$-means methods based on direct penalization of the size of the cluster centers. Different penalization strategies are considered and compared through simulation and theoretical analysis. Based on the results, we propose HT $K$-means, which uses an $\ell_0$ penalty to induce sparsity in the variables. Different techniques for selecting the tuning parameter are discussed and compared. The proposed method compares favorably with the most popular regularized $K$-means methods in an extensive simulation study. Finally, HT $K$-means is applied to several real data examples. Graphical displays are presented and used in these examples to gain more insight into the datasets.
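To make the idea of hard-thresholding the cluster centers concrete, here is a minimal sketch in Python. It is not the authors' implementation. It assumes an objective of the form $\frac{1}{n}\sum_i \|x_i - \mu_{c(i)}\|^2 + \lambda \cdot \#\{j : \mu_{\cdot j} \neq 0\}$, where $\mu_{\cdot j}$ collects the $j$-th coordinate of all $K$ centers, and it assumes the columns of the data have been centered so that a zeroed variable means "no cluster separation". Under that assumption, for fixed assignments, keeping variable $j$ lowers the fit term by $\frac{1}{n}\sum_k n_k \bar{x}_{kj}^2$, so the variable is set to zero whenever that gain does not exceed $\lambda$. The function name `ht_kmeans_sketch` and its parameters (`init_centers`, `lam`, `n_iter`) are hypothetical.

```python
import numpy as np

def ht_kmeans_sketch(X, init_centers, lam, n_iter=50):
    """Lloyd-style iterations with column-wise hard-thresholding of centers.

    Illustrative only: assumes X is column-centered and that the penalty
    is lam times the number of variables with a nonzero center column.
    """
    X = np.asarray(X, dtype=float)
    centers = np.array(init_centers, dtype=float)
    n = X.shape[0]
    k = centers.shape[0]
    labels = np.full(n, -1)

    for _ in range(n_iter):
        # Assignment step: nearest center in squared Euclidean distance.
        d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        new_labels = d2.argmin(axis=1)
        if np.array_equal(new_labels, labels):
            break  # assignments are stable, so the centers are too
        labels = new_labels

        # Update step: ordinary cluster means (empty clusters keep their center).
        counts = np.bincount(labels, minlength=k)
        means = centers.copy()
        for c in range(k):
            if counts[c] > 0:
                means[c] = X[labels == c].mean(axis=0)

        # Hard-thresholding: per variable, the drop in the fit term obtained
        # by using the cluster means instead of zero for that variable.
        gain = (counts[:, None] * means ** 2).sum(axis=0) / n
        means[:, gain <= lam] = 0.0  # variable is not worth the penalty
        centers = means

    return centers, labels
```

Calling `ht_kmeans_sketch(X, init_centers, lam)` returns the thresholded centers and the cluster labels; variables whose center column is entirely zero play no role in separating the clusters. The sketch takes the starting centers as an argument because, like ordinary $K$-means, the result depends on initialization, and it says nothing about how to choose `lam`.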
