RefineLoc: Iterative Refinement for Weakly-Supervised Action Localization

Paper and Code

Mar 30, 2019

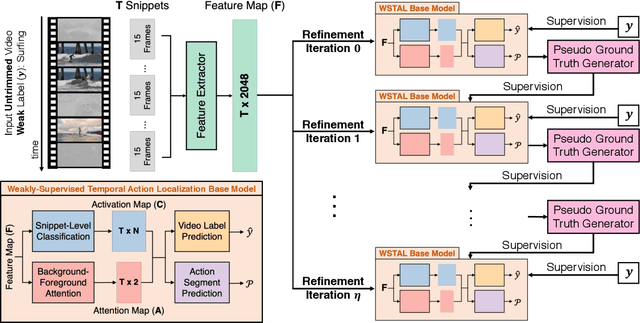

Video action detectors are usually trained on video datasets with fully supervised temporal annotations. Building such datasets is expensive. To alleviate this problem, recent algorithms leverage weak labelling, where videos are untrimmed and only a video-level label is available. In this paper, we propose RefineLoc, a new method for weakly-supervised temporal action localization. RefineLoc follows an iterative refinement approach: at every iteration it estimates snippet-level pseudo ground truth and then trains on it. We show the benefit of this iterative approach and present an extensive analysis of different pseudo ground truth generators. We demonstrate the effectiveness of our model on two standard action datasets, ActivityNet v1.2 and THUMOS14. Equipped with a segment prediction-based pseudo ground truth generator, RefineLoc improves the state of the art in weakly-supervised temporal localization on the challenging, large-scale ActivityNet dataset by 4.2% and performs comparably to the state of the art on THUMOS14.
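The iterative refinement idea described in the abstract can be illustrated with a toy sketch. This is not the RefineLoc implementation: the abstract does not specify the model, the features, or how the first set of pseudo labels is obtained. The sketch assumes a logistic-regression snippet scorer, a fixed 0.5 threshold as the pseudo ground truth generator, and a set of noisy initial snippet scores; all function names and parameters below are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def score(snippet, weights, bias):
    """Foreground probability of one snippet (a list of feature values)."""
    return sigmoid(sum(w * x for w, x in zip(weights, snippet)) + bias)


def train_scorer(snippets, labels, lr=0.5, steps=300):
    """Fit a logistic-regression scorer on snippet-level (pseudo) labels."""
    dim = len(snippets[0])
    n = len(snippets)
    weights, bias = [0.0] * dim, 0.0
    for _ in range(steps):
        grad_w = [0.0] * dim
        grad_b = 0.0
        for x, y in zip(snippets, labels):
            err = score(x, weights, bias) - y
            for j in range(dim):
                grad_w[j] += err * x[j]
            grad_b += err
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def pseudo_labels(scores, threshold=0.5):
    """Assumed generator: threshold the previous predictions."""
    return [1.0 if s >= threshold else 0.0 for s in scores]


def refine(snippets, initial_scores, iterations=3):
    """Alternate between training on pseudo labels and re-estimating them."""
    labels = pseudo_labels(initial_scores)
    history = [labels]
    for _ in range(iterations):
        weights, bias = train_scorer(snippets, labels)
        scores = [score(x, weights, bias) for x in snippets]
        labels = pseudo_labels(scores)
        history.append(labels)
    return labels, history


# Toy usage: six snippets with one feature each and noisy initial scores.
snippets = [[0.0], [0.1], [0.9], [1.0], [0.2], [0.8]]
initial_scores = [0.2, 0.6, 0.7, 0.9, 0.1, 0.4]
labels, history = refine(snippets, initial_scores, iterations=3)
print(history)
```

Each pass retrains the scorer from scratch on the latest pseudo labels and then regenerates the labels from its predictions, so `history` records how the snippet-level labelling changes from one iteration to the next.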
