Recurrent Submodular Welfare and Matroid Blocking Bandits


Feb 28, 2021

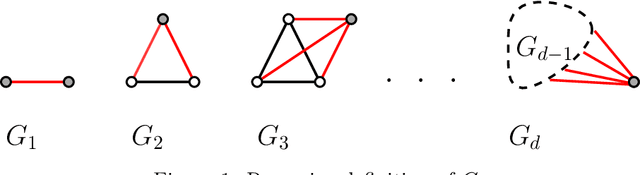

A recent line of research studies the stochastic multi-armed bandit (MAB) problem in the case where temporal correlations of a specific structure are imposed between the player's actions and the reward distributions of the arms (Kleinberg and Immorlica [FOCS18], Basu et al. [NeurIPS19]). As opposed to the standard MAB setting, where the optimal solution in hindsight can be trivially characterized, these correlations lead to (sub-)optimal solutions that exhibit interesting dynamical patterns, a phenomenon that poses new challenges from both an algorithmic and a learning perspective. In this work, we extend the above direction to a combinatorial bandit setting and study a variant of stochastic MAB where arms are subject to matroid constraints and each arm becomes unavailable (blocked) for a fixed number of rounds after each play. A natural common generalization of the state-of-the-art algorithms for blocking bandits and for matroid bandits yields a $(1-\frac{1}{e})$-approximation for partition matroids, yet it only guarantees a $\frac{1}{2}$-approximation for general matroids. In this paper, we develop new algorithmic ideas that allow us to obtain a polynomial-time $(1 - \frac{1}{e})$-approximation algorithm (asymptotically and in expectation) for any matroid, and thus to control the $(1-\frac{1}{e})$-approximate regret. A key ingredient is the technique of correlated (interleaved) scheduling. Along the way, we discover an interesting connection to a variant of Submodular Welfare Maximization, for which we provide (asymptotically) matching upper and lower approximability bounds.
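To make the problem setting concrete, the following is a minimal sketch of a matroid blocking bandit instance with known mean rewards, scheduled by a simple per-round greedy rule. It is an illustration only, not the algorithm developed in the paper, and it carries no approximation guarantee here. The function name `greedy_blocking_schedule`, the independence-oracle interface, and the convention that an arm with delay `d` can be played at most once every `d` rounds are all assumptions made for this example.

```python
from typing import Callable, Dict, FrozenSet, List


def greedy_blocking_schedule(
    means: Dict[str, float],
    delays: Dict[str, int],
    is_independent: Callable[[FrozenSet[str]], bool],
    horizon: int,
) -> List[List[str]]:
    """Per-round greedy: scan arms by descending mean, skipping blocked arms
    and arms that would break independence of the set chosen this round."""
    # First round in which each arm may be played again (assumed convention).
    next_free = {arm: 0 for arm in means}
    schedule: List[List[str]] = []
    for t in range(horizon):
        chosen: List[str] = []
        for arm in sorted(means, key=means.get, reverse=True):
            available = next_free[arm] <= t
            if available and is_independent(frozenset(chosen + [arm])):
                chosen.append(arm)
                # Arm is blocked until round t + delay.
                next_free[arm] = t + delays[arm]
        schedule.append(chosen)
    return schedule


# Hypothetical instance: three arms under a rank-1 uniform matroid
# (at most one arm per round).
means = {"a": 0.9, "b": 0.5, "c": 0.2}
delays = {"a": 3, "b": 2, "c": 1}
schedule = greedy_blocking_schedule(
    means, delays, lambda s: len(s) <= 1, horizon=6
)
print(schedule)
```

Even in this tiny instance the schedule cycles through the arms rather than repeating the best one, which is the kind of dynamical pattern the abstract refers to.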
