Recurrent Graph Tensor Networks


Oct 17, 2020

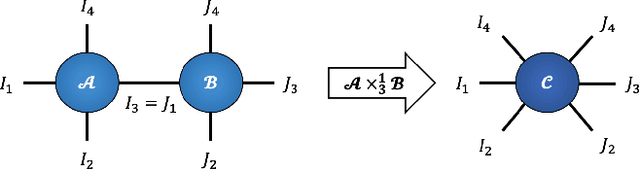

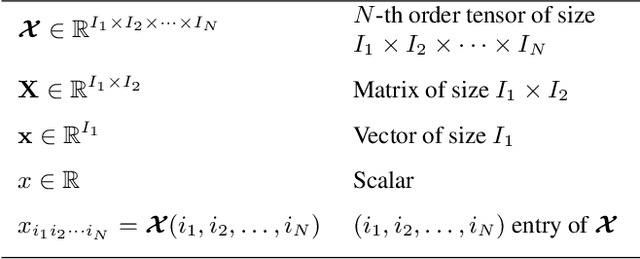

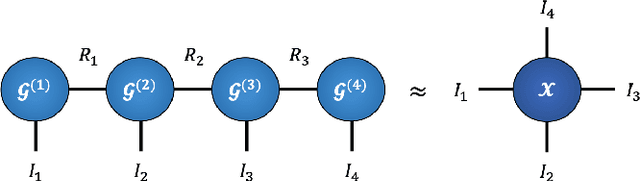

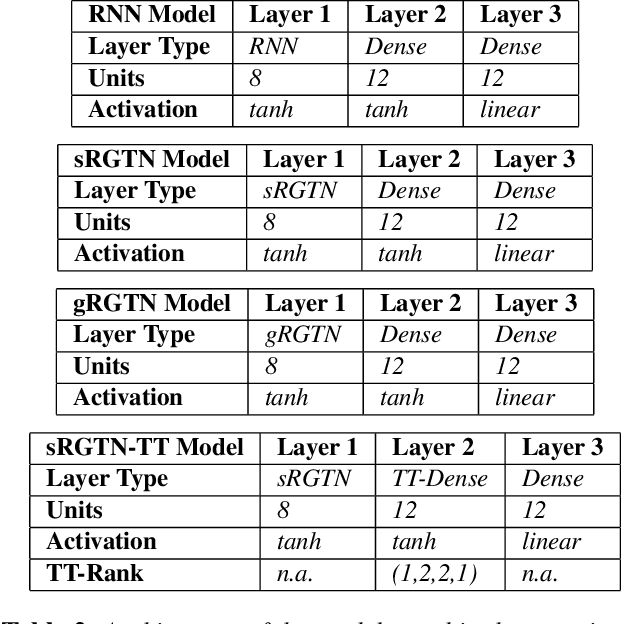

Recurrent Neural Networks (RNNs) are among the most successful machine learning models for sequence modelling. In this paper, we show that the modelling of hidden states in RNNs can be approximated through a multi-linear graph filter, which describes the directional flow of temporal information. The resulting multi-linear graph filter is then generalized to a tensor network form to improve its modelling power, yielding a novel Recurrent Graph Tensor Network (RGTN). To validate the expressive power of the derived network, several variants of the RGTN model were proposed and applied to time-series forecasting, demonstrating superior properties in terms of convergence, performance, and complexity. By leveraging the multi-modal nature of tensor networks, RGTN models were shown to outperform a standard RNN by 23% in terms of mean squared error while using up to 86% fewer parameters. These results show that, by combining the expressive power of tensor networks with a suitable graph filter, the proposed RGTN models can outperform a classical RNN at a drastically lower parameter complexity, especially in the multi-modal setting.
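To illustrate why an RNN's hidden-state recursion can be read as a graph filter over time, the sketch below takes the simplest possible case: a scalar, purely linear RNN `h_t = w * h_{t-1} + u * x_t` with no activation function. Time steps are treated as nodes of a directed path graph whose shift matrix `S` passes information only forward in time. Unrolling the recursion gives the polynomial filter `H = sum_k w^k S^k`. This is a minimal sketch under those assumptions, not the paper's multi-linear filter or its tensor network generalization. The function names `linear_rnn`, `directed_path_shift` and `graph_filter` are hypothetical.

```python
import numpy as np

def linear_rnn(x, w, u):
    """Scalar linear RNN (assumed, no nonlinearity): h_t = w*h_{t-1} + u*x_t, h_{-1} = 0."""
    x = np.asarray(x, dtype=float)
    h = np.zeros(len(x))
    prev = 0.0
    for t, x_t in enumerate(x):
        prev = w * prev + u * x_t
        h[t] = prev
    return h

def directed_path_shift(T):
    """Shift matrix of a directed path graph over T time steps.

    S[t, t-1] = 1, so (S @ z)[t] = z[t-1]: information flows forward in time only.
    """
    return np.eye(T, k=-1)

def graph_filter(x, w, u):
    """Apply the polynomial graph filter H = sum_{k=0}^{T-1} w^k S^k to u*x.

    S is nilpotent (S^T = 0), so truncating the sum at T-1 is exact.
    """
    x = np.asarray(x, dtype=float)
    T = len(x)
    S = directed_path_shift(T)
    H = np.zeros((T, T))
    S_k = np.eye(T)  # S^0
    for k in range(T):
        H += (w ** k) * S_k
        S_k = S_k @ S  # advance to S^(k+1)
    return H @ (u * x)
```

For example, with `x = [1.0, 2.0, 0.5, -1.0]`, `w = 0.5` and `u = 2.0`, both functions return `[2.0, 5.0, 3.5, -0.25]`. The filter view makes the directional flow explicit: `H` is lower triangular, so each output depends only on current and past inputs.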
