Recovering from Biased Data: Can Fairness Constraints Improve Accuracy?


Dec 02, 2019

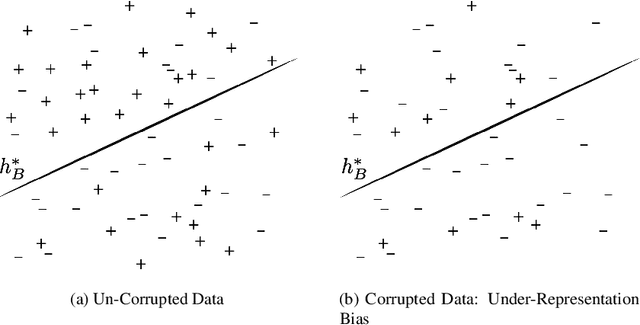

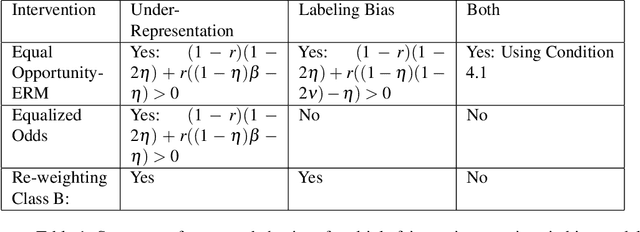

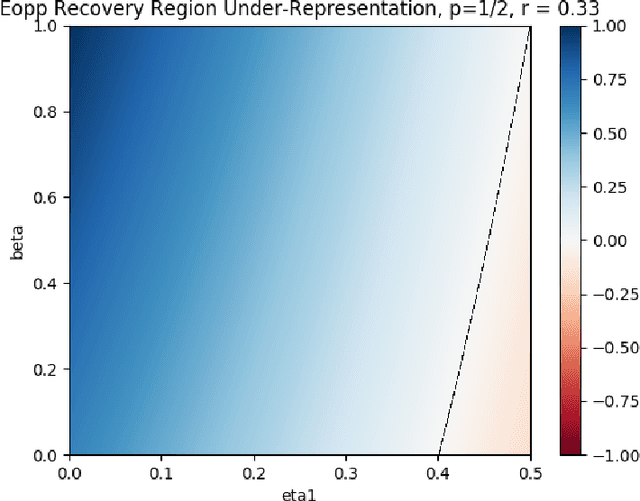

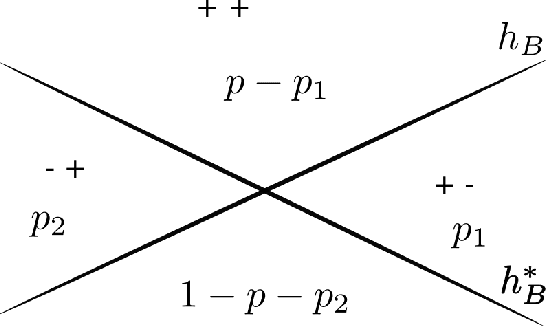

Multiple fairness constraints have been proposed in the literature, motivated by a range of concerns about how demographic groups might be treated unfairly by machine learning classifiers. In this work we consider a different motivation: learning from biased training data. We posit several ways in which training data may be biased, including a noisier or negatively biased labeling process for members of a disadvantaged group, a decreased prevalence of positive or negative examples from the disadvantaged group, or both. Given such biased training data, Empirical Risk Minimization (ERM) may produce a classifier that is not only biased but also has suboptimal accuracy on the true data distribution. We examine the ability of fairness-constrained ERM to correct this problem. In particular, we find that the Equal Opportunity fairness constraint (Hardt, Price, and Srebro 2016) combined with ERM will provably recover the Bayes Optimal Classifier under a range of bias models. We also consider other recovery methods, including reweighting the training data, Equalized Odds, and Demographic Parity. These theoretical results provide additional motivation for considering fairness interventions even if an actor cares primarily about accuracy.
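To make the claim about Equal Opportunity concrete, here is a minimal toy simulation. It is not the paper's code or experimental setup, only a sketch under stated assumptions: one-dimensional features, a per-group threshold classifier, a hypothetical label-noise rate `eta`, and a hypothetical bias rate `nu` at which true positives in the disadvantaged group B are relabeled as negative in the training data. Equal Opportunity is approximated by requiring the two groups' true positive rates, measured on the biased training labels, to differ by at most `eps`.

```python
import itertools
import numpy as np

rng = np.random.default_rng(0)
n = 20_000           # examples per group (assumed)
eta, nu = 0.2, 0.5   # assumed label noise and positive-flip rate for group B

def sample_group(flip_positives):
    """Draw features, true labels, and (possibly biased) training labels."""
    x = rng.random(n)
    # The Bayes-optimal rule is x >= 0.5; true labels disagree with it w.p. eta.
    y_true = ((x >= 0.5) ^ (rng.random(n) < eta)).astype(int)
    y_train = y_true.copy()
    if flip_positives:
        # Labeling bias: each true positive is recorded as negative w.p. nu.
        y_train[(y_true == 1) & (rng.random(n) < nu)] = 0
    return x, y_true, y_train

xa, ya_true, ya = sample_group(False)  # group A: unbiased training labels
xb, yb_true, yb = sample_group(True)   # group B: biased training labels

grid = np.linspace(0.0, 1.0, 11)       # candidate thresholds

def err(x, y, t):
    """Error rate of the rule 'predict 1 iff x >= t' against labels y."""
    return np.mean((x >= t).astype(int) != y)

def tpr(x, y, t):
    """True positive rate of the same rule, measured against labels y."""
    return np.mean(x[y == 1] >= t)

def fit(eps):
    """Minimize training error over threshold pairs with |TPR_A - TPR_B| <= eps."""
    best, best_err = None, np.inf
    for ta, tb in itertools.product(grid, grid):
        if abs(tpr(xa, ya, ta) - tpr(xb, yb, tb)) > eps:
            continue
        e = err(xa, ya, ta) + err(xb, yb, tb)  # groups have equal size
        if e < best_err:
            best, best_err = (ta, tb), e
    return best

def true_acc(thresholds):
    """Accuracy on the true (unbiased) labels, averaged over both groups."""
    ta, tb = thresholds
    return 1 - 0.5 * (err(xa, ya_true, ta) + err(xb, yb_true, tb))

erm = fit(eps=1.0)    # eps = 1 makes the constraint vacuous: plain ERM
fair = fit(eps=0.05)  # approximate Equal Opportunity on the training labels

print("ERM thresholds (A, B): ", erm, "true accuracy:", true_acc(erm))
print("Fair thresholds (A, B):", fair, "true accuracy:", true_acc(fair))
```

Under these assumed settings, flipping half of group B's positives pushes the apparent positive rate above the threshold below one half, so plain ERM rejects all of group B. Because the flips remove positives uniformly at random, group B's true positive rate at any threshold is unchanged in expectation, and the constraint pulls the group B threshold back to the Bayes-optimal value of 0.5.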
