Reconciling saliency and object center-bias hypotheses in explaining free-viewing fixations


Mar 30, 2015

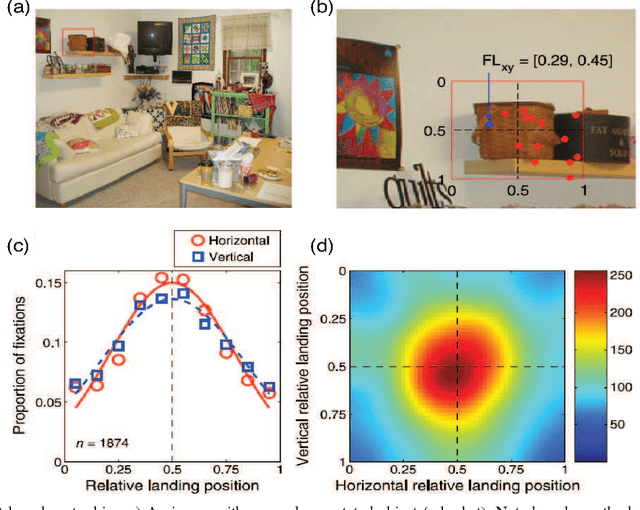

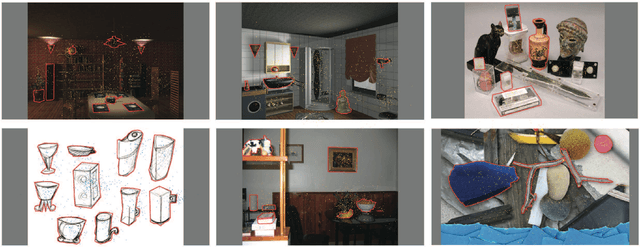

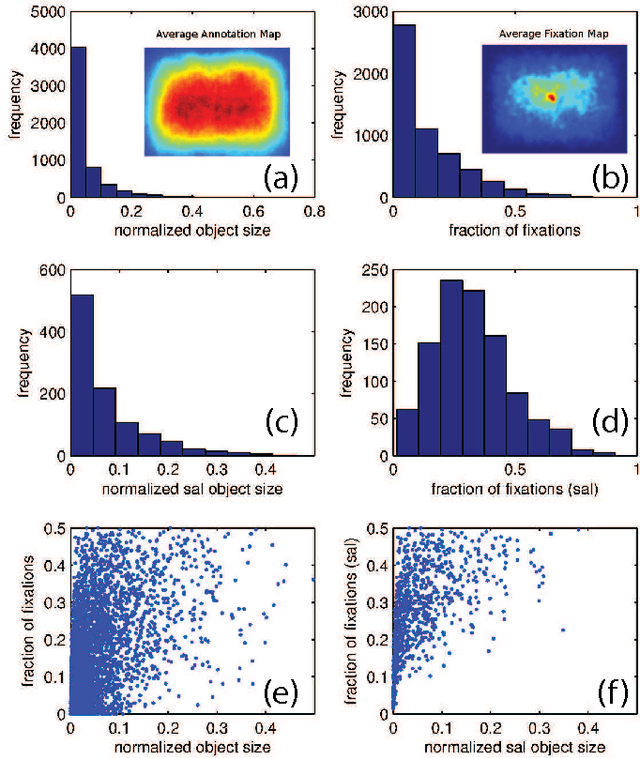

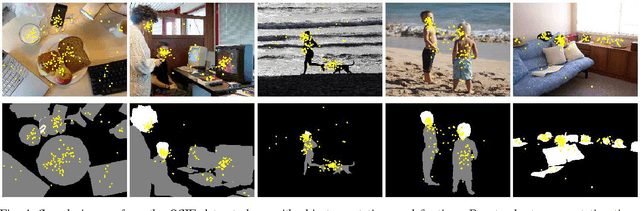

Predicting where people look in natural scenes has attracted considerable interest in computer vision and computational neuroscience over the past two decades. Two seemingly contrasting categories of cues have been proposed to influence where people look: *low-level image saliency* and *high-level semantic information*. Our first contribution is a detailed examination of these cues to confirm the hypothesis, proposed by Henderson (1993) and Nuthmann & Henderson (2010), that observers tend to look at the center of objects. We analyzed free-viewing fixation data from 17 observers on 60 fully annotated images containing various types of objects. The images covered different types of scenes, including natural scenes, line drawings, and 3D rendered scenes. Our second contribution is a simple model that combines low-level saliency with object center-bias and significantly outperforms each individual component, both on our data and on the OSIE dataset by Xu et al. (2014). The results reconcile the saliency and object center-bias hypotheses and highlight that both types of cues are important in guiding fixations. Our work opens new directions for understanding the strategies humans use when observing scenes and objects, and demonstrates how to construct models that combine low-level saliency with high-level object-based information.
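The abstract does not specify how the two cues are combined, so the following is only an illustrative sketch of the general idea and not the authors' model. It assumes that each annotated object is given as a bounding box, that object center-bias can be approximated by a Gaussian placed at each box center, and that the two maps are blended with a linear weight. The function names (`object_center_map`, `normalize`, `combine`) and the parameters `sigma_scale` and `weight` are hypothetical.

```python
import math


def object_center_map(height, width, boxes, sigma_scale=0.25):
    """Sum of 2D Gaussians, one per object, each centered on a box center.

    boxes holds (x0, y0, x1, y1) tuples. Scaling the Gaussian width with
    the box size is an assumption made for this sketch.
    """
    grid = [[0.0] * width for _ in range(height)]
    for x0, y0, x1, y1 in boxes:
        cx, cy = (x0 + x1) / 2.0, (y0 + y1) / 2.0
        sx = max((x1 - x0) * sigma_scale, 1.0)
        sy = max((y1 - y0) * sigma_scale, 1.0)
        for y in range(height):
            for x in range(width):
                dx, dy = (x - cx) / sx, (y - cy) / sy
                grid[y][x] += math.exp(-0.5 * (dx * dx + dy * dy))
    return grid


def normalize(grid):
    """Rescale a map to the range [0, 1]; a constant map becomes all zeros."""
    lo = min(min(row) for row in grid)
    hi = max(max(row) for row in grid)
    span = hi - lo
    if span == 0:
        return [[0.0] * len(row) for row in grid]
    return [[(v - lo) / span for v in row] for row in grid]


def combine(saliency, center_map, weight=0.5):
    """Linear blend of a saliency map and an object center-bias map."""
    s, c = normalize(saliency), normalize(center_map)
    return [
        [weight * sv + (1.0 - weight) * cv for sv, cv in zip(srow, crow)]
        for srow, crow in zip(s, c)
    ]


# Toy input: a synthetic "saliency" map and a single annotated object.
saliency = [[float((x * y) % 7) for x in range(8)] for y in range(6)]
center = object_center_map(6, 8, boxes=[(2, 1, 6, 5)])
combined = combine(saliency, center, weight=0.5)
```

In practice the saliency map would come from a bottom-up saliency model and the blend weight would be fitted against recorded fixations; both choices are left open here.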
