Real-Time Semantic Segmentation using Hyperspectral Images for Mapping Unstructured and Unknown Environments

Paper and Code

Mar 27, 2023

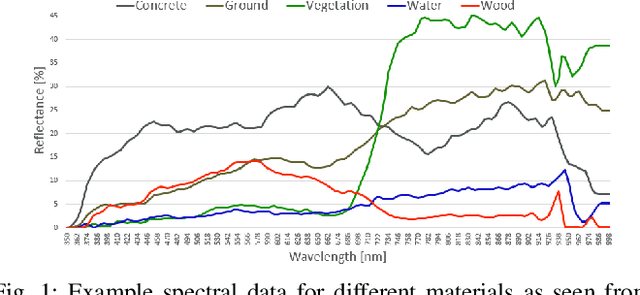

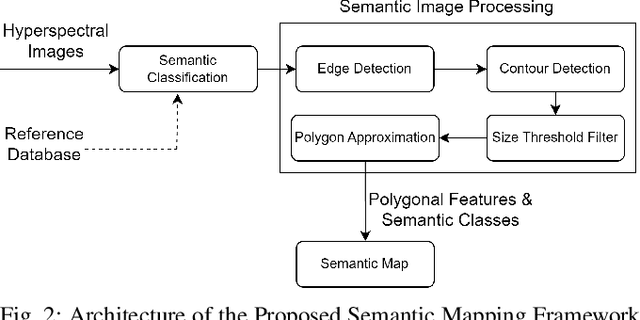

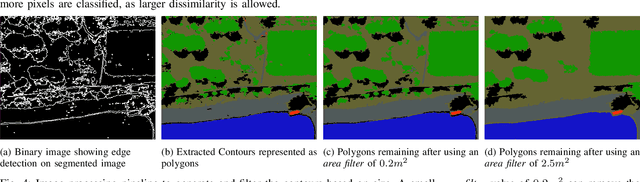

Autonomous navigation in unstructured off-road environments is greatly improved by semantic scene understanding. Conventional image processing algorithms are difficult to implement and lack robustness because off-road environments lack structure and vary widely. Neural networks and machine learning can overcome these challenges, but they require large labeled data sets for training. In our work, we propose the use of hyperspectral images for real-time pixel-wise semantic classification and segmentation, without requiring any prior training data. The resulting segmented image is processed to extract, filter, and approximate objects as polygons, using a polygon approximation algorithm. The resulting polygons are then used to generate a semantic map of the environment. Using our framework, we show the capability to add new semantic classes at run time for classification. The proposed methodology is also shown to operate in real time, producing outputs at a frequency of 1 Hz from high-resolution hyperspectral images.
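
The abstract does not name the classifier or the polygon approximation algorithm, so the following is only a minimal sketch of how such a pipeline could look, not the authors' implementation. It assumes that each class is represented by a single reference spectrum, that pixels are matched by spectral angle (a common training-free technique for hyperspectral data), and that outlines are simplified with the Ramer-Douglas-Peucker algorithm. All names (`SpectralLibrary`, `add_class`, `simplify_outline`, `max_angle`) and all spectra values are hypothetical.

```python
import math


def spectral_angle(a, b):
    """Angle in radians between two spectra; smaller means more similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        return math.pi / 2  # treat an all-zero spectrum as dissimilar
    return math.acos(max(-1.0, min(1.0, dot / norm)))


class SpectralLibrary:
    """Hypothetical class library: one reference spectrum per semantic class."""

    def __init__(self, max_angle=0.1):
        self.references = {}
        self.max_angle = max_angle  # assumed rejection threshold in radians

    def add_class(self, name, spectrum):
        # Adding a class only stores a spectrum, so no retraining is involved.
        self.references[name] = list(spectrum)

    def classify_pixel(self, spectrum):
        # Return the closest class, or None if nothing is within max_angle.
        best_name, best_angle = None, self.max_angle
        for name, ref in self.references.items():
            angle = spectral_angle(spectrum, ref)
            if angle <= best_angle:
                best_name, best_angle = name, angle
        return best_name

    def classify_image(self, cube):
        # cube is a nested list indexed as cube[row][col][band].
        return [[self.classify_pixel(px) for px in row] for row in cube]


def simplify_outline(points, tolerance):
    """Ramer-Douglas-Peucker simplification of an open polyline."""
    if len(points) < 3:
        return list(points)
    (x1, y1), (x2, y2) = points[0], points[-1]
    seg_len = math.hypot(x2 - x1, y2 - y1)

    def dist(p):
        # Perpendicular distance from p to the line through the endpoints.
        if seg_len == 0.0:
            return math.hypot(p[0] - x1, p[1] - y1)
        return abs((x2 - x1) * (y1 - p[1]) - (x1 - p[0]) * (y2 - y1)) / seg_len

    idx, dmax = max(
        ((i, dist(points[i])) for i in range(1, len(points) - 1)),
        key=lambda t: t[1],
    )
    if dmax <= tolerance:
        return [points[0], points[-1]]
    left = simplify_outline(points[: idx + 1], tolerance)
    right = simplify_outline(points[idx:], tolerance)
    return left[:-1] + right  # drop the split point duplicated in both halves


library = SpectralLibrary(max_angle=0.1)
library.add_class("vegetation", [0.1, 0.1, 0.8, 0.9])
library.add_class("soil", [0.4, 0.5, 0.5, 0.6])

cube = [[[0.2, 0.2, 1.6, 1.8], [0.9, 0.1, 0.1, 0.1]]]  # 1 x 2 pixels, 4 bands
before = library.classify_image(cube)

library.add_class("water", [0.9, 0.1, 0.1, 0.1])  # new class added at run time
after = library.classify_image(cube)

outline = simplify_outline([(0, 0), (1, 0.05), (2, 0), (2, 1), (2, 2)], 0.1)
```

In this sketch, the second pixel is left unlabeled until the hypothetical "water" spectrum is registered, which mirrors the idea of adding semantic classes at run time. The spectral angle ignores overall brightness, so the first pixel still matches "vegetation" at twice the reference intensity. A closed object boundary would need to be split into open polylines before calling `simplify_outline`, and a real-time system would vectorize the per-pixel loop rather than iterate in pure Python.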
