RDE: A Hybrid Policy Framework for Multi-Agent Path Finding Problem


Nov 03, 2023

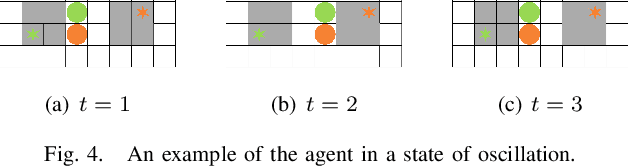

Multi-agent path finding (MAPF) is an abstract model of multi-robot navigation in warehouse automation, in which multiple robots plan collision-free paths from their start positions to their goal positions. Reinforcement learning (RL) has been used to develop partially observable, distributed MAPF policies that scale to any number of agents. However, RL-based MAPF policies often leave agents stuck in deadlock because of the dense, structured obstacles found in warehouse environments. This paper proposes a novel hybrid MAPF policy, RDE, which switches among an RL-based MAPF policy, a distance heat map (DHM)-based policy, and an escape policy. The RL-based policy handles coordination among agents. When no other agents are in an agent's field of view, the agent instead obtains its next action by querying the DHM. The escape policy, which randomly selects valid actions, helps agents break out of deadlock. We conduct simulations on warehouse-like structured grid maps using state-of-the-art RL-based MAPF policies (DHC and DCC), which show that RDE can significantly improve their performance.
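The switching idea in the abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation. The abstract does not say how deadlock is detected, how the field of view is shaped, or in what order the three policies are checked. The `stuck_steps` counter, `stuck_limit`, the square `fov_radius`, the check order, and every function name below are therefore assumptions made for illustration. The DHM is built here with a plain breadth-first search from the goal, and `rl_policy` is a placeholder callable standing in for a trained policy such as DHC or DCC.

```python
import random
from collections import deque

# Actions as (d_row, d_col): stay, up, down, left, right.
ACTIONS = [(0, 0), (-1, 0), (1, 0), (0, -1), (0, 1)]


def distance_heat_map(grid, goal):
    """BFS distance from `goal` to every free cell (0 = free, 1 = obstacle).

    Obstacles and unreachable cells keep the value None.
    """
    rows, cols = len(grid), len(grid[0])
    dist = [[None] * cols for _ in range(rows)]
    dist[goal[0]][goal[1]] = 0
    queue = deque([goal])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ACTIONS[1:]:
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == 0 and dist[nr][nc] is None):
                dist[nr][nc] = dist[r][c] + 1
                queue.append((nr, nc))
    return dist


def valid_actions(grid, pos):
    """Actions that stay on the map and off static obstacles.

    Simplification: other agents are not treated as obstacles here.
    """
    rows, cols = len(grid), len(grid[0])
    result = []
    for dr, dc in ACTIONS:
        nr, nc = pos[0] + dr, pos[1] + dc
        if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
            result.append((dr, dc))
    return result


def dhm_action(grid, dhm, pos):
    """Greedy step toward the goal: pick the valid action with the lowest DHM value."""
    def cost(action):
        value = dhm[pos[0] + action[0]][pos[1] + action[1]]
        return float("inf") if value is None else value
    return min(valid_actions(grid, pos), key=cost)


def select_action(grid, dhm, pos, others, rl_policy, stuck_steps,
                  fov_radius=4, stuck_limit=5, rng=random):
    """Hypothetical switch among the escape, RL, and DHM policies."""
    # Assumed deadlock test: the agent has not moved for `stuck_limit` steps.
    if stuck_steps >= stuck_limit:
        return rng.choice(valid_actions(grid, pos))
    # Assumed square field of view centered on the agent.
    in_view = [o for o in others
               if max(abs(o[0] - pos[0]), abs(o[1] - pos[1])) <= fov_radius]
    if in_view:
        return rl_policy(pos, in_view)
    return dhm_action(grid, dhm, pos)
```

In this sketch the DHM is computed once per agent goal and then queried at each step, so the DHM branch costs only a lookup over at most five candidate cells. Checking the escape condition first is a design choice of the sketch, made so that a stuck agent can break out even while other agents remain in view.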
